Translation

In 2020, some of the autonomous driving technologies were eliminated, some new technologies emerged, and some stood the test of time. I’ve sorted out the key technologies of autonomous driving and explained technical problems in plain language.

An autonomous driving system is, at its core, like a human driver sitting in front of a screen. Developing autonomous driving technology is essentially developing an “artificial old driver.”

The essence of all sensors in one sentence: old drivers need better vision

Iron Man himself, Musk, often stresses the importance of first principles. With so many intelligent driving sensors out there, is there something common to all of them? Could that common ground spawn new sensors in the future? I agree with this way of thinking: knowing the essence helps you predict the future.

So what is the essential feature of all sensory organs (in humans) and sensing devices (in machines)? They all receive or emit electromagnetic waves (and, in a few cases, mechanical waves). It sounds complicated, doesn’t it?

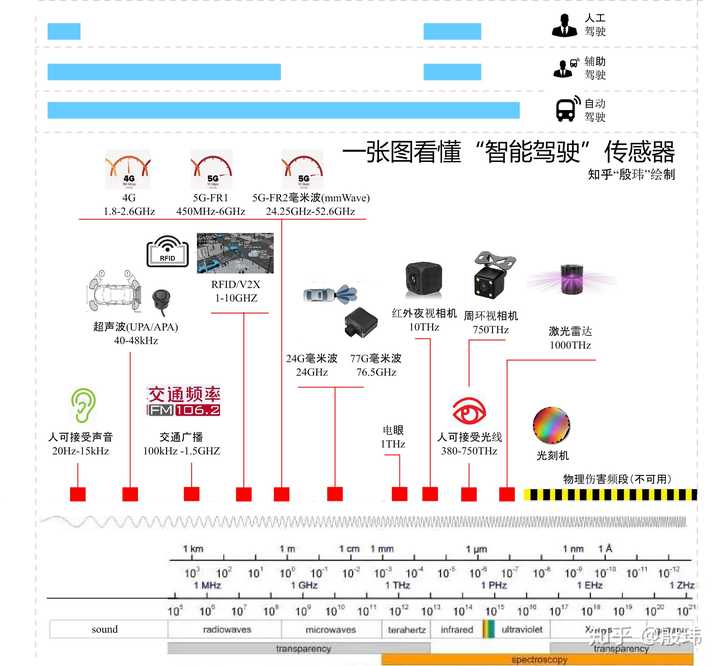

Here’s a diagram I drew to explain the concept of autonomous driving sensors. You can find a lot of similar content elsewhere, but the aim of this diagram is to make it easier to remember and understand the current state of sensors and anticipate possible changes in the future!

Electromagnetic waves run through almost all sensing devices, with the exception of those that rely on gravity (inertial sensors), mechanical waves (ultrasound), and chemistry. Different frequencies of electromagnetic waves give rise to the different sensors used in autonomous driving, assisted driving, and even manual driving: LIDAR, millimeter-wave radar, cameras, 5G, even traffic radio. Some of them measure distance, some capture visible light, and some carry information flows. It’s fascinating, isn’t it?

Looking at this diagram, let’s first focus on the general concept.

- (Yellow and black lines) We generally don’t discuss the electromagnetic frequency band these lines mark in autonomous driving. One reason is that frequencies in this band, such as ultraviolet light, can harm humans, which is why babies were kept away from ultraviolet sterilization lamps during the pandemic. Another reason is that working with this type of electromagnetic wave is extremely costly (think of our respected Huawei, held back by lithography machines). In theory, though, as the development of 5G and millimeter waves shows, the higher the frequency, the more information can be carried and the more accurate the measurements can be.

Q. Mr. Wei, will new sensors for autonomous driving appear in this frequency band in the future?

A. It may affect your safety a bit.

Q. Why? These sensors all perceive the environment outside.

A. On the road you may run into a group of zombie-like expectant fathers, mutated by ionizing radiation, chasing after you and blaming you for making their little zombie babies miscarry. From that perspective, you don’t seem very safe.

- Experienced drivers know that “visibility” is the most important thing while driving. Human driving relies mainly on vision and occasionally on hearing which, as the graph shows, covers only a narrow range of frequencies. Autonomous cars can in theory be safer because their sensors cover a much wider range. Put simply, humans cannot see at night, in fog, far away, or behind walls, but sensors can. If this normally imperceptible information is converted into a form humans can take in, for example through traffic broadcasts or driver-assistance alerts, driving becomes safer; this is called assisted driving. If all of this information is handed to a computer to make the decisions, it is called autonomous driving. Setting aside how the information is processed and looking only at the information coming in, autonomous driving is indeed safer.

- The lower the frequency (the closer to the left in the graph), the longer the wavelength and the broader the range of propagation, making it more suitable for communication applications. Generally speaking, the lower the frequency, the lower the cost of use, but the less information it can carry. Moving from 1G to 5G therefore means rising cost and technical difficulty. Because assisted driving has to keep costs low, it started with ultrasound and moved in stages to millimeter waves, cameras, and lasers. This is also why ultrasound is already on most cars, more expensive models add millimeter-wave radar and cameras, and only a few cars have lidar. (Is it clear now?)

- The higher the frequency (the closer to the right in the graph), the shorter the wavelength and the smaller the range of propagation (it attenuates quickly), but the more information it can carry and the better it is for distance measurement, so it is used more for local perception. Sensors near the visible light band are the main source of local perception information. To summarize, different electromagnetic waves interact differently with objects in the environment, for example in their interference, diffraction, and echo properties. Millimeter waves are sensitive to metals, lasers to object surfaces, infrared to heat, and cameras to visible light. These characteristics complement each other and provide redundancy, which is why fusion becomes important.

- There are many gaps in the middle of the graph. They leave room for emerging sensors and communication methods and point to where new sensors may develop. For example, the “Electric Eye” (AP-Hertz receiver) sits between millimeter waves and cameras. It can easily penetrate rain and fog for imaging, which makes up for both the low resolution of radar and the way rain and fog interfere with lasers. It is not yet mature, but its arrival is easy to foresee. 5G’s move into the millimeter-wave band is another example: the graph makes it easy to anticipate, even though it had not yet happened a few years ago. This is what thinking about the essence is for. (Is it clear now?)

Summary: Based on the differences in application cost, propagation range, propagation characteristics, and information capacity, a rich and diverse family of autonomous driving sensor technologies has emerged. (Have you thoroughly understood it?)
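The relationship between frequency and wavelength that the list above leans on is simply wavelength = c / frequency. The sketch below makes that concrete. The band values in it are illustrative assumptions chosen for the example (they are not read off the diagram), and the function names are hypothetical.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Wavelength in metres of an electromagnetic wave of the given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return SPEED_OF_LIGHT_M_S / frequency_hz


def frequency_hz(wavelength_in_m: float) -> float:
    """Frequency in hertz of an electromagnetic wave of the given wavelength."""
    if wavelength_in_m <= 0:
        raise ValueError("wavelength must be positive")
    return SPEED_OF_LIGHT_M_S / wavelength_in_m


# Illustrative values only (assumptions for this example).
EXAMPLE_BANDS_HZ = {
    "FM traffic radio (~100 MHz)": 100e6,
    "5G millimeter wave (~28 GHz)": 28e9,
    "automotive MMW radar (~77 GHz)": 77e9,
    "lidar laser (905 nm)": frequency_hz(905e-9),
    "green visible light (~550 nm)": frequency_hz(550e-9),
}

if __name__ == "__main__":
    # Sort from low to high frequency, i.e. left to right on the diagram.
    for name, freq in sorted(EXAMPLE_BANDS_HZ.items(), key=lambda kv: kv[1]):
        print(f"{name:32s} f = {freq:.3e} Hz   wavelength = {wavelength_m(freq):.3e} m")
```

Reading the output from top to bottom mirrors moving from left to right on the diagram: metre-scale radio waves first, then millimetre-scale radar, then sub-micrometre light.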

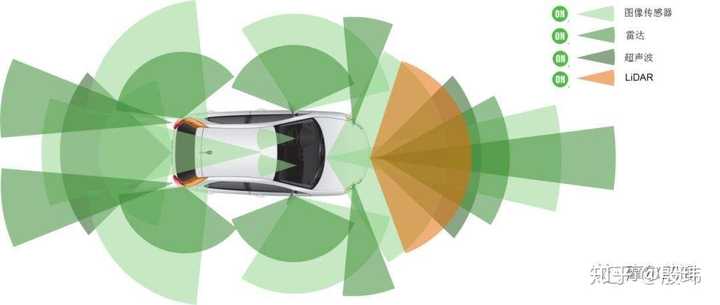

With this understanding, it is easier to remember the different sensors and their basic functions. Below is a summary of the various sensors and what they do. I won’t go into detail here and will post the corresponding content in this column later.

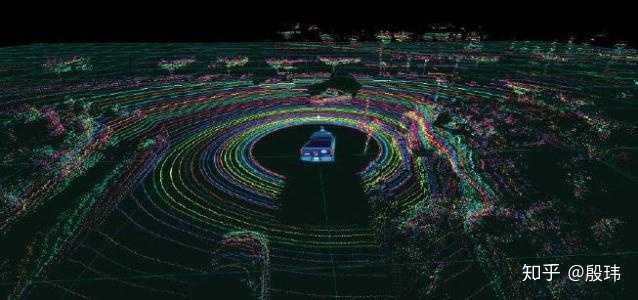

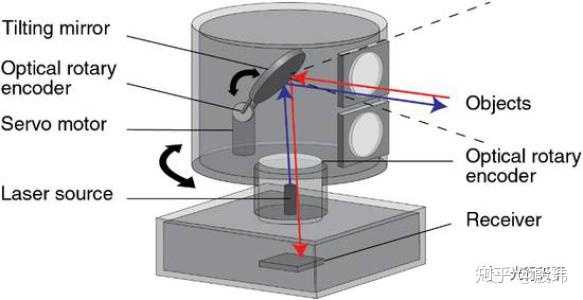

### Lidar

Lidar’s advantage is its relatively high ranging accuracy and resolution, which let it reliably detect the physical size of objects. Its disadvantages are just as apparent. The most serious is engineering maturity: although solid-state Lidar is available, laser lifetime does not yet cover a vehicle’s warranty period, and the cost is still too high. Its precise ranging is also a double-edged sword: in rain, in snow, or when a sheet of paper blows past, it measures an obstacle accurately but cannot tell what kind of object it is.

Driver: On rainy days, I’ll be a more daring driver.

Lidar: Oh no, a wall in front of the car, another wall, feeling drained.

Given its cost and performance, Lidar is currently used mostly in L4-level autonomous driving.

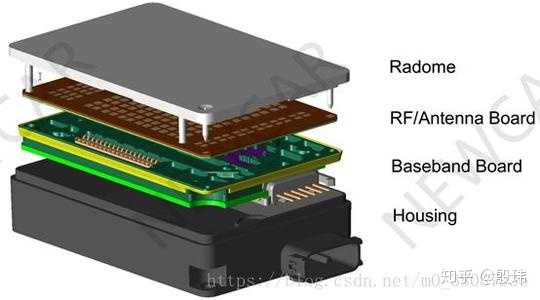

### MMW Radar

MMW radar relies on the Doppler effect. Simply put, when a fire truck wails past you, you can tell whether it is approaching or moving away even with your eyes closed. Its advantages are very accurate relative speed estimation and acceptable distance and angle measurement. It is insensitive to rain and fog (recall the earlier discussion of frequency bands), and as a sensor long in mass production it is already common in mid-priced models. Its disadvantages are also apparent. Although 4D MMW radar has been developed and deployed, its resolution still cannot match that of cameras and Lidar. Its sensitivity to metal is both an advantage and a disadvantage. Finally, because it relies on the Doppler effect, it cannot detect an object whose speed relative to the car is zero. For ADAS this is perfectly acceptable, because without relative speed there is no danger.

Driver:

“I just drove over a manhole cover.”

Radar:

“Damn, there’s a stationary vehicle, we’re going to hit it!”

Driver:

“Just passed a signpost.”

Radar:

“Damn, another stationary vehicle, you’re brave, sir!”

Driver:

“Steadily following the car ahead.”

Radar:

“Ah…finally, there’s nothing in front of us.”
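The Doppler reasoning behind this exchange can be put into numbers. For a radar that transmits and receives at the same place, the textbook approximation for the shift is f_d = 2 · v · f0 / c, where v is the relative (closing) speed and f0 the carrier frequency. The sketch below assumes a 77 GHz carrier, which is an assumption made for the example; the function names are hypothetical.

```python
# Speed of light in vacuum, in metres per second.
SPEED_OF_LIGHT_M_S = 299_792_458.0

# Assumed carrier frequency for this example (not stated in the article).
CARRIER_HZ = 77e9


def doppler_shift_hz(closing_speed_m_s: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Two-way Doppler shift seen by the radar.

    Positive closing speed (target approaching) gives a positive shift.
    Valid for speeds far below the speed of light.
    """
    return 2.0 * closing_speed_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


def closing_speed_m_s(shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Invert the relation above: recover relative speed from a measured shift."""
    return shift_hz * SPEED_OF_LIGHT_M_S / (2.0 * carrier_hz)


if __name__ == "__main__":
    # A manhole cover approached at 30 m/s versus a car followed at matched speed.
    for label, speed in [("manhole cover, closing at 30 m/s", 30.0),
                         ("car ahead, same speed as us", 0.0)]:
        print(f"{label}: shift = {doppler_shift_hz(speed):.1f} Hz")
```

The stationary manhole cover produces a large shift because the car is closing on it, while the steadily followed car produces none, which is the behavior the dialogue pokes fun at.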

### Ultrasonic Radar

Ultrasonic radar is the radar system produced in the largest volumes. Its detection distance is short (recall the frequency bands: although the wave itself can travel far, strong diffraction means too little echo returns from distant objects), but it is cheap and stable overall. It measures only a single one-dimensional quantity, distance. Almost all mass-produced cars are equipped with it, except for the Toyota Corolla. It generally comes in two types, UPA and APA. UPA has a wide beam and short range and is mainly used for front and rear parking (dark blue), while APA has a narrow beam and long range and is mainly used to guard against side collisions. Don’t underestimate this sensor: the development of Tesla’s Autopilot system has given APA a resurgence.
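The single quantity an ultrasonic sensor reports, distance, is typically derived from the echo’s round-trip time. The sketch below assumes the usual time-of-flight relation, distance = speed of sound × time / 2, and a speed of sound of 343 m/s (roughly dry air at 20 °C). Both the constant and the function name are assumptions for illustration, not details from the article.

```python
# Assumed speed of sound in dry air at about 20 degrees Celsius, in m/s.
SPEED_OF_SOUND_M_S = 343.0


def echo_distance_m(round_trip_s: float, speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to an obstacle from the round-trip time of an ultrasonic echo.

    The pulse travels to the obstacle and back, so the one-way distance
    is half of the total path length.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return speed_m_s * round_trip_s / 2.0


if __name__ == "__main__":
    for t_ms in (2.0, 10.0, 30.0):
        d = echo_distance_m(t_ms / 1000.0)
        print(f"echo after {t_ms:4.1f} ms -> obstacle at about {d:.2f} m")
```

Because sound is so much slower than light, even a few metres of range costs tens of milliseconds per measurement, which fits the short-range parking role described above.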

### Vision Camera/Infrared Camera

Iron Man’s favorite sensor is also the most talked-about sensor and the one with the most potential. Because a camera perceives the world much as humans do, cameras are also where deep learning algorithms are most widely applied. Camera hardware in mass-produced cars is already mature and inexpensive, and most of the cost lies in software. Whoever builds good vision software can therefore be the king of autonomous driving; with poor software, the camera performs poorly. Cameras share some human weaknesses, such as handling darkness and glare badly, but their resolution advantage and the implicit information in images can in theory solve any problem. After all, we humans rely on vision too.

Driver:

“Do you think the girl in front of the car is pretty?”

Camera:

“What are you talking about to the traffic cone?”

I also have to mention the night vision camera. Because of its high cost, it is not yet ready for mass production and large-scale deployment. However, because it is based on infrared technology and receives the infrared signals that objects themselves emit, it keeps working in complete darkness. It captures less color information than a conventional camera, but its potential is still enormous.

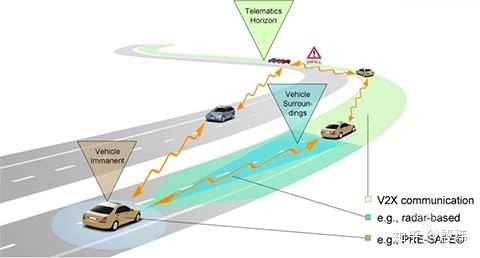

### 5G/V2X Maps

I group 5G, V2X vehicle communication, and high-precision maps together because, unlike the other sensors, they belong to the category of communication and data: what matters is not the physical device but the information flowing through it. Think of them as your own driving memories (crowdsourced maps), directions someone else has drawn for you (high-precision maps), or the latest gossip (5G/V2X). All of them are external information inputs; the only difference is that maps store static information while V2X/5G communication conveys dynamic information. Their greatest advantage is that they can carry a huge amount of content you cannot perceive on your own (mischievous kids with radar detectors, traffic jam information ahead, remote vehicle handling). People say that those who keep up with the gossip last longest at a company, because local perception is always limited. With this information you can anticipate what is coming, like having a good comrade watching your back.

However, a comrade behind you can also stab you in the back, right? The biggest problem with these sensors is that they are easy to tamper with and break into. Because the system relies heavily on external support, any exploited vulnerability can harm the vehicle. The core of vehicle network security is therefore to detect and respond to tampering with these sensing inputs. On-board perception remains essential, and the vehicle cannot rely too heavily on external sources.

Driver: In the dark, staring at the navigation, I hit the gas pedal and flew into the river.

Map: In my memory, that was a road, but perhaps it rained too much and it turned into a river.
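To make “detecting tampering” with external inputs concrete, here is a minimal sketch that attaches a message authentication code to a V2X-style message and rejects any message whose content was altered in transit. It uses a shared-secret HMAC from Python’s standard library purely as a simplification; production V2X stacks generally rely on certificate-based digital signatures instead. The message fields, the key, and the function names are all hypothetical.

```python
import hashlib
import hmac
import json


def sign_message(payload: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag over a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(key, body, hashlib.sha256).hexdigest()


def is_authentic(payload: dict, tag: str, key: bytes) -> bool:
    """Check the tag in constant time; False means the payload or tag was altered."""
    return hmac.compare_digest(sign_message(payload, key), tag)


if __name__ == "__main__":
    shared_key = b"demo-key-not-for-production"  # hypothetical key for the example
    message = {"type": "traffic_jam", "road": "G42", "distance_m": 800}
    tag = sign_message(message, shared_key)

    print("original accepted:", is_authentic(message, tag, shared_key))

    # An attacker rewrites the message but cannot recompute the tag without the key.
    forged = dict(message, type="road_clear")
    print("forged accepted:  ", is_authentic(forged, tag, shared_key))
```

A check like this only proves that the message was not modified after it was signed. It says nothing about whether the sender’s information was right in the first place, which is why on-board perception still has to have the final word.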

### GPS Receiver

GPS is a term familiar to everyone. Without it, none of the map data used for autonomous driving would work properly. GPS is actually a communication system repurposed to obtain an absolute position on the earth, and it is a very large and complex piece of systems engineering. Most car models and smartphones today carry a low-cost GPS receiver, and it is also standard equipment for L4 autonomous driving, with no other sensor able to replace it. Autonomous vehicles need centimeter-level positioning to meet their functional requirements, yet GPS is in reality a very fragile sensor. Like V2X, it is a communication process rather than a physical sensing process, so it is very easy to deceive.

Wife: You jerk, I saw your cellphone’s location, what are you doing at the massage parlor?!

Husband: A line for hardworking husbands to memorize: “You trust GPS? I’ll send you a live video right now.”
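Because a GPS fix is easy to spoof, one common-sense safeguard is to check each new fix against what the vehicle’s own sensors say it could have done. The sketch below is an illustration under assumptions, not a method from the article: it compares the speed implied by two consecutive fixes with the speed from wheel odometry and flags large disagreements. Positions are assumed to be already projected into a local frame in metres, and the names and the 5 m/s tolerance are hypothetical.

```python
import math


def implied_speed_m_s(prev_xy: tuple, curr_xy: tuple, dt_s: float) -> float:
    """Speed needed to travel between two fixes (local x/y in metres) in dt_s seconds."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    dx = curr_xy[0] - prev_xy[0]
    dy = curr_xy[1] - prev_xy[1]
    return math.hypot(dx, dy) / dt_s


def gps_fix_is_plausible(prev_xy: tuple, curr_xy: tuple, dt_s: float,
                         odometry_speed_m_s: float,
                         tolerance_m_s: float = 5.0) -> bool:
    """Accept the new fix only if its implied speed roughly matches odometry."""
    gap = abs(implied_speed_m_s(prev_xy, curr_xy, dt_s) - odometry_speed_m_s)
    return gap <= tolerance_m_s


if __name__ == "__main__":
    # Driving at about 20 m/s, with fixes arriving every 0.1 s.
    print(gps_fix_is_plausible((0.0, 0.0), (2.0, 0.0), 0.1, 20.0))    # consistent fix
    print(gps_fix_is_plausible((0.0, 0.0), (500.0, 0.0), 0.1, 20.0))  # sudden 500 m jump
```

A slow, patient spoofing attack that drifts the position gradually would pass this check, so it is best read as a first filter rather than a complete defense.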

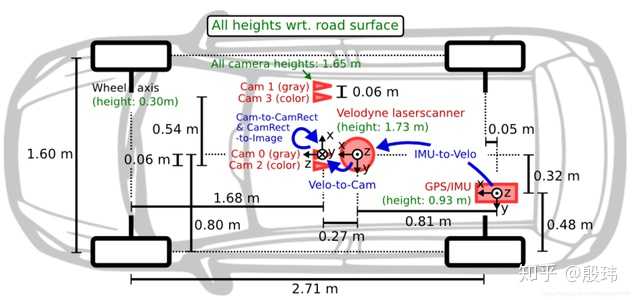

### Inertial Navigation

An inertial navigation device, also known as an IMU, is a special device that measures an object’s relative change in pose (position and heading) through inertial measurement (MEMS inertial measurement) or optical phase interference (fiber-optic inertial measurement). It plays a role similar to the cerebellum in the human body and supports all of the sensors described above. Every sensor has an interval between measurements, during which there is no perceptual input. Knowing how the vehicle has moved during that interval lets all perceptual information be extrapolated over short distances, extending the reach of every sensor in the time dimension. And because it is not affected by interference from the external environment (unlike the other sensors), it is the well-deserved first assistant of the sensor team.

The Dongfeng missile typically carries a high-cost fiber-optic inertial guidance system, which lets it rush blindly toward its target and still hit it, no matter whether you jam its GPS or alter the environment.
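The idea of filling the gaps between sensor frames with inertial data is usually called dead reckoning. The sketch below is a deliberately simplified 2D version that assumes the IMU supplies forward acceleration and yaw rate and uses plain Euler integration. The `Pose2D` type, the `propagate` function, and the 100 Hz rate are hypothetical choices for the example, and sensor bias and drift are ignored.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    x_m: float          # position along the x axis, metres
    y_m: float          # position along the y axis, metres
    heading_rad: float  # direction of travel, radians from the x axis
    speed_m_s: float    # forward speed, metres per second


def propagate(pose: Pose2D, accel_m_s2: float, yaw_rate_rad_s: float, dt_s: float) -> Pose2D:
    """Advance the pose by one IMU sample using simple Euler integration."""
    return Pose2D(
        x_m=pose.x_m + pose.speed_m_s * math.cos(pose.heading_rad) * dt_s,
        y_m=pose.y_m + pose.speed_m_s * math.sin(pose.heading_rad) * dt_s,
        heading_rad=pose.heading_rad + yaw_rate_rad_s * dt_s,
        speed_m_s=pose.speed_m_s + accel_m_s2 * dt_s,
    )


if __name__ == "__main__":
    # Bridge a 100 ms gap between two camera frames with ten 10 ms IMU samples.
    pose = Pose2D(x_m=0.0, y_m=0.0, heading_rad=0.0, speed_m_s=10.0)
    for _ in range(10):
        pose = propagate(pose, accel_m_s2=0.0, yaw_rate_rad_s=0.1, dt_s=0.01)
    print(pose)
```

Each step only needs the previous pose and one IMU sample, which is why this kind of estimate keeps running when every externally dependent sensor is blinded. Over longer intervals the integration errors accumulate, so it complements the other sensors rather than replacing them.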

### Summary: Perception Part One

The core of this section can be summed up in one sentence: an intelligent entity perceives the world through receiving and emitting different electromagnetic waves, providing self-driving cars with more information than humans have, making autonomous vehicles safer in the process. However, because some sensors are reliable while others are not, sensor fusion is crucial. That leads us to the topic of software-defined cars, where sensors are connected to a physical brain (domain controller) to enable autonomous vehicles to think. We will also discuss the specific technologies that bring this thinking to life.
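As a small taste of what fusion means when some sensors are more reliable than others, the sketch below combines several distance estimates by weighting each one by the inverse of its variance. This is a standard textbook technique chosen here for illustration, not a method described in the article, and the sensor variances in the example are invented.

```python
def fuse(measurements: list) -> tuple:
    """Inverse-variance weighted fusion of independent estimates.

    `measurements` is a list of (value, variance) pairs with variance > 0.
    Returns (fused_value, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    if any(var <= 0 for _, var in measurements):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in measurements]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused_value, 1.0 / total


if __name__ == "__main__":
    # Hypothetical distance to the car ahead, in metres, with invented variances.
    radar = (25.3, 0.04)   # precise range
    camera = (27.0, 4.0)   # much noisier range estimate
    value, variance = fuse([radar, camera])
    print(f"fused distance = {value:.2f} m, variance = {variance:.3f}")
```

The fused estimate sits close to the more trustworthy sensor, and its variance is lower than either input’s, which is the basic payoff of combining complementary sensors.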

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.