“Intelligent Driving Strategy Upgrade Conference” Held by Great Wall Motors in Beijing on December 30, 2020

This picture summarizes the planning goals and approximate timing of Great Wall Motors’ intelligent driving strategy.

Zhang Kai, head of intelligent driving at Great Wall Motors, revealed at the conference that Coffee Intelligence Driving will debut on WEY brand flagship models, with release expected in early 2021. The WEY brand will roll it out gradually during 2021, and from the second half of 2021 models from other Great Wall Motors brands will gradually adopt it as well.

After summarizing this table, I asked my colleagues in the group what they thought.

“Is it so difficult to have the first LIDAR with NOH capability? What is this L3 concept?”

“Another pie in the sky.”

“Other companies have spent so long and still can’t get it right, but Great Wall can do it as soon as they start?”

“Making something like this is not difficult, L3 and L4 are both vague concepts, the key is how’s the experience?”

“Never mind Tesla, isn’t it too easy for Great Wall to surpass Mobileye?”

These are all our doubts.

Fortunately, at the conference, Great Wall not only announced its hardware plans for intelligent driving, but also explained the logic behind how it is building the system.

While everyone was skeptical, I first tried to reconstruct the thinking of Great Wall Motors' engineers as presented at the conference.

Right Idea

“To be a leader in smart driving in the intelligent era, we need to focus on three dimensions: safety, scenarios, and user experience. Let me explain one by one,” said Zhen Longbao, director of intelligent driving at Great Wall Motors, at the beginning.

After hearing the words “scenarios” and “user experience”, I was pleasantly surprised that “user experience” was given one of the most prominent positions in a technical conference like this.

What surprised me even more was that Zhen Longbao did not mention “L3” and “L4” at all in the introduction of the “scenario” environment.

For Great Wall Motors, breaking away from the engineer mindset, setting aside the “regulatory” definition of scenarios, and returning to scenarios as the user experiences them is the first step in the right direction.

At the conference, Zhen Longbao introduced: “We use the organic combination of dynamic, natural, and static environments to construct the scene system of Coffee Intelligence Driving with a 5-level structure, each level is composed of countless subdivided dimensions.”

## 5 Layers of Environment and User Experience

The engineers have divided the external driving environment into 5 layers to abstract and combine all the possible scenarios that we might encounter while driving. By expanding the dimension units in each layer and defining features more precisely, more and more scenarios can be combined to learn new segmented scenes. Ultimately, all scenarios can be converged through simple responses such as normal, slowing down, stopping, changing lanes, and overtaking.
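To make the combinatorial idea concrete, here is a minimal Python sketch. The layer names and values are hypothetical placeholders, since the conference material quoted here does not name the five layers. It only illustrates that adding one unit to any layer multiplies the number of scenarios that can be composed.

```python
from itertools import product
from math import prod

# Hypothetical layer names and values, invented purely for illustration.
# The article only says there are 5 layers, each with many dimensions.
LAYERS = {
    "road_type": ["highway", "urban"],
    "road_furniture": ["none", "cones", "toll_station"],
    "traffic": ["free", "slow_vehicle_ahead"],
    "weather": ["clear", "rain"],
    "lighting": ["day", "night"],
}


def enumerate_scenarios(layers):
    """Build every scenario as one value picked from each layer."""
    names = list(layers)
    return [dict(zip(names, combo)) for combo in product(*layers.values())]


def count_scenarios(layers):
    """Number of scenarios is the product of the layer sizes."""
    return prod(len(values) for values in layers.values())


scenarios = enumerate_scenarios(LAYERS)
base_count = count_scenarios(LAYERS)  # 2 * 3 * 2 * 2 * 2 = 48

# Adding a single new unit ("fog") to one layer grows the whole space.
extended = {**LAYERS, "weather": LAYERS["weather"] + ["fog"]}
extended_count = count_scenarios(extended)  # 2 * 3 * 2 * 3 * 2 = 72

print(base_count, extended_count)
```

In a real system each composed scenario would then be mapped to one of the few responses the text lists (normal, slowing down, stopping, changing lanes, overtaking); that mapping is not sketched here because the article does not describe it.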

Currently, Great Wall Motors has developed multiple scenes, such as passing vehicles, avoiding cones, driving in limited speed areas, passing toll stations, changing lanes with avoidance, changing lanes with multiple vehicles, and autonomous driving in urban areas.

Through this approach, the logic of assisted driving is designed to solve problems for users during the driving process, rather than being limited to achieving L3 autonomous driving in specific complex scenarios.

Next year, the WEY brand will introduce the Navigation On Highway pilot (NOH) function, similar to Tesla’s NoA and NIO’s NOP. Great Wall Motors is the first traditional automaker to offer such a function.

User Experience and 5 Cost Indicators

Great Wall Motors measures the user experience with five cost indicators:

- Operational cost

- Cognitive cost

- Safety cost

- Time cost

- Financial cost

Together, these cost indicators determine the overall subjective experience during use. For example, to keep operational cost low, the driver needs a convenient way to activate and monitor the assisted driving function without being distracted. To reduce cognitive cost, the interface must make the system's state clearly visible, and lateral and longitudinal control must feel comfortable. And to minimize time cost, the system needs an efficient strategy for accelerating, decelerating, and changing lanes.

If Great Wall Motors can deliver on these five cost indicators, the user experience will be very positive. During the launch event, different driving modes were also demonstrated.

However, the success of this assisted driving system depends on both hardware and technology.

Redundancy and Safety

Engineering safety is the most important factor in building confidence in assisted driving. To achieve this goal, Great Wall Motors has implemented redundancy in perception, control, architecture, power, braking, and steering. Rather than emphasizing the hardware itself, they highlighted the importance of safety.

### The Most Advanced LiDAR?

The hardware configuration of Coffee Intelligence Drive at the perception level is as follows:

- LiDAR x3

- MMW radar x8

- Camera x8

- Ultrasonic radar x12

- HDMap

- V2X

This is the richest perception hardware configuration that you can see in non-fully automated driving models.

Based on 8 cameras and 8 MMW radars, it can achieve 360-degree vision and radar coverage around the vehicle.

At the front of the vehicle, Coffee Intelligence Drive also has 3 LiDARs to assist cameras and MMW radars to achieve more powerful perception capabilities.

The LiDAR used by Great Wall Motors is a customized solid-state LiDAR jointly developed with IBEO, a German company. It uses flash technology, with an angular resolution of 0.05°x0.07° and a distance resolution of 0.05 m.

The LiDAR can detect obstacles within a range of 130 meters and can promptly detect objects on the road surface such as tires and traffic cones, giving the vehicle at least 4 seconds of decision-making and planning time compared with ordinary MMW radar.

If calculated at a speed of 100 km/h, 4 seconds means a driving distance of 111 meters.
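The arithmetic can be checked with a short Python sketch. It assumes a constant speed and a stationary obstacle, and the function names are illustrative only. It also shows how long the stated 130-meter detection range lasts at the same speed.

```python
def kmh_to_ms(speed_kmh):
    """Convert km/h to m/s."""
    return speed_kmh * 1000 / 3600


def distance_travelled_m(speed_kmh, seconds):
    """Distance covered at constant speed in the given time."""
    return kmh_to_ms(speed_kmh) * seconds


def time_to_reach_s(distance_m, speed_kmh):
    """Time needed to cover a distance at constant speed."""
    return distance_m / kmh_to_ms(speed_kmh)


# 4 seconds at 100 km/h, the case discussed in the text.
distance_in_4s = distance_travelled_m(100, 4)   # about 111.1 m

# Time until a stationary obstacle first seen at 130 m is reached.
time_to_130m = time_to_reach_s(130, 100)        # about 4.68 s

print(f"{distance_in_4s:.1f} m, {time_to_130m:.2f} s")
```

Under these assumptions, a 130-meter detection range corresponds to a little under 4.7 seconds at 100 km/h, which is consistent with the "at least 4 seconds" figure quoted above.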

The resulting improvement in experience is that the system can change lanes more calmly to avoid obstacles when traffic cones direct it to change lanes at high speed.

By the end of 2020, LiDAR had gradually become the perception hardware that automakers are competing to adopt.

At the 2020 Guangzhou Auto Show, XPeng Motors was the first to announce that its next model would be equipped with LiDAR as assisted driving perception hardware.

On December 22, at a media briefing in Haikou, Qin Lihong, the co-founder of NIO, said, “Now many brands are talking about LiDAR, but their LiDAR is weak. Everyone will see NIO’s LiDAR on January 9th.”

Li Xiang, the founder of Li Auto, has also mentioned many times in public that LiDAR and HDMap are necessary for advanced automated driving assistance.

Tesla, the industry leader, has made it clear that it will not use LiDAR and will rely on vision alone to achieve fully automated driving, but Tesla’s confidence comes from its extremely strong visual perception algorithms.

For companies without enough experience and R&D capability, relying on LiDAR is also a good choice.

So, now that the perception hardware is in place, what about the computing power?

360T-720T

At the press conference, Great Wall Motors announced that Coffee Intelligence Drive will use Qualcomm’s chipset to build a domain controller for advanced autonomous driving systems. The controller is based on the Qualcomm Ride 8540 + 9000 chip. The computing power of this platform can be scaled from 30 TOPS to 700 TOPS, while the total power consumption of the system is only 130 W.

This means that the energy efficiency of this platform reaches an astonishing 5.4 TOPS/W (700 TOPS at 130 W). How does that compare? The widely known Mobileye EyeQ4 delivers 0.83 TOPS/W, and the Mobileye EyeQ5 delivers 2.4 TOPS/W.
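The efficiency figure follows directly from the numbers quoted above. As a minimal Python sketch (the variable and function names are illustrative, and the Mobileye values are simply the ones cited in this article):

```python
def tops_per_watt(tops, watts):
    """Energy efficiency as compute throughput per watt."""
    return tops / watts


# Top configuration quoted for the Qualcomm platform: 700 TOPS at 130 W.
ride_efficiency = tops_per_watt(700, 130)

# Efficiency values for the Mobileye chips as cited in the text (TOPS/W).
cited_efficiency = {"EyeQ4": 0.83, "EyeQ5": 2.4}

# How many times more efficient the quoted Qualcomm figure is.
ratios = {chip: ride_efficiency / value for chip, value in cited_efficiency.items()}

print(f"Ride: {ride_efficiency:.2f} TOPS/W")
for chip, ratio in ratios.items():
    print(f"vs {chip}: {ratio:.1f}x")
```

On these numbers the top configuration works out to roughly 5.4 TOPS/W, about 6.5 times the cited EyeQ4 figure and a little over twice the cited EyeQ5 figure. These are peak, vendor-quoted values rather than measured performance.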

However, Qualcomm revealed at CES in January 2020 that this platform would be supplied to major customers in the first half of 2020, with mass production expected in 2023.

So in reality, when we will actually be able to experience it is still a question mark.

In addition, here are 5 slides of Coffee Intelligence Drive’s control, architecture, power supply, braking, and steering redundancy design.

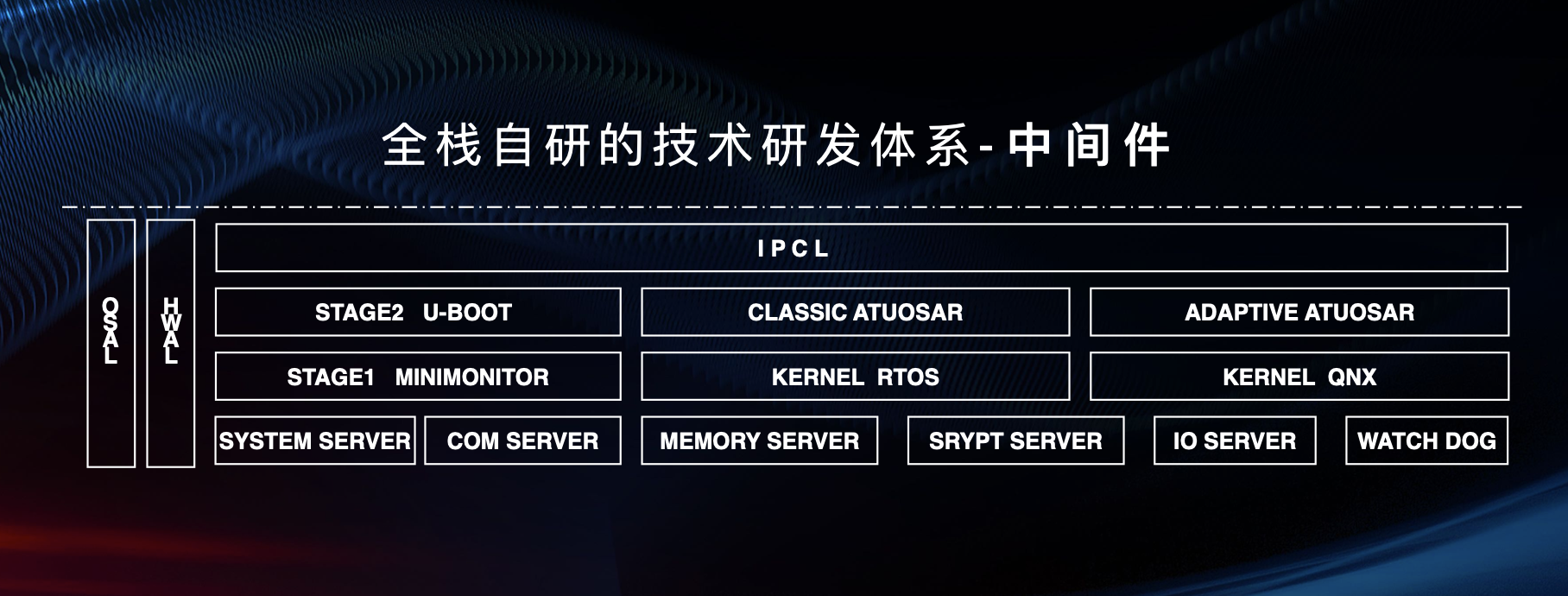

We Self-Develop Everything Except the OS and the Chip

Lastly, the technology.

This is also the biggest question we have about this press conference.

In the presentation by Zhang Kai, the head of intelligent driving at Great Wall Motors, the most frequently mentioned word was “self-developed”.

Zhang Kai said, “We have independently developed full-stack technology for intelligent driving, and we have also adopted a self-developed mode for our autonomous driving controllers and middleware systems. We can say that we have done everything except for the OS and the chips. We have completed the engineering deployment of automated driving perception, fusion, prediction, and planning decision-making software and have already completed road tests for millions of kilometers.”

Yes, you heard it right. From perception, fusion, prediction, to planning decision-making, Great Wall plans to do it all by themselves.

Whether to self-develop or not, there is no absolute right or wrong. The key is whether it’s suitable for oneself.

Choosing the “self-develop” path means that Great Wall wants to take more control into its own hands, but it also places extremely high demands on the company’s R&D.

Tesla launched Autopilot 1.0 in 2014 and went through six years of rapid iteration, accumulating data from millions of vehicles, and finally achieved its current state through the unremitting efforts of numerous Silicon Valley talents.

According to Great Wall’s introduction, the intelligent R&D team will expand to 2,000 people by 2021.

I do not deny the experience and talent that Great Wall has accumulated over the years, but in this new field, Great Wall’s previous experience may not be fully applicable. Moreover, Great Wall’s current R&D organization does not have anyone with the level of expertise of Andrej Karpathy at Tesla.

At least, from the results so far, at the end of 2020, Great Wall still does not have a highly capable assisted driving feature for the mass market. How can we believe that Great Wall can come up with an amazing product in the first half of 2021?

Final Thoughts:

Wang Xing once said that China’s automotive industry will eventually become a pattern of three state-owned enterprises, three private enterprises, and three emerging automakers.

Half a month ago, Great Wall officially released the Lemon Hybrid DHT platform, and half a month later, it announced its intelligent driving strategy, filling in the last piece of the technology puzzle for Great Wall to face the new era.

At the end of 2020, Geely, BYD, and Great Wall Motors have all started to publicly announce their plans for future development in intelligent and electrified vehicles. Judging from the content of the press conference, Great Wall Motors has developed a powerful set of autonomous driving assistance functions, which has raised expectations. Now it remains to be seen how these products will be put into practice.

The road to reform is difficult and long.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.