In my previous article, I introduced the golden combination of GPS and IMU, which provides stable positioning for autonomous driving on urban roads and answers the first big question: "Where am I?"

For an unmanned vehicle to behave like a human driver, slowing down or stopping for obstacles and red lights and accelerating or continuing on green lights and clear roads, it must use sensors to perceive its surroundings.

Four types of sensors are currently used on unmanned vehicles: cameras, LiDAR, millimeter-wave radar, and ultrasonic radar. Each sensor is mounted at a different position on the vehicle according to its sensing characteristics.

Today, I will take a detailed look at the cameras used on unmanned vehicles. I will use the camera and camera module open-sourced in Baidu Apollo 2.0 as the example.

Below is the camera recommended for Baidu Apollo 2.0, the LI-USB30-AR023ZWDR:

Apollo 2.0 uses two identical cameras that connect to the controller through a USB 3.0 adapter and transmit color images. One lens has a focal length of 6 mm and the other 25 mm. They are used to detect traffic lights at close range and at long range, respectively.
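Why pair a 6 mm lens with a 25 mm lens? Under the ideal pinhole model, the horizontal field of view is 2 * atan(w / (2f)) for sensor width w and focal length f. A longer focal length therefore narrows the view but packs more pixels into each degree. The minimal Python sketch below evaluates both lenses. The sensor width and image width are hypothetical values chosen for illustration, not figures from the camera's datasheet.

```python
import math

# Hypothetical sensor geometry for illustration only; not taken from the
# LI-USB30-AR023ZWDR datasheet.
SENSOR_WIDTH_MM = 5.6
IMAGE_WIDTH_PX = 1920


def horizontal_fov_deg(focal_length_mm: float,
                       sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Horizontal field of view of an ideal pinhole (rectilinear) camera, in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))


def pixels_per_degree(focal_length_mm: float) -> float:
    """Average number of horizontal pixels covering one degree of the scene."""
    return IMAGE_WIDTH_PX / horizontal_fov_deg(focal_length_mm)


if __name__ == "__main__":
    # Focal lengths of the two Apollo 2.0 traffic-light cameras.
    for f_mm in (6.0, 25.0):
        print(f"f = {f_mm:4.1f} mm: FOV = {horizontal_fov_deg(f_mm):5.1f} deg, "
              f"{pixels_per_degree(f_mm):6.1f} px/deg")
```

With these assumed numbers, the 6 mm lens sees about 50 degrees and the 25 mm lens about 13 degrees. A distant traffic light therefore spans roughly four times as many pixels in the 25 mm image.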

Camera Classification

Cameras are mainly classified into four types according to their lenses and placement: single (monocular) cameras, stereo (binocular) cameras, triocular (triple) cameras, and surround-view (panoramic) cameras.

Single Camera

The single-camera module contains only one camera and one lens.

Many image algorithms were developed for single cameras, so the algorithms for single cameras are more mature than those for other camera types.

However, single cameras have two inherent defects.

The first is that the field of view depends entirely on the lens. A short-focal-length lens has a wide field of view but cannot see far. A long-focal-length lens sees far but has a narrow field of view. A single camera therefore usually uses a lens with a moderate focal length.

The second is that the accuracy of monocular ranging is lower.# Triocular Camera

Due to the limitations of monocular and binocular cameras, the widely used camera solution in unmanned driving is the triocular camera. The triocular camera is actually a combination of three single cameras with different focal lengths. The following is an image of the triocular camera installed under the windshield of the Tesla AutoPilot 2.0.

According to the different focal lengths, the range perceived by each camera is different.

Stereo Camera

The image produced by a camera is a perspective projection, where distant objects are imaged smaller. Objects closer to the camera require describing hundreds or even thousands of pixels, while the same object in the distance may only need a few pixels to be described. This characteristic causes the farther away the object, the larger the distance represented by a single pixel. Therefore, for monocular cameras, the farther away the object, the lower the accuracy of distance measurement.

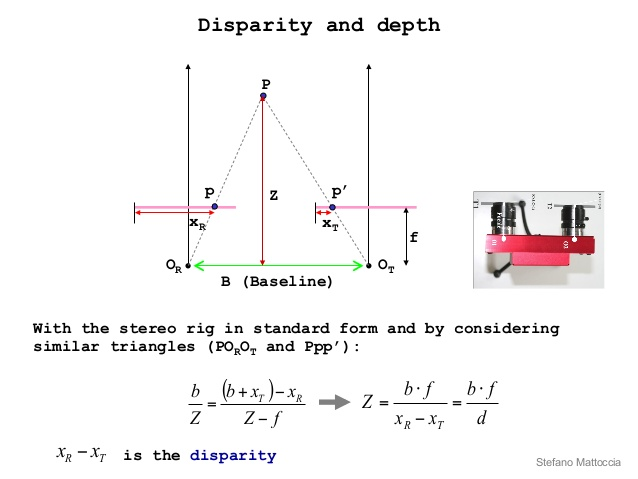

To overcome the limitations of monocular cameras, stereo cameras were born. When two cameras close to each other shoot an object, the pixel offset of the same object on the imaging plane of the cameras can be obtained. With information such as pixel offset, camera focal length, and actual distance between the two cameras, the distance of the object can be obtained by mathematical calculation. The following is a schematic diagram.

Image source: https://www.slideshare.net/DngNguyn43/stereo-vision-42147593

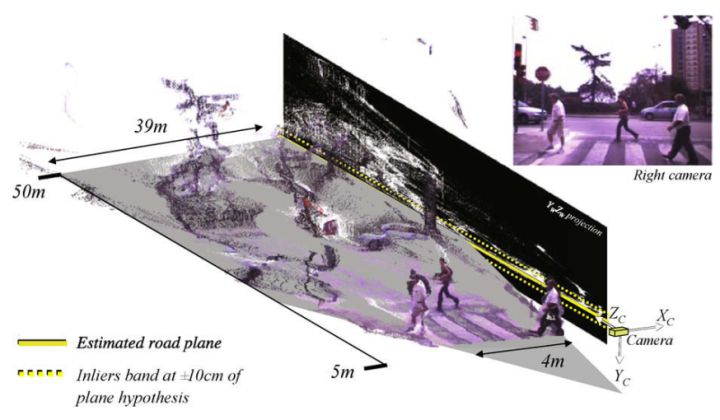

When the principle of stereo vision is applied to each pixel of the image, the depth information of the image can be obtained, as shown in the following figure.

Image source: Computer Vision and Image Understanding “2D-3D-based on-board pedestrian detection system”

The addition of depth information not only facilitates the classification of obstacles but also improves the accuracy of high-precision map positioning and matching.

Although stereo cameras can obtain higher-precision distance measurement results and provide image segmentation capabilities, like monocular cameras, the field of view of the lens depends entirely on the lens. In addition, the principle of stereo vision requires more requirements for the installation position and distance of the two lenses, which can cause trouble for camera calibration.The perception range of the Tesla AutoPilot 2.0 visual sensor for the three cameras is as follows, from far to near: the front narrow-view camera with a perception range of up to 250 meters, the front main-view camera with a perception range of up to 150 meters, and the front wide-view camera with a perception range of up to 60 meters.

With a single camera, perception range always involves a trade-off between distance and field of view. Three-camera systems handle this trade-off better and are widely used in the industry.

But what about ranging accuracy?

The three cameras have different fields of view, so each one handles a different distance band: the wide-view camera ranges near objects, the main-view camera medium-distance objects, and the narrow-view camera distant objects. This way, each camera plays to its strengths.
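This division of labor follows from the perspective argument made for monocular cameras earlier. One common simplification is to range an object on a flat road from the image row where it touches the ground. This is an assumption here, not necessarily what Tesla does. With camera height h and focal length f in pixels, a contact point r pixels below the horizon lies at distance Z = f * h / r. The sketch below measures how much Z shifts when r is misread by one pixel. It uses three hypothetical focal lengths to stand in for the wide, main, and narrow cameras.

```python
# Hypothetical parameters for illustration; not Tesla's actual intrinsics
# or mounting height.
CAMERA_HEIGHT_M = 1.4
FOCAL_LENGTH_PX = {"wide": 800.0, "main": 1600.0, "narrow": 3200.0}


def ground_distance(row_offset_px: float, focal_px: float,
                    height_m: float = CAMERA_HEIGHT_M) -> float:
    """Distance to a ground point imaged row_offset_px pixels below the horizon.

    Assumes a flat road and a camera whose optical axis is parallel to it.
    """
    if row_offset_px <= 0:
        raise ValueError("point must be below the horizon")
    return focal_px * height_m / row_offset_px


def one_pixel_error(distance_m: float, focal_px: float,
                    height_m: float = CAMERA_HEIGHT_M) -> float:
    """Change in estimated distance if the ground-contact row is misread by one pixel."""
    row_offset = focal_px * height_m / distance_m
    return ground_distance(row_offset - 1.0, focal_px, height_m) - distance_m


if __name__ == "__main__":
    # Distances taken from the Tesla AutoPilot 2.0 ranges quoted above.
    for distance in (60.0, 150.0, 250.0):
        errors = ", ".join(f"{name}: {one_pixel_error(distance, f):6.1f} m"
                           for name, f in FOCAL_LENGTH_PX.items())
        print(f"{distance:5.0f} m -> {errors}")
```

The one-pixel error grows roughly as Z squared divided by (f * h). A longer focal length keeps far-range estimates usable, which fits the narrow camera being assigned to distant targets.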

The downside of three-camera systems is that all three cameras must be calibrated, which means more work. The software must also process data from all three cameras, which places high demands on the algorithms.

Surround View Camera

The cameras described so far all use non-fisheye lenses. The surround-view camera, in contrast, uses a fisheye lens and is mounted facing downward. The "360° panoramic view" function on some high-end models is built on surround-view cameras.

Four fisheye cameras capture the images. They are mounted at the front of the vehicle, on the left and right side mirrors, and at the rear. The captured images look like the one shown below. Fisheye cameras achieve a very wide field of view at the cost of severe image distortion.

Image source: https://www.aliexpress.com/item/360-bird-View-Car-DVR-record-system-with-4HD-rear-backup-front-side-view-camera-for/32443455018.html
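The distortion comes from how a fisheye lens maps incoming ray angles to image positions. An ideal rectilinear (pinhole) lens places a ray at angle θ from the optical axis at radius r = f * tan θ, which diverges as θ approaches 90 degrees. The equidistant fisheye model uses r = f * θ instead. It is only one of several fisheye models; equisolid-angle and stereographic are others. Real lenses are calibrated with more general models, so the Python sketch below only illustrates the principle. Its focal length is hypothetical.

```python
import math

# Illustrative focal length in pixels (hypothetical).
FOCAL_PX = 300.0


def pinhole_radius(theta_rad: float, f: float = FOCAL_PX) -> float:
    """Image radius of a ray at angle theta from the optical axis, pinhole model."""
    if theta_rad >= math.pi / 2:
        return math.inf  # rays at or beyond 90 degrees never reach a flat image plane
    return f * math.tan(theta_rad)


def equidistant_fisheye_radius(theta_rad: float, f: float = FOCAL_PX) -> float:
    """Image radius under the equidistant fisheye model r = f * theta."""
    return f * theta_rad


if __name__ == "__main__":
    for deg in (10, 30, 60, 80, 90):
        theta = math.radians(deg)
        print(f"{deg:3d} deg: pinhole r = {pinhole_radius(theta):9.1f} px, "
              f"fisheye r = {equidistant_fisheye_radius(theta):6.1f} px")
```

The fisheye keeps even 90-degree rays at a finite radius, but it compresses the periphery of the image. This is why straight lines near the edges of a fisheye image appear curved.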

Using calibration, each image can be projected into a top-down view. The four views are then stitched together, with a top-down image of the car placed in the middle. The result is a view looking straight down from above, as shown in the picture below.
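Once lens distortion has been removed, mapping a camera's view of the ground onto a top-down view is a plane-to-plane projective transformation: a 3x3 homography H. H can be estimated from at least four ground points whose image positions and top-down positions are known, for example markers laid out during calibration. The Python sketch below uses the basic direct linear transform (DLT) with NumPy. The point coordinates are made up for illustration. In practice, OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` cover these steps, and a robust implementation would normalize coordinates first.

```python
import numpy as np


def estimate_homography(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Estimate H (3x3) with dst ~ H @ src from >= 4 point pairs via the DLT."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def apply_homography(h: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map N x 2 points through H and convert back from homogeneous coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]


if __name__ == "__main__":
    # Hypothetical calibration: four ground markers as seen in the undistorted
    # camera image (a trapezoid) and where they belong in the top-down view.
    image_pts = np.array([[300.0, 400.0], [340.0, 400.0], [420.0, 480.0], [220.0, 480.0]])
    topdown_pts = np.array([[100.0, 0.0], [200.0, 0.0], [200.0, 100.0], [100.0, 100.0]])
    H = estimate_homography(image_pts, topdown_pts)
    print(np.round(apply_homography(H, image_pts), 3))
```

To render the full top-down image, each output pixel is usually mapped back through the inverse of H and sampled from the camera image. `cv2.warpPerspective` does this internally.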

The surround-view camera has a short perception range. It is mainly used to detect obstacles within 5-10 meters of the vehicle and to recognize parking lines during autonomous parking.

Camera Functions

Cameras on autonomous vehicles serve two main functions: perception and positioning.

Perception

In autonomous driving, the main function of cameras is to perceive many kinds of environmental information. I will use Mobileye, one of the companies internationally recognized as best at vision, as an example of what cameras can do. Let's start with a video.

Mobileye Vision Monitoring Results

If you would rather not use up your mobile data, just look at the pictures.

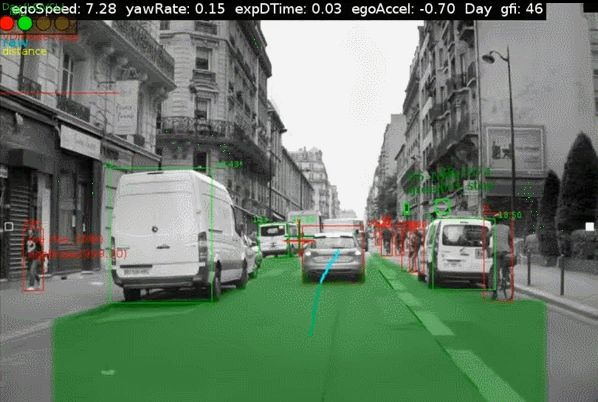

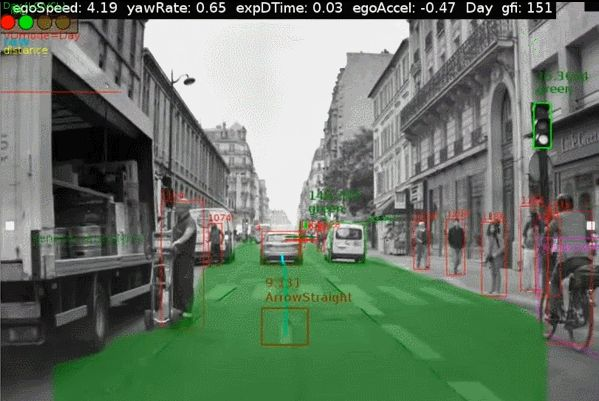

As the pictures show, cameras can provide the following perception capabilities:

① Lane

The dark green lines in the picture. Lanes are the most basic information a camera can perceive. Lane detection makes the lane-keeping function on highways possible.
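Lane-detection output is often represented as a low-order polynomial for each lane line in the vehicle's road-plane coordinates. Lane keeping then steers to reduce the vehicle's offset from the lane center. The Python sketch below assumes the detected lane-marking points have already been projected onto the road plane. The polynomial form and the synthetic points are illustrative assumptions, not Mobileye's output format.

```python
import numpy as np


def fit_lane(forward_m: np.ndarray, lateral_m: np.ndarray) -> np.ndarray:
    """Fit lateral = a * forward**2 + b * forward + c and return [a, b, c]."""
    return np.polyfit(forward_m, lateral_m, deg=2)


def lane_center_offset(left: np.ndarray, right: np.ndarray,
                       lookahead_m: float = 0.0) -> float:
    """Lateral position of the lane center relative to the vehicle at lookahead_m.

    forward is the distance ahead of the vehicle; lateral is positive to the left.
    A positive result means the lane center lies to the vehicle's left.
    """
    center = (np.polyval(left, lookahead_m) + np.polyval(right, lookahead_m)) / 2.0
    return float(center)


if __name__ == "__main__":
    # Synthetic lane-marking points on a gentle curve (made-up data).
    forward = np.linspace(5.0, 40.0, 15)
    left_poly = fit_lane(forward, 1.95 + 0.001 * forward**2)
    right_poly = fit_lane(forward, -1.55 + 0.001 * forward**2)
    print(f"lane center is {lane_center_offset(left_poly, right_poly):+.2f} m to the left")
```

With this data, the vehicle sits 0.2 m to the right of the lane center, so a lane-keeping controller would steer slightly left.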

② Obstacle

The objects enclosed in rectangles in the picture. Only cars, pedestrians, and bicycles appear in the picture, but many more types of obstacles can be detected, such as motorcycles, trucks, and even animals. With obstacle information, the autonomous vehicle can follow the vehicle ahead within its lane.
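To follow a vehicle within the lane, the planner first has to decide which detected obstacle to follow. The minimal Python sketch below assumes a straight lane centered on the ego vehicle and a hypothetical 3.5 m lane width. It picks the nearest obstacle ahead whose lateral offset falls inside the ego lane. A real system would use the detected lane geometry and tracked obstacles instead.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Obstacle:
    kind: str          # detected class, e.g. "car", "pedestrian", "bicycle"
    forward_m: float   # longitudinal distance ahead of the ego vehicle
    lateral_m: float   # lateral offset from the ego lane center, positive left


def select_lead(obstacles: Sequence[Obstacle],
                lane_half_width_m: float = 1.75) -> Optional[Obstacle]:
    """Return the nearest obstacle ahead that lies inside the ego lane, or None."""
    in_lane = [o for o in obstacles
               if o.forward_m > 0.0 and abs(o.lateral_m) <= lane_half_width_m]
    return min(in_lane, key=lambda o: o.forward_m, default=None)


if __name__ == "__main__":
    detections = [
        Obstacle("car", 45.0, 0.3),
        Obstacle("truck", 30.0, 3.6),       # in the adjacent lane
        Obstacle("car", 30.0, -0.5),
        Obstacle("pedestrian", -5.0, 0.0),  # behind the vehicle
    ]
    print(select_lead(detections))
```

A following controller would then regulate the gap to the selected lead vehicle and the rate at which that gap changes.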

③ Traffic Sign and Road Sign

The objects enclosed by green or red rectangles in the figure. This information is mainly used for matching against high-precision maps to assist positioning. These perception results can also be used to update the map.

④ FreeSpace

The area covered in transparent green in the figure is where the autonomous vehicle can drive normally. With FreeSpace, the vehicle is no longer limited to driving in its own lane. It can perform more maneuvers such as overtaking across lanes, so it drives more like an experienced driver.
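Free space can be represented in several ways. One common choice, assumed here and not taken from Mobileye, is a top-down grid in which each cell is marked drivable or not. Before a cross-lane maneuver such as overtaking, the planner can check that a corridor in the neighboring lane is free. The grid resolution, extent, and lane position in this Python sketch are hypothetical.

```python
import numpy as np

CELL_M = 0.5             # hypothetical grid resolution, meters per cell
GRID_HALF_WIDTH_M = 6.0  # grid covers -6 m (right) to +6 m (left) laterally
GRID_LENGTH_M = 60.0     # and 0 to 60 m ahead


def cell_range(lo_m: float, hi_m: float, offset_m: float = 0.0) -> slice:
    """Slice of cells overlapping [lo_m, hi_m), including partially covered cells."""
    start = int(np.floor((lo_m + offset_m) / CELL_M))
    stop = int(np.ceil((hi_m + offset_m) / CELL_M))
    return slice(start, stop)


def corridor_is_free(free: np.ndarray, lateral_range_m, forward_range_m) -> bool:
    """True if every cell in the rectangle is marked drivable in `free`.

    `free` has shape (rows, cols): rows index distance ahead, cols index lateral
    position from the right edge of the grid. Ranges are (min, max) in meters.
    """
    rows = cell_range(*forward_range_m)
    cols = cell_range(*lateral_range_m, offset_m=GRID_HALF_WIDTH_M)
    return bool(free[rows, cols].all())


if __name__ == "__main__":
    free = np.ones((int(GRID_LENGTH_M / CELL_M), int(2 * GRID_HALF_WIDTH_M / CELL_M)), dtype=bool)
    free[40, 19] = False  # cell containing a point 20 m ahead, 3.5 m to the left
    left_lane = (1.75, 5.25)
    print(corridor_is_free(free, left_lane, (0.0, 30.0)))  # corridor contains the blocked cell
    print(corridor_is_free(free, left_lane, (0.0, 15.0)))  # corridor ends before it
```

Rounding the corridor edges outward keeps the check conservative: a cell that only partly overlaps the corridor must also be free.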

⑤ Traffic Light

The objects enclosed by green boxes in the figure. Perceiving the state of traffic lights is essential for autonomous vehicles driving in urban areas. This is also why Baidu Apollo 2.0 had to open up this capability to support autonomous driving on simple urban roads.

Positioning Capability

I believe everyone has heard of visual SLAM. Visual SLAM matches real-time perception results against a pre-built map to determine the current position of the autonomous vehicle. The biggest problem it must solve is map size: even a moderately large area needs a lot of disk space. Building maps that are lightweight enough is therefore key to commercializing SLAM technology.
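The matching step can be illustrated in its simplest form. Assume landmarks such as traffic signs have already been detected and associated with their map entries; this association is the hard part of visual SLAM and is omitted here. The vehicle's 2D pose is then the rigid transform that best aligns the observed landmark positions with the mapped ones. The Python sketch below solves for it with the SVD-based Kabsch method. The landmark coordinates and the true pose are made up for illustration.

```python
import numpy as np


def estimate_pose_2d(observed: np.ndarray, mapped: np.ndarray):
    """Least-squares rigid alignment (Kabsch) of matched 2D landmarks.

    observed: N x 2 landmark positions in the vehicle frame.
    mapped:   N x 2 positions of the same landmarks in the map frame.
    Returns (x, y, yaw_rad), the vehicle pose in the map frame.
    """
    mu_o, mu_m = observed.mean(axis=0), mapped.mean(axis=0)
    cov = (observed - mu_o).T @ (mapped - mu_m)
    u, _, vt = np.linalg.svd(cov)
    d = np.sign(np.linalg.det(vt.T @ u.T))  # guard against a reflection
    rot = vt.T @ np.diag([1.0, d]) @ u.T
    t = mu_m - rot @ mu_o                   # where the vehicle origin lands
    yaw = np.arctan2(rot[1, 0], rot[0, 0])
    return float(t[0]), float(t[1]), float(yaw)


def rotation(yaw: float) -> np.ndarray:
    """2D rotation matrix for a heading angle in radians."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s], [s, c]])


if __name__ == "__main__":
    landmarks_map = np.array([[10.0, 5.0], [20.0, -3.0], [35.0, 8.0], [50.0, 0.0]])
    true_x, true_y, true_yaw = 3.0, 1.0, 0.1
    # Express the landmarks in the vehicle frame: p_vehicle = R^T (p_map - t).
    observed = (landmarks_map - [true_x, true_y]) @ rotation(true_yaw)
    print(estimate_pose_2d(observed, landmarks_map))
```

Production systems add outlier rejection and fusion with GPS and IMU on top of this step.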

Mobileye has implemented Road Experience Management (REM), which provides global positioning on complex roads. The video below shows a demonstration.

REM Demonstration (Tencent Video)

Summary

Currently, Baidu Apollo 2.0 opens only the camera's traffic-light detection capability, and obstacle perception still relies heavily on LiDAR and millimeter-wave radar. The other functions introduced in this article will likely be opened gradually in the future.

Among all vehicle-mounted sensors, the camera has the strongest perception capability. This is also why Tesla insists on a pure vision perception solution and does not use LiDAR (although the high cost of LiDAR is also a factor).

This article should give everyone a basic understanding of the visual perception technology used in autonomous vehicles.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.