This article is reproduced from Jianyue Cheping (WeChat ID: jianyuecheping), written by Su Qingtao.

Just like its ban on Tesla five years ago, Mobileye still fiercely guards against its own customers today. It does not want them to master perception algorithms, and if it sees any sign that a customer is developing its own algorithm, even if that is just a misunderstanding, it will threaten to cut off supply.

Although competition in the autonomous driving chip market is becoming increasingly fierce, particularly with the emergence of Huawei and Qualcomm, automakers now have more choices and Mobileye can no longer return to its previous monopoly. However, this company’s strong stance has not changed.

Under these circumstances, no ambitious automaker is likely to remain content with Mobileye’s “black box”. The problem is that “algorithmic autonomy” sounds ideal, but the reality is harsh.

Even Tesla, which has the strongest autonomous driving capability among automakers, saw its AEB “functionally downgraded” for a period of time after it replaced Mobileye’s EyeQ3 with Nvidia’s GPU and adopted self-developed algorithms at the end of 2016.

Today, many Chinese automakers have equipped production cars with sensors and computing platforms that meet specific autonomous driving requirements, but algorithms still have to wait more than a year to be updated via OTA, indicating that algorithm challenges are even greater than computational challenges.

As Wang Gang, head of Alibaba DAMO Academy’s autonomous driving lab, said in a recent talk: “The challenge of computational power is ‘good or bad’, while the challenge of algorithms is ‘can or cannot’.”

But in the future, automakers who are neither willing to be “kidnapped” by Mobileye’s integrated hardware and software solution nor able to use Xavier due to immature algorithmic capabilities can breathe a sigh of relief, because there is a “chip company that understands algorithms the best” to help them build their own algorithmic capabilities.

In the past two years, this chip company has successively received strategic investments from two of the world’s top three semiconductor companies, and Bosch has also become one of its first partners. With the rise of this company, Chinese automakers are expected to be freed from the clutches of international autonomous driving chip makers.

A new Moore’s Law is emerging

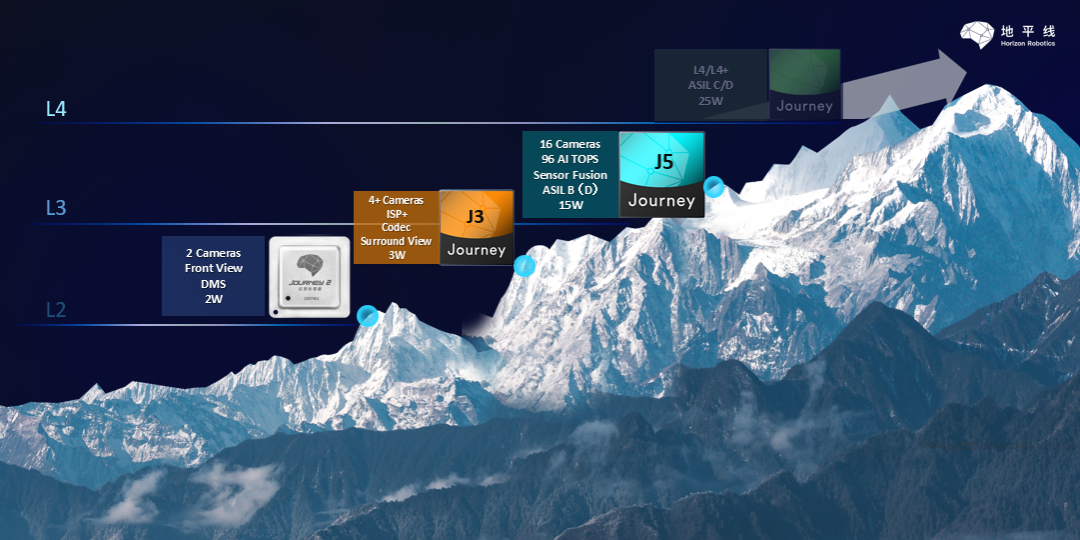

Computational power and power consumption are the two parameters that autonomous driving chip users care about most, and since its founding in 2015, this chip company has made “strong computational power and low power consumption” the most critical indicators for its AI chips. Judging by the products it has released and mass-produced, it has indeed achieved this.

The company released its second-generation AI chip in the second half of last year. The computational power per unit of power consumption of this chip is not only far higher than that of EyeQ3 and EyeQ4, currently the most popular ADAS chips, but also exceeds that of Nvidia’s Xavier. The chip has passed automotive-grade certification and is already factory-installed in mass-produced cars.

The company’s third-generation chip was successfully taped out in the first half of this year. In the second half of this year, the company will release its next-generation AI chip, which has 1.33 times the computing power of the Tesla FSD chip but only 2/3 of its power consumption. This makes its energy efficiency twice that of the Tesla FSD chip.
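The “twice the energy efficiency” figure follows directly from the two ratios quoted above. A quick check in Python, using only those two ratios (the variable names are ours, and no absolute TOPS or watt figures are assumed for either chip):

```python
from fractions import Fraction

# Ratios quoted in the article, both relative to the Tesla FSD chip.
compute_ratio = Fraction(133, 100)  # 1.33x the computing power
power_ratio = Fraction(2, 3)        # 2/3 of the power consumption

# Energy efficiency is computing power per unit of power consumed,
# so the relative efficiency is the quotient of the two ratios.
efficiency_ratio = compute_ratio / power_ratio

print(float(efficiency_ratio))  # 1.995, i.e. roughly 2x
```

The exact quotient is 399/200, which rounds to the “twice” stated in the text.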

It is very difficult to achieve both strong computing power and low power consumption. In the words of the founder of this company, this is like “making the horse run fast but also eat less grass.” To achieve a balance between the two, the company did not do general-purpose chips like GPUs and FPGAs, but instead made ASIC chips specifically for autonomous driving scenarios.

Simply put, compared to general-purpose chips, the architecture of ASIC chips eliminates modules that are not related to autonomous driving algorithms – this is what Jim Keller did when designing the FSD chip for Tesla.

For example, image processing involves a lot of redundant information that is not important, and this company’s algorithm design “ignores” image information that is irrelevant to autonomous driving. This reduces the computational burden on the processor and saves power as well.

Of course, only teams with very strong algorithmic capabilities can achieve this.

In combination with efficient algorithms, the company’s second-generation AI chip can provide more than 4 TOPS of equivalent computing power at a typical power consumption of 2W. Test results show that the computing power utilization rate achieved by this chip when running on typical algorithm models exceeds 90%, far exceeding other chips (usually, the computing power utilization rate can only reach about 50%).
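To see why the utilization rate matters as much as the headline number, here is a minimal sketch. It assumes, purely for illustration, that two chips share the same nominal 4 TOPS peak and differ only in utilization; the function names and that shared peak are our assumptions, not figures from any vendor datasheet.

```python
def effective_tops(peak_tops: float, utilization: float) -> float:
    """Throughput actually delivered to the algorithm model."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak_tops * utilization


def tops_per_watt(tops: float, watts: float) -> float:
    """Energy efficiency: computing power per unit of power consumption."""
    return tops / watts


# Hypothetical comparison: same nominal peak, different utilization.
high = effective_tops(4.0, 0.90)  # the ">90%" case described in the text
low = effective_tops(4.0, 0.50)   # the "about 50%" case for other chips

print(high, low, high / low)
print(tops_per_watt(4.0, 2.0))  # 4 TOPS at a typical 2 W
```

Under this assumption, the chip at 90% utilization delivers 1.8 times the usable throughput of the one at 50%, even though the nominal peak is identical.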

Currently, the company’s chip architecture is also in rapid iteration.

As chip processes approach their physical limits, the view that “Moore’s Law is dead” resurfaces every once in a while. In response, the founder of the company has repeatedly emphasized that although Moore’s Law is indeed slowing down, a new Moore’s Law is emerging: redefine the software architecture around changes in application scenarios, then redesign the hardware architecture to improve computing power while reducing the power consumption per unit of computing power.

By now, many readers may have guessed which company “this company” is, since it has not yet been named. Your guess is correct: this “chip company that understands algorithms the best” is Horizon Robotics.

Currently, the intelligent driving solution equipped with Horizon Journey 2 has won multiple factory-installation orders. The autonomous driving computing platform Matrix 2 (which can process data from 12 cameras), also equipped with Horizon Journey 2, has been deployed in thousands of Robotaxi vehicles.

Previously, the AI chip on the Matrix 1 platform was an FPGA. In the upgrade to Matrix 2, only the FPGA was replaced with Horizon Journey 2 while the algorithm remained the same, yet power consumption dropped from 110W to within 30W.

The Horizon Journey series from Horizon Robotics not only has higher energy efficiency than FPGA, but also has significant advantages in energy efficiency compared to other known autonomous driving chips.

Based on the same 28nm process, the energy efficiency of Horizon Journey 2 (2 TOPS/W) is 2.4 times that of EyeQ4. Also, compared with the more advanced 16 nm process Nvidia Xavier (1 TOPS/W), there is still a significant energy efficiency advantage for Horizon Journey 2. In addition, it is understood that the energy efficiency of Horizon Journey 3 is also better than Nvidia Orin (3 TOPS/W), which is based on the 7nm process.

In addition to process, architecture is another important factor affecting chip power consumption. The more advanced the architecture of the chip, the higher its energy efficiency at the same process. We found that the energy efficiency of Horizon Journey series products is not only better than competing products under the same process, but may also be better than competing products under more advanced processes.

This means that the chip architecture of Horizon Robotics does have significant advantages in reducing the power consumption per unit of computing power. Of course, Horizon Robotics can keep optimizing the chip architecture thanks to its deep accumulation in the algorithm field and its understanding of the application scenarios.

The Horizon Robotics team has set a unique algorithm model for autonomous driving scenarios and proactively integrated its computing characteristics into the chip architecture design, so that AI chips can always maintain a high utilization rate with the development of algorithms and truly benefit from algorithm innovation.

The Chip Company that Knows Autonomous Driving Algorithms Best

Perception algorithms are the starting point and cornerstone of autonomous driving. As autonomous driving develops toward higher levels, the accuracy required of perception algorithms has also increased significantly. How to keep improving that accuracy and push the limits of perception has become a subject of continuous research and exploration in academia and industry.

Horizon Robotics, although a chip company, has deep accumulation in the field of perception algorithms. In fact, since its establishment, Horizon Robotics has insisted on the route of “chip + algorithm” integration. Its core team members also have strong algorithm genes. Founder Yu Kai led his team to win first place in the inaugural ImageNet image recognition evaluation more than a decade ago, making him the first Chinese scholar to lead a team to win the championship in an international artificial intelligence algorithm competition.

Yu Kai is also the founder of a major internet company’s self-driving business. During his work in deep learning training, he found that only a dedicated chip designed to work in high coordination with the algorithm could maximize the performance of the algorithm. Therefore, he founded the current company.

The company’s VP of algorithms, Huang Chang, has long been engaged in research on computer vision, machine learning, pattern recognition, and information retrieval. As a well-known expert in the academic and industrial fields, his papers have been cited more than 3,000 times, and he holds multiple international patents.

Huang Chang’s face detection technology created the world’s first successful example of computer vision technology being widely used, accounting for 80% of the digital camera market and being adopted by many image management software such as Apple’s iPhoto.

Since its establishment, the company has won many world championships in international authoritative algorithm evaluations such as KITTI, Pascal VOC, FDDB, LFW, and TRECVID.

Recently, in a self-driving challenge that focused on evaluating perception algorithms, Horizon Robotics outperformed top self-driving teams, including Google Brain, Alibaba DAMO Academy, TuSimple, Berlin Polytechnic University, and the University of California, Berkeley, taking 4 first places and 1 second place across 5 contests.

The story starts a year ago: on June 17, 2019, at CVPR 2019, Waymo publicly released a new self-driving dataset that includes 3,000 driving records, 600,000 frames, approximately 25 million 3D bounding boxes, 22 million 2D bounding boxes, and diverse autonomous driving scenarios.

According to plan, this open dataset will be used in external academic research and experimental testing. Waymo’s goal is to seek a new technological model that exceeds its own technical standards through collective brainstorming.

In March 2020, Waymo announced that it would expand the open dataset by another 800 segments and invite the world’s top self-driving R&D teams from academia and industry to participate in its open dataset challenge for the first time. Some of Waymo’s own employees also participated in the competition as individuals.

For this challenge, Waymo opened up more than 10 million miles of autonomous driving data collected in 25 cities, covering various urban and suburban environments under different conditions such as day and night, dawn and dusk, and sunny and rainy weather. Given that Waymo’s test vehicles are equipped with five lidars and five front and side cameras, this data exceeded the complexity of public datasets such as KITTI, nuScenes, and Argo.

According to Drago Anguelov, chief scientist and project leader at Waymo, the dataset used in this challenge is one of the largest, most diverse, and richest autonomous driving datasets ever in history.

Thanks to long-term accumulation of technical know-how in the algorithm field and experience in autonomous driving research and development, Horizon Robotics team made use of its well-established algorithm development infrastructure, independently developed new and effective perception algorithms, optimized algorithms for specific segmented scenarios within the dataset, and solved multiple technical challenges in a short period of time during this challenge.

The challenge started on March 19th and ended on May 31st, 2020. According to the results announced on June 15th at the CVPR 2020 Autonomous Driving Workshop, Horizon Robotics won the top spot in 2D tracking, 3D detection, 3D tracking, and domain adaptation (four challenges), and second place in 2D detection.

Horizon Robotics bills itself as “the chip company that understands algorithms the best”, and this challenge result is the best proof. In addition, Yu Kai, the founder of Horizon Robotics, has repeatedly emphasized that in AI computing, “the algorithm defines the chip”: what functions a chip can achieve, and how much of its efficiency can be realized, are largely determined by the algorithm. Horizon’s algorithmic capabilities therefore also feed into its chip products.

Behind “the algorithm defines the chip” lies “the scene determines the algorithm”. Competition in algorithm capability among developers of autonomous driving technology ultimately boils down to competition in scene perception capability. Without a deep understanding of the scene, it is difficult to write algorithms that work really well. Moreover, even if a company’s autonomous driving algorithm has proven “impressive” in one scene, it may not be as effective in another.

For any major player in the self-driving industry, setbacks are inevitably magnified when it introduces its solution to different cities or countries, and the challenges only grow when a foreign-developed solution encounters unfamiliar and more heterogeneous conditions on the other side of the planet. Autonomous driving systems that perform well in cities such as Phoenix may fail completely in San Francisco.

At an ADAS forum in December 2019, an ADAS project manager from an automaker pointed out that many ADAS systems installed on European cars perform exceptionally well during tests in Europe, but fail miserably when exported to China, resulting in poor user experience, which then results in the systems being under-utilized.

Both Mobileye and Tesla have learned this lesson the hard way. Their autonomous driving algorithms were both trained on road conditions in Europe and North America, and they frequently fail in China. Dr. Guo Jishun from Guangzhou Automobile Research Institute revealed in Automotive News:

“Fishbone lines frequently seen on hills in South China are a traffic sign used in China to remind drivers to keep their attention on complex roads. However, when it comes to intelligent driving development, the lane recognition algorithm generated by EyeQ3 becomes highly unstable when encountering fishbone lines, resulting in a series of unstable functions.”

“Furthermore, many of China’s highway bridge load limit signs, such as the 30t signs, follow no international standard, so the Mobileye algorithm easily misses the small ‘t’ and confuses the reading with speed limit data. For example, when a car driving at 120 km/h on a highway crosses a bridge with a 30t load limit sign, the system can easily misread the ‘30t’ as a speed limit of 30 km/h.”
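The failure mode described in this quote can be sketched at the text level. The example below is only an illustration under our own assumptions: the `Sign` type and both reader functions are hypothetical, they operate on already-recognized sign text rather than images, and they say nothing about how Mobileye’s actual pipeline is built.

```python
import re
from typing import NamedTuple


class Sign(NamedTuple):
    kind: str   # "speed_limit" or "weight_limit"
    value: int
    unit: str   # "km/h" or "t"


def naive_read(text: str) -> Sign:
    """Keeps only the digits, so a missed 't' becomes a speed limit."""
    digits = re.search(r"\d+", text)
    if digits is None:
        raise ValueError(f"no number found in {text!r}")
    return Sign("speed_limit", int(digits.group()), "km/h")


def unit_aware_read(text: str) -> Sign:
    """Treats a trailing 't' as tonnes and classifies the sign accordingly."""
    match = re.fullmatch(r"\s*(\d+)\s*(t)?\s*", text, flags=re.IGNORECASE)
    if match is None:
        raise ValueError(f"unrecognized sign text {text!r}")
    value = int(match.group(1))
    if match.group(2):
        return Sign("weight_limit", value, "t")
    return Sign("speed_limit", value, "km/h")


print(naive_read("30t"))       # read as a 30 km/h speed limit
print(unit_aware_read("30t"))  # read as a 30 t load limit
```

The point of the sketch is that the unit suffix carries the class of the sign, so dropping it changes the meaning of the number entirely.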

Tesla’s autonomous driving system has long been criticized for failing to identify some obstacles on Chinese roads, such as the “ditch head” and “stone pier.” In addition, Tesla’s AEB pedestrian detection ability has been evaluated negatively:

In the “AEB pedestrian collision test,” the NIO ES6 and Li ONE can automatically decelerate and avoid pedestrians when driving at a speed of 60 km/h. In contrast, the Model 3 showed no intention of slowing down when driving at a speed of 40 km/h, and when it encountered the “ghost head” scenario (a pedestrian suddenly emerging from behind an obstruction) at a speed of 30 km/h, it also hit the obstacle.

The reason for this difference is that the EyeQ4 sensing system on the NIO ES6 and Li ONE has been optimized for road conditions in China, while Tesla’s algorithms lack sufficient “psychological preparation” for the common phenomenon of jaywalking pedestrians on Chinese roads.

To solve this problem, Tesla announced early this month that it will form an Autopilot team in China. The most important task of this team is to optimize algorithms for China’s road environment.

According to feedback from many self-driving practitioners, the challenges facing algorithms in China’s road driving scenarios include, but are not limited to: traffic signal lights that are inconsistent from city to city; traffic cones and stone pillars that are used more commonly than in other countries; road obstacles such as logistics vehicles, express delivery vehicles, elderly mobility scooters, and electric bikes that are three times as varied as in the United States; sudden braking and aggressive overtaking that are more common than in the West, owing to resource constraints and to impatient, rule-breaking drivers; more unconventional vehicles with “Chinese characteristics”, such as construction trucks and modified cars, owing to frequent construction; and staged traffic accidents such as insurance scams.

No matter which company’s self-driving vehicles are running on China’s roads, solving these problems in the perception stage makes it easier to avoid errors in the decision-planning stage.

As Dr. Guo Jishun said in a talk for “Automotive Intelligence”: “If the perception module determines that the vehicle ahead is an express-delivery electric vehicle, the decision module needs to lower the speed of the following car. At the same time, considering that the express vehicle may stop at any time, the decision module also needs to output a command to increase the following distance.”
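The rule in this quote, where a perception class changes what the decision module outputs, can be sketched as follows. This is a toy illustration only: the class label `"courier_ev"`, the `FollowPlan` type, and the 0.8 speed factor and 1.0 s extra gap are all hypothetical values chosen for the example, not parameters from any production system.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FollowPlan:
    target_speed_kmh: float
    following_gap_s: float  # time gap to the vehicle ahead, in seconds


def adjust_for_lead_vehicle(
    plan: FollowPlan,
    lead_class: str,
    speed_factor: float = 0.8,  # hypothetical slowdown factor
    extra_gap_s: float = 1.0,   # hypothetical extra time gap
) -> FollowPlan:
    """Slow down and widen the gap behind an express-delivery vehicle."""
    if lead_class == "courier_ev":
        return replace(
            plan,
            target_speed_kmh=plan.target_speed_kmh * speed_factor,
            following_gap_s=plan.following_gap_s + extra_gap_s,
        )
    # Any other class leaves the plan unchanged in this sketch.
    return plan


base = FollowPlan(target_speed_kmh=50.0, following_gap_s=2.0)
print(adjust_for_lead_vehicle(base, "courier_ev"))
print(adjust_for_lead_vehicle(base, "car"))
```

If perception misclassifies the vehicle ahead, neither adjustment is triggered, which is why errors at the perception stage propagate into decision planning.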

Under the law of “scene determines the algorithm”, a team that has a deeper understanding of the scene than others, even if it starts late or its algorithm ability is not the best at the beginning, will establish a competitive barrier based on its understanding of the application scenario over time.

Horizon has specifically optimized its Journey 2-based ADAS solution for China’s roads and scenes, covering cases such as special lane lines, traffic light countdown detection, and vehicles cutting in abruptly.

As a result, Horizon’s ADAS solution performs significantly better than Mobileye EyeQ3 and EyeQ4 in lane line extension length and in detecting food delivery vehicles and pedestrians (including bicyclists), among other items.

There are also some extreme conditions that Mobileye rarely encounters during algorithm training in Europe and the United States: pedestrians blocked by vehicles showing only half of their body on densely parked city roadsides, raincoats unique to Chinese bicyclists, and frequent lane changes by drivers on highways, with only a small part of the vehicle exposed beside a car… For these “obstacles”, Horizon’s solution can accurately detect them.

Openness vs. Closedness

In addition to algorithms that are better suited to China’s road scenes, another reason why car companies consider working with Horizon is its focus on “openness”. Or, in other words, they can’t stand Mobileye’s closedness.

At a previous ADAS forum, autonomous driving engineers from several car manufacturers complained that current sensor solutions come with pre-built ECUs and algorithms. While this is convenient to use, it creates a “black box” situation in which the car companies have no idea how the algorithm works or what its logic is, and “they won’t give us permission”. The car companies can only perform subsequent calculations on the output of these algorithms without being able to work out their internal logic. This means that when the vehicle hits a perception failure in an extreme scenario, the car company cannot independently find and fix the problem. Nor can it drive the evolution of the ADAS algorithm.

Mobileye’s software-hardware integrated solution, which bundles chips and algorithms, has similar problems: however ambitious some users and car companies are, they cannot write their own algorithms. Users can commission Mobileye to write custom algorithms for special driving scenarios, but this requires a huge development fee. While EyeQ4’s algorithm is powerful enough to support lidar, it is not open-source, and a solution with lidar can only be designed by Mobileye (for a fee, of course), so very few of EyeQ4’s customers use lidar.

With the advantage of huge amounts of data, Mobileye’s algorithms are of course also progressing rapidly. However, the optimized algorithms usually appear only on the next generation of chips. Most chips already in production have been installed in customer vehicles, and Mobileye will not iterate the algorithms for them.

If individual customers request it, Mobileye will do algorithm iterations through OTA for chips on relevant vehicle models, but extra charges are required.

The fundamental reason why Musk decided to abandon Mobileye’s solution was not because of the fatal accident, but because he believed that Mobileye’s closed system could not meet Tesla’s need for rapid iteration of autonomous driving ability.

Chinese car companies who want to control the development progress of autonomous driving technology are also undoubtedly plagued by the same problem.

Judging from the several rounds of sparring between Tesla and Mobileye in 2015 over Tesla’s development of its own autonomous driving algorithm, Mobileye will spare no effort to suppress car companies’ attempts to develop their own algorithms, in order to force them into “path dependence” on its software-hardware integrated solution.

Mobileye’s suppression of car companies’ ability to develop algorithms is first and foremost reflected in its control of data.

In legal terms, the original data generated by Mobileye’s camera belongs to the automaker. In practice, however, because of its strong desire for control, Mobileye restricts automakers from accessing this data through technical measures: the data is stored in Mobileye’s proprietary format and can only be accessed with its own toolchain, for which automakers cannot obtain support.

A traditional Chinese automaker collaborating with Mobileye needed to install an intelligent taillight and requested that Mobileye open up the perception data, but was rejected. Perhaps Mobileye’s mentality was like this: who knows what you’re going to do with this data?

What makes automakers even more speechless is that Mobileye does not even allow them to “privately” install “additional” cameras on the car to collect data (running in shadow mode).

Even if automakers only install a camera as a driving recorder, collect some road data for high-precision maps, or record some videos for accident playback or decision planning algorithms, Mobileye will become suspicious after seeing this.

Although they will not immediately prohibit automakers from doing so, they will continue to question, “What are you trying to do? Are you trying to develop your own algorithms to replace me?” Automakers need to explain repeatedly.

If Mobileye determines that the automaker has an idea of developing its own algorithms, even if it is just a misunderstanding, they will force the automaker to recall the vehicle and remove the “additional” camera. If automakers do not cooperate, Mobileye’s technical support for the mass-produced models of the automaker will be suspended.

In addition, Mobileye’s control over automakers extends to the product design stage.

Typically, Mobileye’s direct partners are Tier 1 companies designated by automakers. Although Mobileye is not directly involved in the selection of cameras, radars, ECUs, and circuit boards, Tier 1 companies need to submit them to Mobileye for review after selection. The review cycle usually lasts for 3-6 months, which makes the automakers particularly frustrated.

What’s even more outrageous is that for the same automaker, if they want to continue using Mobileye’s products on a new model, Mobileye will re-review it and charge hundreds of thousands to millions of dollars.

Unlike Mobileye’s closed mode, companies such as NVIDIA, Huawei, and Horizon in the autonomous driving chip business are relatively open in their business models, allowing users to write their own algorithms. Tesla and XPeng, the representatives of “algorithm autonomy”, both chose NVIDIA.

But as mentioned at the beginning of this article, Tesla ran into a downgraded AEB function when it switched from Mobileye’s EyeQ3 to NVIDIA’s Drive PX 2 at the end of 2016: AEB would not function once the speed exceeded 28 mph, whereas in the Autopilot 1.0 era it functioned as long as the speed did not exceed 85 mph. The reason for the downgrade was that Tesla’s algorithm was not good enough at the time. Of course, this problem was resolved half a year later.

This means that for car companies accustomed to Mobileye’s integrated software and hardware solution, it is actually very risky to switch hastily to NVIDIA’s solution while their algorithm capabilities are still immature and their experience is insufficient. Verifying a self-developed algorithm is complex, and the cycle is long. Furthermore, if the algorithm does not match NVIDIA’s chip well, unforeseen problems may arise.

This concern does not exist with the Horizon solution, because while providing the option of integrated software and hardware, Horizon also helps customers master algorithmic capability. Horizon opens up to customers on at least two levels. First, it opens up the original data of the perception link, fully empowering Tier 1 suppliers and car companies to build specific functions. Second, it opens up the lower-level toolchain and provides a rich sample library of software models and algorithm models on top of it, so that customers can iterate on their own scenario data and even develop their own unique algorithm models.

In 2019, the solution built on Journey 2 was deployed on 1,000 buses for a Korean customer. The recognition function for traffic signs written in Korean was developed by the client with the toolchain provided by Horizon.

In China, Horizon and Changan have established the Changan-Horizon Artificial Intelligence Joint Laboratory. The better-known intelligent cockpit project of the UNI-T (equipped with Journey 2) is the laboratory’s first major achievement. In this joint laboratory, Horizon provides technical guidance to help Changan’s algorithm engineers develop their own algorithms. Horizon plays a similar role in the ADAS projects it is working on with several automakers.

Yu Kai said: “We are not delivering algorithms, but serving as consultants to the automakers to help them develop their own algorithms. In fact, sometimes technological know-how is just a thin sheet of window paper waiting to be poked through. The car companies also have a lot of very smart people.”

In November last year, Horizon Robotics established a partnership with domestic ADAS solution provider Forstar Technologies. Unlike international Tier 1 suppliers that only offer a black box solution, domestic Tier 1 suppliers such as Forstar Technologies are open to collaborating with automakers, with algorithm development either led by the automaker or carried out jointly. Moreover, these domestic Tier 1 suppliers can also participate in the automaker’s vehicle design process, jointly defining the electronic and electrical architecture to ensure that it can indeed meet the requirements of autonomous driving.

Over the next year, many mass-produced vehicle models equipped with ADAS functions based on Horizon Journey 2 will be launched. These customers include both private and state-owned enterprises.

Recently, the plan by Mobileye to carry out Robotaxi operations has drawn the attention of many people. The author once asked Yu Kai: “What kind of inspiration does this decision by Mobileye offer to other chip suppliers?” To this, Yu Kai’s response was: “Horizon Robotics will not do Robotaxi. We will only empower the underlying technology in the era of robotics.”

Paying more attention to Chinese customers with a shorter service process

“Do you have the support of the chip manufacturer?” This is a very important question in any industry that uses chips. Unfortunately, Chinese automakers generally report that regardless of whether the ADAS supplier is Mobileye or another company, they find it difficult to obtain “original factory support.”

As a chief engineer at one automaker’s research institute said: “Many overseas manufacturers hold back the functions of their products from Chinese automakers.”

A more common situation is that these suppliers do not specifically say they do not support Chinese automakers, but in practice, their latest technologies are certainly prioritized to support European and American automakers, rather than Chinese automakers.

A founder of a domestic ADAS company said: “We used some European and Japanese chips before, but when it came to technical support from the manufacturer, we could only rank second, which meant our volume deployment fell behind that of European and Japanese companies.”

Another fact that leaves Chinese automakers helpless is that Mobileye does not have a technical support team for the factory-installation market in China. If automakers encounter problems with the product in use, Mobileye needs to send people from Israel to China to solve the problem, or Chinese companies need to send people to Israel. Either way, the time cost is very high.

In addition, automakers’ day-to-day service needs are constrained by overseas suppliers’ culture of not working overtime on weekends. An engineer at one automaker complained: “We received user complaints and were very anxious. We were willing to work overtime on weekends to solve the problem, but they (suppliers) would rest and go on vacation on weekends.”

As a local chip “original factory”, Horizon Robotics has a natural advantage: it prioritizes the service needs of Chinese automakers and local Tier 1 suppliers and serves them more efficiently.

As a startup company, Horizon has fewer internal levels and simpler, more efficient processes. “There is no problem of having to go through several layers to find the person in charge. When a few presidents encounter a problem, they simply find Yu Kai, and I directly contact the compiler development team for direct support,” Yu Kai said at a roundtable forum at the end of last year.

According to Yu Kai, European partners have already been working with them to collect data locally. Some partners have suggested that Horizon “should not only focus on the Chinese market, but also the global market”, but that is another story.

If you have a different understanding of this topic or have other experiences to share about unmanned driving, you are welcome to add the author’s WeChat charitableman for communication.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.