A few days ago, Jianyue Car Review unveiled Ideal Auto’s development roadmap for autonomous driving in an interview with Li Xiang. Many details were also mentioned in Li Xiang’s communication with Garage 42.

Previously, we wrote about Li Xiang’s logic in building the Ideal ONE as a mass-market hit, but we did not mention another important goal of Ideal Auto: to earn a place in the autonomous driving race by 2025. Li Xiang had already raised this idea in an earlier Autohome live broadcast.

Based on our conversations with autonomous driving companies and car manufacturers, and on how assisted-driving vehicles are currently being promoted on the market, the development of autonomous driving has already split into two paths.

Two paths of autonomous driving

Path one

According to Jianyue Car Review’s interview, Ideal Auto’s roadmap starts from today’s ADAS, moves to NoA in 2021 to 2022, adds high-precision maps in 2023 to 2024, and ultimately reaches FSD.

First, let’s briefly explain the three technical terms that have just appeared:

ADAS: Advanced Driver Assistance System, which provides adaptive cruise control and lane centering control (LCC). On road sections with clear lane markings, the vehicle can actively adjust its speed and keep itself in the center of the lane.

NoA: Navigate on Autopilot. Building on L2 assisted driving, the vehicle can automatically change lanes and adjust its speed along the route set in the in-car navigation system.

FSD: Full Self-Driving, that is, fully autonomous driving.

Many readers are probably already familiar with these three terms, because Tesla announced these functions as early as 4 years ago.

In 2014, Tesla launched its first-generation Autopilot hardware, which supported basic ADAS functions and lane changes triggered by the turn signal.

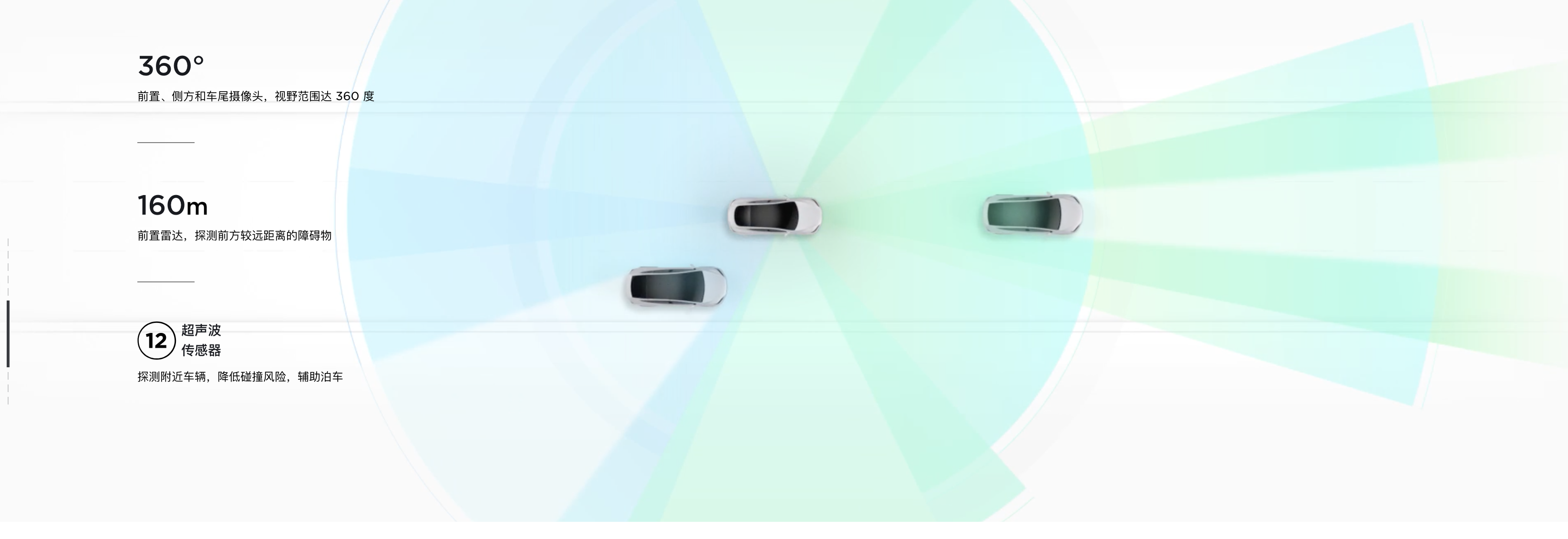

In 2016, the second-generation hardware was released, with the number of cameras increased from 1 to 8 to achieve 360-degree visual perception. The chip’s computing power was also greatly improved, NoA (navigation-assisted driving) was first released, and the concept of FSD was proposed.

In 2019, the third-generation hardware was released. The sensing hardware architecture was unchanged, but the autonomous driving chip was upgraded and computing power increased. On the functional side, recognition of traffic lights and stop signs with automatic braking was introduced, in preparation for NoA on urban roads.

Therefore, regarding the road to achieving autonomous driving, Ideal’s planned functions are in line with those of Tesla, or rather, Ideal has chosen the path that Tesla has already tried and tested.

The Two Paths of Autonomous Driving: Tesla vs Traditional Automakers

Three new-generation automakers selling cars in China are already on this route: Tesla, NIO, and XPeng.

On April 1st of this year, NIO announced that, by the end of the year, it would push its Navigate on Pilot feature to all users who had purchased the NIO Pilot option.

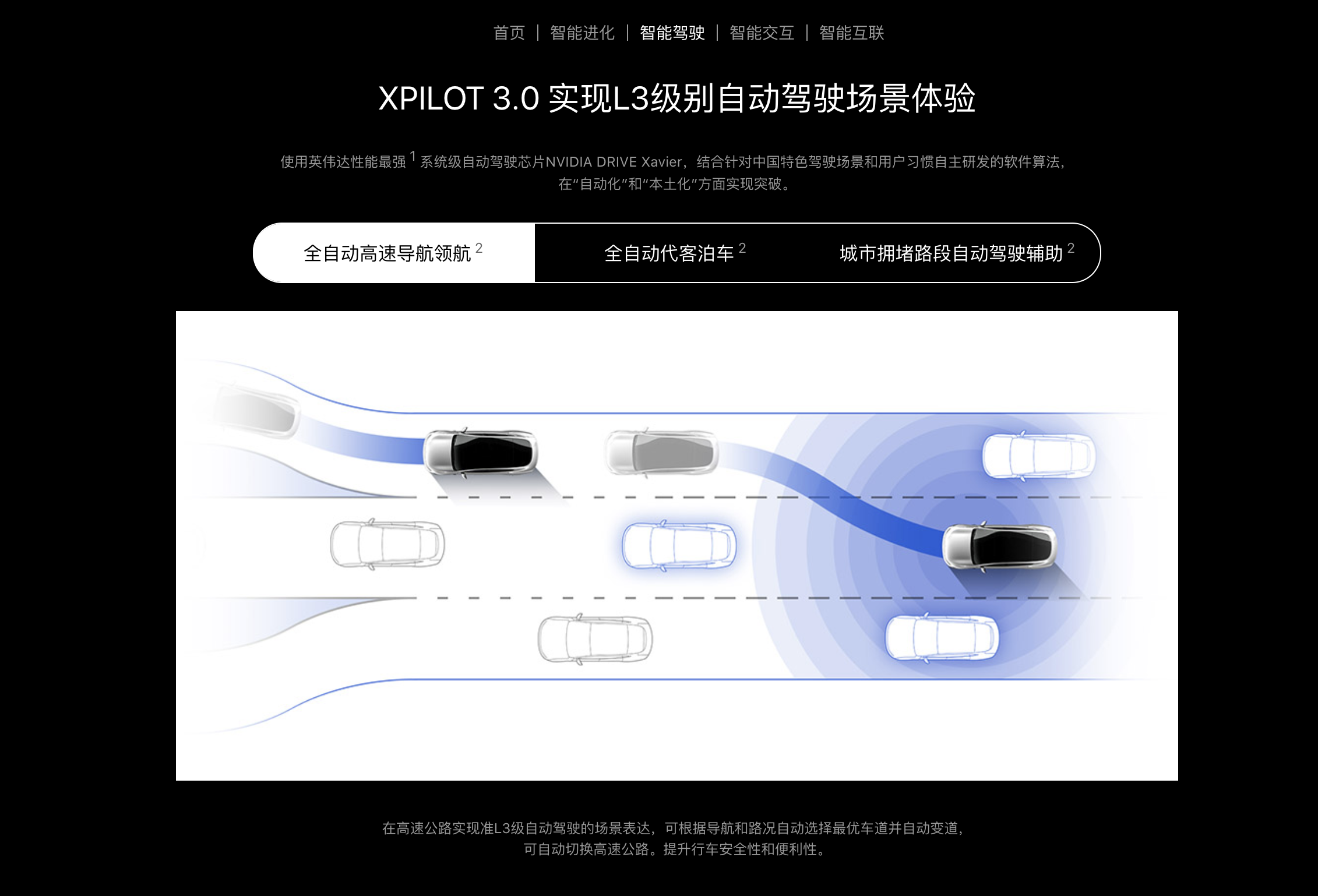

On April 27th, XPeng announced at its P7 launch event that the XPilot 3.0 software upgrade will enable NGP (Navigation Guided Pilot) autonomous driving on highways as well as autonomous driving in urban traffic jams.

From a high-level perspective, all three automakers are heading in the same direction in their pursuit of autonomous driving.

This brings us to the two main paths of autonomous driving. The first is led by Tesla: start from a self-developed ADAS (Advanced Driver Assistance System), then progress through highway NoA (Navigate on Autopilot) and city NoA to Full Self-Driving (FSD).

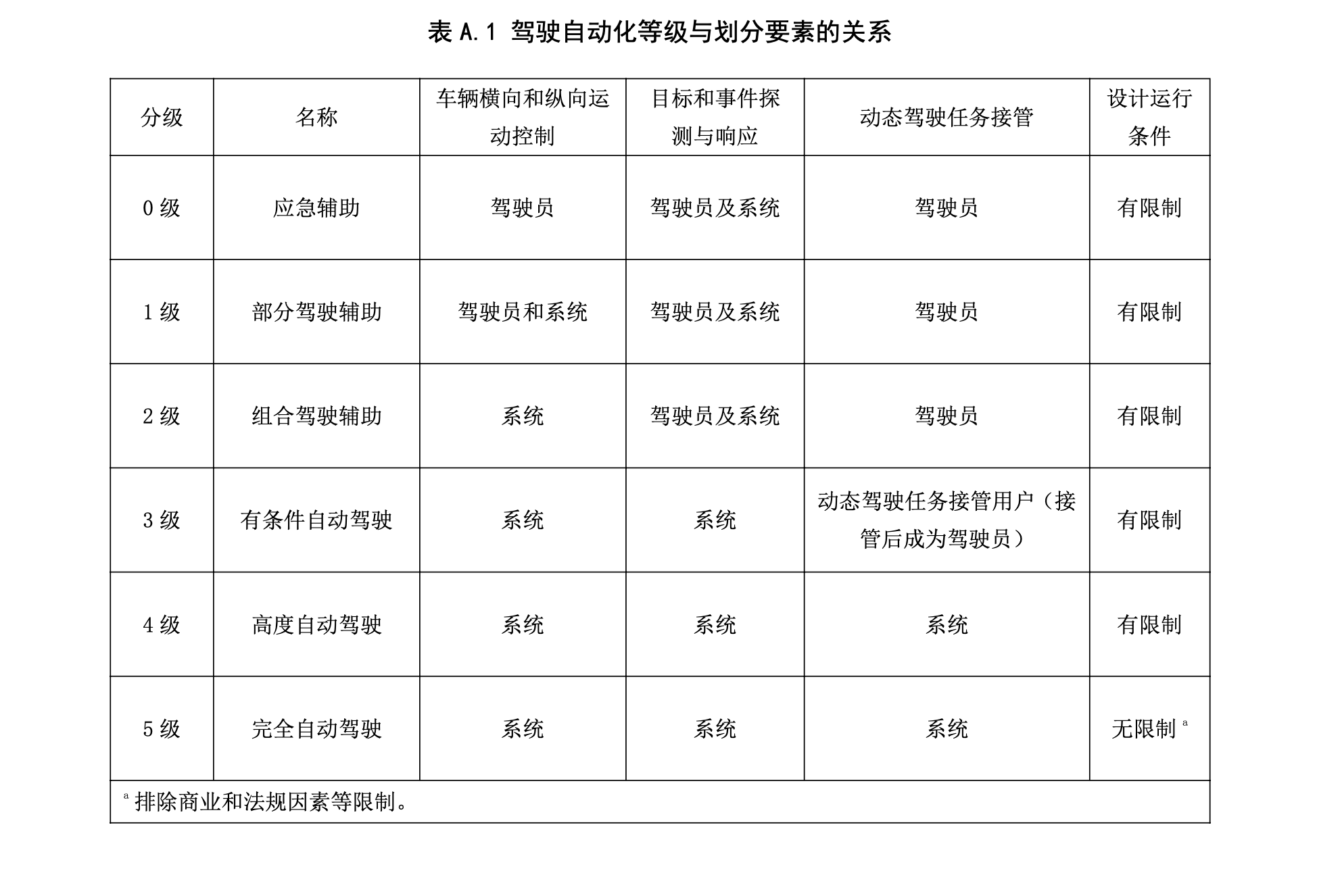

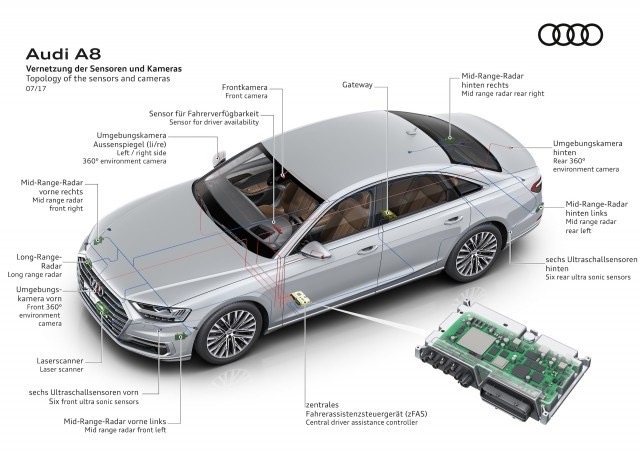

The second path is dominated by traditional automakers, who follow the SAE (Society of Automotive Engineers) and MIIT (Ministry of Industry and Information Technology) standards for autonomous driving. Audi was the first in this group to offer L3 (Level 3) self-driving, with its fifth-generation A8, which was equipped with LIDAR and other advanced sensors.

SAE defines L3 as “conditional automation,” meaning that the vehicle controls both lateral and longitudinal movement and can detect and respond to its surroundings. In other words, within the system’s operating conditions, drivers can take their hands off the wheel, their feet off the pedals, and their eyes off the road, and only need to take over when the system asks them to.

Now that we understand what L3 means, let’s take a closer look at what the A8’s L3 system can do. According to the official introduction, once the Audi AI function is enabled, the driver can take their hands off the steering wheel and perform some non-driving tasks. However, the function only works on highways, and only at speeds below 60 km/h.

Audi released its L3 autonomous driving in 2017. In 2020, L3 claims are becoming more common.

On March 10 of this year, Changan Automobile released a video called “L3 Level Production Autonomous Driving Experience” and mentioned “liberating users’ hands, feet, and eyes to achieve true L3 level autonomous driving.”

On June 3 of this year, GAC New Energy released a video titled “Aion LX L3 Autonomous Driving,” which also mentioned keywords such as “hands-free,” “feet-free,” and “autonomous driving.”

On this route, then, the emphasis is on the level of autonomous driving and the degree of driver intervention, while the applicable scenarios are played down. The new car makers, by contrast, emphasize the scenarios in which “automatic/assisted driving” can be used, rather than the level and the degree of driver intervention.

Based on my personal experience of nearly 20,000 kilometers of assisted driving, at this stage, developing autonomous driving functions based on applicable scenarios provides a better experience than developing autonomous driving based on SAE standards.

The American SAE standard and the MIIT’s “Automobile Driving Automation Grading” both aim to clearly delineate the relationship between “vehicle” and “person.” Their advantage is that they make clear who is responsible in each scenario, but the resulting products are rather lopsided.

Take the Audi A8: it can achieve L3 autonomous driving only on highways (no pedestrians, no traffic lights) at speeds below 60 km/h, so its applicable scenarios are very limited.

The GAC Aion LX can drive hands-free and feet-free from 0 to 120 km/h (strictly speaking, this is not L3 autonomous driving, because it is not eyes-free), but when it encounters a slow vehicle or needs to switch from one highway to another, the driver still has to intervene, so the level of “automation” is not high enough.

Of course, Tesla’s route also has its own problems. As functions are added, the driver needs to take over less and less often, but the driver is still the responsible party. So although the functions are useful, using them “correctly” still requires focused attention.

Going back to the purpose of developing autonomous driving, the core goal is to reduce the driver’s involvement and make driving easier. We therefore hope that more car companies will develop autonomous driving around “applicable scenarios” and not be trapped in the framework of L2 and L3.

After clarifying the route we have identified, let’s talk about the second topic.

Do new car makers have the opportunity to surpass Tesla?

It’s difficult, but there is hope.

The challenge lies in the lagging hardware and algorithms, while the hope lies in Chinese car companies having a deeper understanding of Chinese people’s needs.

Hardware and Algorithms

Let’s talk about the hardware first. Starting from Hardware 2.0, Tesla has fitted 8 cameras around the car as its main perception hardware. Similarly, the XPeng P7 uses a vision-based perception solution with 13 cameras around the car (8 working simultaneously). Ideal’s next-generation model, X01 (internal code name), will also carry 8 cameras as its main perception component.

Once this technology route is clear, the development direction is also clear.

Stacking perception hardware is relatively simple. The hard part is what sits behind it: rich and complex perception hardware needs a powerful assisted-driving chip and intelligent algorithms to make good use of the data.

According to Lang Xianpeng, the general manager of Ideal’s autonomous driving business, with 8 two-megapixel cameras capturing images at 30 frames per second, multiplied by the computation a typical network requires for each pixel, at least 200 TOPS of computing power is needed to truly achieve FSD.
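
The arithmetic behind that estimate can be sketched roughly as follows. The camera count, resolution, frame rate, and the 200 TOPS figure come from the interview; the per-pixel operation budget is not stated there and is simply derived here by division, so treat it as an illustration rather than Ideal’s actual calculation.

```python
# Back-of-the-envelope check of the 200 TOPS estimate.
# Inputs below are the figures quoted in the article.
CAMERAS = 8
PIXELS_PER_CAMERA = 2_000_000   # 2-megapixel sensors
FRAMES_PER_SECOND = 30
TARGET_TOPS = 200               # tera-operations per second

# Total pixels the perception stack must process every second.
pixels_per_second = CAMERAS * PIXELS_PER_CAMERA * FRAMES_PER_SECOND

# Assumption: all of the target compute is spent on camera pixels.
target_ops_per_second = TARGET_TOPS * 10**12
implied_ops_per_pixel = target_ops_per_second / pixels_per_second

print(f"Pixels to process per second: {pixels_per_second:,}")
print(f"Implied operations per pixel: {implied_ops_per_pixel:,.0f}")
```

Under these assumptions the cameras produce 480 million pixels per second, so 200 TOPS corresponds to a budget of roughly 417,000 operations per pixel.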

However, there is currently no mass-produced autonomous driving chip on the market with more than 200 TOPS of computing power, and the computing power each car needs will only keep growing as vehicles become more intelligent.

More importantly, any increase in chip computing power still has to meet the power consumption and production cost requirements of in-vehicle use.

Chip manufacturers face not only a technological bottleneck but also a sales bottleneck. Only with sufficient production volume can the unit manufacturing cost come down, and only at a lower price can the chip be fitted to cheaper, higher-volume cars. Unit cost and volume behave roughly like an inverse proportion.

Manufacturers must be confident about the future in order to make products that may not generate huge profits in the short term. This is one of Tesla’s advantages and the huge challenge of going the visual route.

Faced with this market situation, Tesla did not stop and wait for the market to provide a solution that could meet its demand, but chose to develop a high-computing-power chip on its own. Hardware 3.0 is currently the most powerful and cost-effective mass-produced chip on the market, although its usable computing power is only 72 TOPS (144 TOPS in total, with the other 72 TOPS reserved for redundancy). There is still a gap to the 200 TOPS target for autonomous driving, but Tesla has taken the lead and can guarantee supply for its next one million units.

Of course, the new automakers have adjusted the hardware layout according to their own understanding. Both the XPeng P7 and the Ideal ONE will add five millimeter-wave radars on top of their cameras, four more than Tesla’s current architecture. This gives dual 360-degree perception from cameras and millimeter-wave radar, compensating for the physical limitations of cameras in heavy rain and fog while also providing perception redundancy.

In addition, the XPeng P7 carries high-precision positioning hardware and uses high-precision maps provided by AutoNavi, while the Ideal ONE will also be equipped with high-precision maps as an additional source of information.

Next is the algorithm. At the Autonomy Day in February of this year, Tesla’s AI director revealed that Tesla has accumulated more than 3 billion miles of Autopilot driving data. A large volume of data is highly effective for optimizing algorithms. At present, no other company can match Tesla’s accumulated road data, so Tesla has an absolute advantage in visual recognition algorithms for autonomous driving.

In terms of results, Tesla launched the NoA function in the United States in 2016 and in China in May 2019, while no domestic automaker has launched it yet. According to the timetables announced so far, the earliest domestic launch would be at the end of this year, so from a functional perspective Tesla is at least 2 to 4 years ahead.

The hope of Chinese automakers

Traffic regulations and driving habits are highly local. After all, Tesla is still an American company, and simply transplanting the working logic of the American Autopilot to China will not work.

In automatic parking, Tesla’s recognition of parking spaces and its final position after parking are both worse than those of the Chinese brand XPeng Motors.

In ADAS, even with the following distance set to the closest level, Tesla still leaves as much as 5 meters to the vehicle ahead, which makes it very easy for other cars to cut in, while NIO can hold a distance of 3.3 meters.

In NoA, Tesla’s system treats the leftmost lane as a passing lane by default, so even if the set speed is 120 km/h, the system keeps the vehicle in the second lane from the left and only merges into the leftmost lane when overtaking.

This logic is not a big problem on American highways, but in China it makes the NoA experience extremely poor on congested highways, or on highways with only four lanes in total (two in each direction).
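
The lane rule described above can be sketched as a small function. This is a hypothetical illustration of the behavior as observed from the driver’s seat, not Tesla’s actual logic; the names `cruising_lane` and `target_lane`, and the convention that lane 0 is the leftmost lane, are assumptions made for this example.

```python
def cruising_lane(num_lanes: int) -> int:
    """Lane index (0 = leftmost) held when not overtaking.

    Hypothetical rule: the leftmost lane is reserved for passing,
    so the car cruises in the second lane from the left if one exists.
    """
    if num_lanes < 1:
        raise ValueError("a road needs at least one lane")
    return min(1, num_lanes - 1)


def target_lane(num_lanes: int, overtaking: bool) -> int:
    """Lane the car aims for under the assumed passing-lane rule."""
    return 0 if overtaking else cruising_lane(num_lanes)


def is_rightmost(lane: int, num_lanes: int) -> bool:
    """True if the lane is the rightmost (typically slowest) lane."""
    return lane == num_lanes - 1


# Four lanes per direction: cruising lane is a middle lane.
print(target_lane(4, overtaking=False), is_rightmost(target_lane(4, False), 4))
# Two lanes per direction: cruising lane is also the rightmost lane.
print(target_lane(2, overtaking=False), is_rightmost(target_lane(2, False), 2))
```

On a highway with two lanes in each direction, this rule leaves the car cruising in the rightmost lane, which is where slower traffic usually sits, and that is consistent with the poor experience described above.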

Therefore, even though Tesla’s capabilities are very strong, differences in working logic can still lead to a poor user experience. Moreover, as the degree of automation continues to increase, there will be more and more scenarios that the vehicle has to handle on its own, which will require Tesla to adjust its product accordingly.

In order to optimize the user experience for China’s road scenarios and regulations, Tao Lin, the Vice President of External Affairs for Tesla, revealed that Tesla may increase its autonomous driving research and development team for the Chinese market and form a dedicated autonomous driving team for China.

Having a specialized AI Autopilot team for the Chinese market will help FSD adapt to the differences between American and Chinese driving, improving Tesla’s ability to achieve “complete autonomous driving” in China.

For Chinese car companies, they may be somewhat behind Tesla in hardware and algorithms when it comes to achieving FSD, but they also have a window of opportunity. The outcome will ultimately depend on the efforts made by Chinese car makers themselves.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.