Tesla Autopilot has not received a major update in a long time.

Since Tesla rolled out software version 2018.42.2 widely on October 27, 2018, the elite Autopilot software team has focused all its efforts on developing the Enhanced Summon feature.

Nine months have passed, but the daring and efficient Autopilot team has yet to get Enhanced Summon to work.

On April 6, 2019, Elon Musk announced on Twitter that Enhanced Summon would roll out widely a week later. In practice, however, that version of Enhanced Summon was not as impressive as Elon had claimed it would be.

During Tesla’s Investor Day on April 23, Elon inadvertently revealed that the latest version of Enhanced Summon is still in internal testing and is not yet suitable for mass deployment to users.

In other words, the ideal Enhanced Summon is still not available, and its latest target release date has been set for “around August 16.”

What is going on with Tesla Autopilot? In this article, I will try to explain, from three angles (organizational structure, AI and software, and hardware), why Autopilot has reached a turning point and why Elon Musk is pushing into the final battle for Autopilot.

Achieving Autonomous Driving in 5 Months

Elon often drives his Tesla from his home in Brentwood, Los Angeles, to the Tesla Design Center in Hawthorne. He has a Model S and a Model 3, and both run developer builds of Autopilot from two different branches: one supports Enhanced Summon, and the other supports Full Self-Driving.

On his daily commute, he tests these features and provides direct feedback to the Autopilot team.

Eight months later, Elon resumed talking about Autopilot.

“The two biggest software challenges are intersections with complex traffic lights and shopping-center parking lots.” Most of the development team’s effort is focused on these scenarios, but reaching 99.9999% safety will take a great deal of work.

Parking (Summon) is a very tricky problem. There will be a deep engineering review of “Summon” later today.

Answering another user’s question three days later, Elon revealed that “Summon” would be rolled out widely around August 16. The Autopilot team had overcome many complex challenges, and Elon described the new version of “Summon” as “magical.”

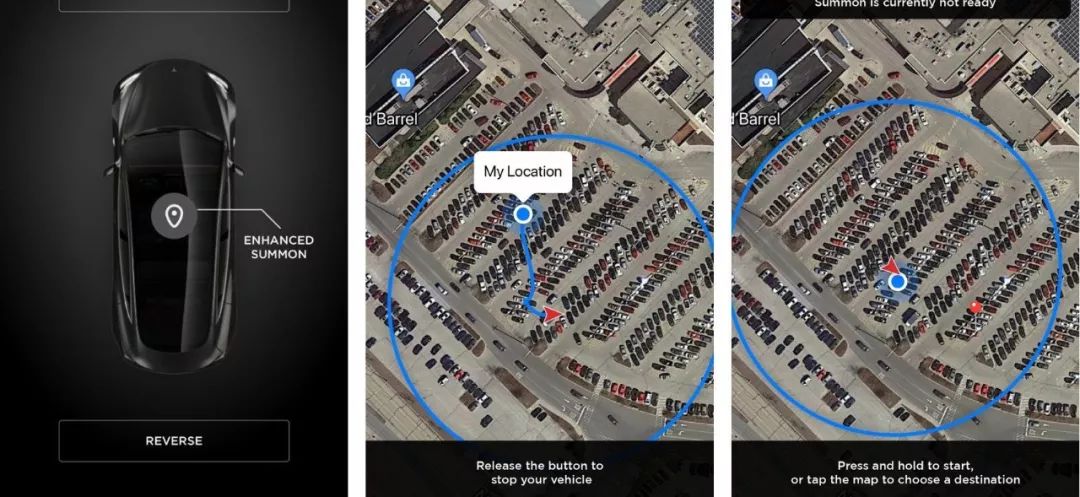

“Summon” is defined as follows: in response to the driver’s call from the mobile app, the car leaves whatever parking space it is in and drives itself to the driver’s location. That does not sound very difficult, so why did Autopilot’s development stall for so long?

“The biggest software challenge” is a vague term. Specifically, is it a challenge to perception, decision-making, or control?

Since October 2015, Elon has been personally interviewing and directly leading the Autopilot team. Today, the Tesla Autopilot team has about 200 people in total.

Autopilot Hardware Vice President Pete Bannon leads a hardware team of about 70 people and is responsible for the independent development of Tesla’s AI chips and millimeter-wave radar.

Autopilot Engineering Vice President Stuart Bowers leads Autopilot’s largest team, about 100 people, responsible for maps, quality control, simulation, and firmware updates.

Autopilot Vision & Tesla AI Senior Director Andrej Karpathy leads the smallest but most critical team, which explores the frontiers of Tesla’s computer vision and artificial intelligence technology. This team of about 35 people works on techniques such as self-supervised learning, imitation learning, and reinforcement learning, making it one of the top applied AI research teams in Silicon Valley, and arguably in the world.

The stable arrangement of Pete, Stuart, and Karpathy all reporting directly to Elon had lasted for nearly a year (very rare at Tesla), until things began to change after Tesla’s Investor Day on April 23.

At Investor Day, Elon presented Tesla’s ten-year to-do list. The left-hand side showed milestones already achieved, and the right-hand side stated explicitly that in 2019, within the next five months, Tesla would achieve feature-complete autonomous driving.

This time, there is no closed Mobileye vision system holding Autopilot back, and no compute ceiling from the Autopilot 2.x hardware. Over the past three years, Elon Musk has spared no effort in removing every physical obstacle on the road to autonomous driving. Now it is up to the Autopilot team to step up.

Achieving autonomous driving within five months is a harsh, even cruel, timetable, but the R&D work has already begun. Media reports have described intense conflicts between Elon and the Autopilot team. In reality, there were only two choices: achieve autonomous driving in five months, or leave.

On May 10, the software engineering team led by Stuart was the first to be hit. Five engineers left in succession:

- Nenad Uzunovic, Enhanced Summon Technical Lead

- Zeljko Popovic, Perception Lead

- Drew Steedly, Chief Perception Engineer

- Frank Havlak, Senior Engineer, Control and Path Planning

- Ben Goldstein, Senior Engineer, Simulation

A bigger storm was brewing within Autopilot. Stuart was demoted, and Ashok Elluswamy was promoted to head of the perception and computer vision team; CJ Moore became responsible for Autopilot simulation and quality control, and Drew Baglino took charge of path planning. All three now report directly to Elon.

In other words, besides the five core executives (CEO, CFO, CTO, Chief Designer, and President of Automotive) and the 22 vice presidents around the world, three new Autopilot executives now report directly to Elon. The new organizational structure is as follows:

- Autopilot Hardware VP Pete Bannon

- Autopilot Engineering VP Stuart Bowers

- Autopilot Vision Senior Director Andrej Karpathy

- Autopilot Perception & CV Head Ashok Elluswamy

- Autopilot Path Planning Head Drew Baglino

- Autopilot Simulation Head CJ Moore

As a result, Autopilot now has more executives reporting directly to Elon than any other Tesla department: six in total.

Many of the departing engineers were founding members of Autopilot with more than five years at the company. Over those five years, they lived through Autopilot 1.0 to 3.0 and through a period of “a tough Elon and frequently replaced software VPs.” So why did they resign now?

In the past, no matter how harsh the schedule, Elon’s assignments were along the lines of “develop and test automatic lane change in three months” or “replace Mobileye with in-house vision tools in six months.” These were world-class challenges, but all-out effort plus deadline extensions gave the team enough slack to solve them eventually.

Now, they had been handed the task of “achieving autonomous driving in five months.”

Since Google X began developing autonomous vehicles in 2009, billions of dollars have poured into autonomous driving research and development. Countless industry giants, universities, and research institutions have invested heavily in talent. Yet to this day, autonomous driving remains a wilderness, a vast and boundless technological void.

For the past five years, Tesla faced physical limitations in suppliers, vehicle regulations, computing power, and more, and lacked the practical means to enter the field of autonomous driving in earnest. Now Elon Musk has begun pushing hard on autonomous driving development.

Autopilot Vision is a turning point.

How Autopilot Vision is Made

In June 2017, Andrej Karpathy joined Tesla as Director of AI and Autopilot Vision. While the Autopilot department went through drastic personnel changes, the 35-person AI team remained solid as a rock, and Karpathy’s work was indispensable.

Before joining Tesla, Karpathy did AI research at Stanford University’s AI Lab, Google, and OpenAI. The AI techniques applied in Tesla Autopilot include self-supervised learning, imitation learning, and reinforcement learning.

On Tesla’s official website, we read that “Tesla Vision is based on deep neural networks and can analyze the driving environment with greater reliability than traditional vision-processing techniques.”

But how does Tesla apply AI to drive Autopilot towards the road of autonomous driving? We need more information.

First, we need to understand that the so-called Tesla Vision is an end-to-end deep neural network consisting of multiple deep neural networks with different roles.

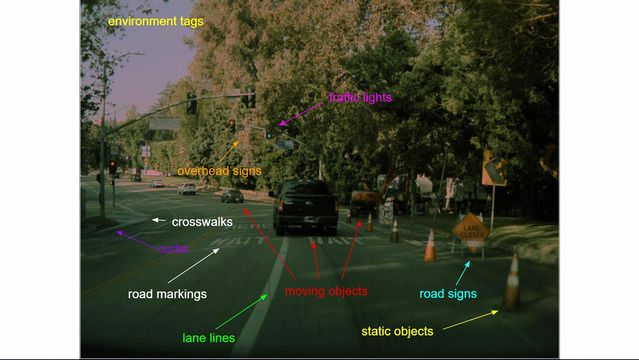

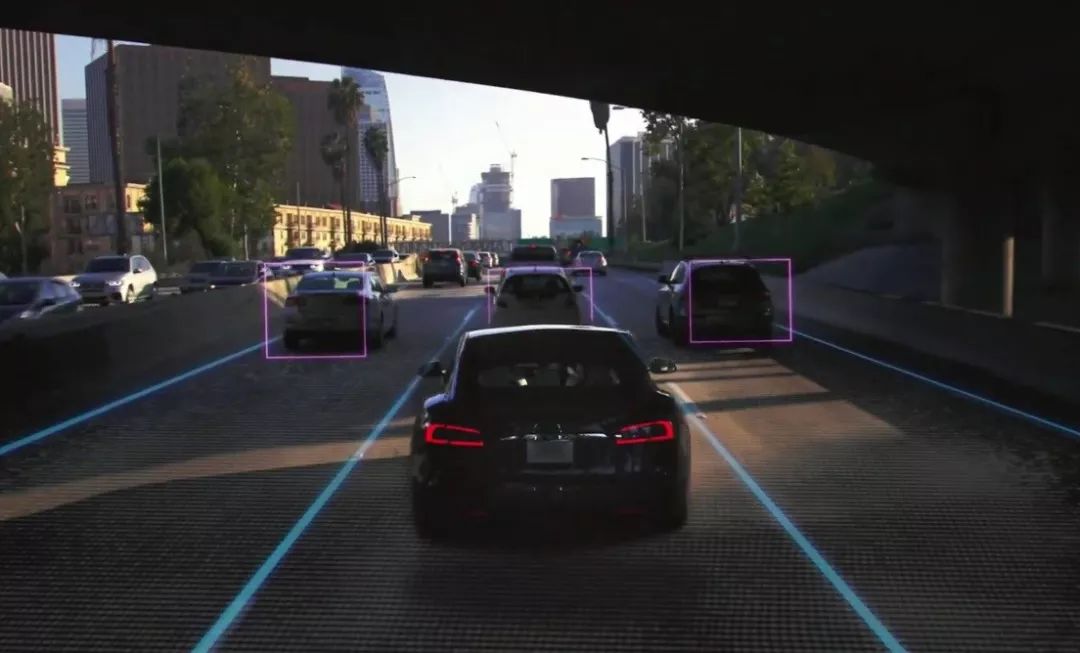

First is “object detection and classification,” including obstacle detection and recognition of traffic signals and road signs.

- DriveNet: Detects other vehicles, pedestrians, traffic lights (without distinguishing their states), and road signs.

- LightNet: Classifies the state of traffic lights: red, yellow, or green.

- SignNet: Recognizes the type of road sign, such as stop, speed limit, or one-way street.

- WaitNet: Detects situations where the vehicle must stop and wait, such as intersections or large parking lots.
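The article describes Tesla Vision as several networks with different roles. One common way to organize such a system is a shared feature extractor (a “backbone”) feeding several task-specific heads, so the expensive features are computed once per image and reused. The toy Python sketch below assumes that layout; it is not Tesla’s code, tiny linear layers stand in for deep networks, and the head names `light_state` and `sign_type` are hypothetical stand-ins loosely mirroring LightNet and SignNet.

```python
from typing import Dict, List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def matvec(m: Matrix, x: Sequence[float]) -> List[float]:
    """Plain matrix-vector product (one linear layer without bias)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def relu(v: Sequence[float]) -> List[float]:
    return [max(0.0, x) for x in v]


class MultiHeadPerception:
    """Toy shared-backbone / multi-head model (illustrative only).

    backbone: weights of a single linear layer + ReLU, shared by every task.
    heads:    task name -> (weights of a linear classifier, its label names).
    """

    def __init__(self, backbone: Matrix, heads: Dict[str, Tuple[Matrix, List[str]]]):
        self.backbone = backbone
        self.heads = heads

    def __call__(self, pixels: Sequence[float]) -> Dict[str, str]:
        # Shared features are computed once and reused by all heads.
        features = relu(matvec(self.backbone, pixels))
        outputs = {}
        for name, (weights, labels) in self.heads.items():
            scores = matvec(weights, features)
            # Each head picks its highest-scoring label (argmax).
            best = max(range(len(scores)), key=lambda i: scores[i])
            outputs[name] = labels[best]
        return outputs


if __name__ == "__main__":
    # Hand-picked weights so the behavior is easy to follow.
    model = MultiHeadPerception(
        backbone=[[1.0, 0.0], [0.0, 1.0]],
        heads={
            "light_state": ([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]], ["red", "green", "yellow"]),
            "sign_type": ([[0.0, 1.0], [1.0, 0.0]], ["stop", "speed_limit"]),
        },
    )
    print(model([1.0, 0.0]))
    print(model([0.0, 1.0]))
```

Adding a new task in this layout means adding one more head, not another full network, which is the usual motivation for sharing a backbone.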

In the “object detection and classification” section, Karpathy advocates using “self-supervised learning” to quickly improve the ability of Tesla Vision.

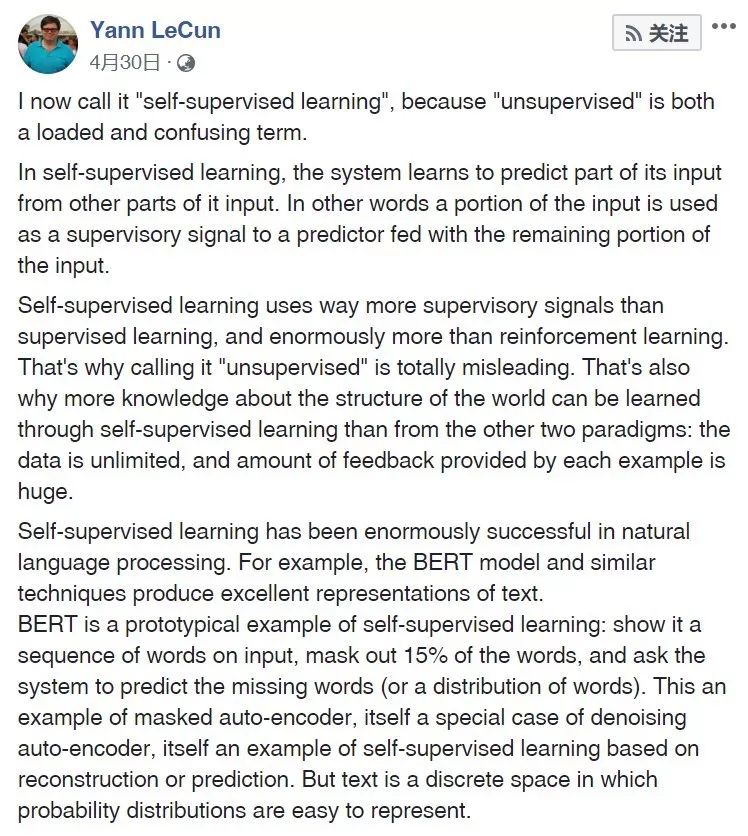

Self-supervised learning is a very popular subfield of deep learning. On April 30, Yann LeCun, one of the three pioneers of modern AI and Facebook’s Chief AI Scientist, published a post on the current state of self-supervised learning. The technique has achieved huge success in natural language processing, but it still does not work well for images and video, which in his view will be the greatest challenge for ML and AI in the next few years.

Applying self-supervised learning to images and video is exactly what Karpathy is doing, except that “the next few years” has been compressed into five months.

What is self-supervised learning? It learns discriminative visual features by solving auxiliary (pretext) tasks whose target labels can be obtained directly from the training data, such as the images themselves. These labels then provide the supervisory signal for training computer vision models.

The biggest advantage of self-supervised learning is that it removes supervised learning’s dependence on human-annotated data: it uses information that comes naturally with the data, such as what happens before and after a given moment in a scene, as the supervisory signal.
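A minimal way to see how labels can come “for free” from the data: treat an unlabeled sequence (a stand-in for consecutive video frames) as its own supervision by predicting each next value from the previous two. The sketch below is a toy illustration of that idea, not Tesla’s method; the sine signal, the two-tap linear predictor, and the function names are assumptions chosen so the result is easy to check.

```python
import math
from typing import List, Sequence, Tuple

Pair = Tuple[Tuple[float, float], float]


def make_pretext_pairs(signal: Sequence[float]) -> List[Pair]:
    """Turn an unlabeled sequence into ((x[t-2], x[t-1]), x[t]) training pairs.

    The "label" of each pair is just the value that comes next in the data,
    so no human annotation is involved.
    """
    return [((signal[t - 2], signal[t - 1]), signal[t]) for t in range(2, len(signal))]


def fit_two_tap_predictor(pairs: Sequence[Pair]) -> Tuple[float, float]:
    """Least-squares fit of y = w0 * a + w1 * b via the 2x2 normal equations."""
    saa = sab = sbb = say = sby = 0.0
    for (a, b), y in pairs:
        saa += a * a
        sab += a * b
        sbb += b * b
        say += a * y
        sby += b * y
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("inputs are degenerate; normal equations are singular")
    # Cramer's rule for [[saa, sab], [sab, sbb]] @ [w0, w1] = [say, sby]
    w0 = (say * sbb - sab * sby) / det
    w1 = (saa * sby - sab * say) / det
    return w0, w1


if __name__ == "__main__":
    # Unlabeled "recording": a sampled sine wave stands in for raw sensor data.
    signal = [math.sin(0.3 * t) for t in range(200)]
    w0, w1 = fit_two_tap_predictor(make_pretext_pairs(signal))
    # A sine wave obeys x[t] = 2*cos(0.3)*x[t-1] - x[t-2], so w0 ~ -1 and w1 ~ 1.91.
    print(w0, w1)
```

The model recovers the signal’s underlying dynamics without a single hand-made label; real self-supervised vision systems apply the same principle with deep networks and far richer pretext tasks.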

Take autonomous driving as an example. As of July 5, Tesla Autopilot had accumulated more than 1.55 billion miles globally. Cleaning and hand-labeling a dataset of that astronomical size to train and improve deep neural networks is impossible in the short term.

Self-supervised learning addresses this problem well. To some extent, it puts deep neural networks on a path of self-improvement: they simply keep training on data collected by the global fleet of 500,000 vehicles and keep getting stronger.

Once perception is in place, the next step is path planning. Path planning likewise relies on several deep neural networks that perceive the environment, including the road ahead of the vehicle.

- OpenRoadNet: Identifies all drivable space around the vehicle, including the current lane and adjacent lanes.

- PathNet: Highlights the feasible path for the vehicle when there are no lane lines.

- LaneNet: Detects lane lines and other markers that define the driving path.

- MapNet: Identifies lanes and landmarks that can be used to create and update high-definition maps.

Path planning also faces some extremely complex challenges. For example, how should the car drive when lane lines are faded or missing altogether (the situation PathNet above is meant to handle)? Karpathy’s answer: Autopilot should do it the way humans do.

Imitation learning is a popular research approach in deep learning. In February 2019, Waymo’s head of research, Drago Anguelov, gave a talk at MIT in which he explained that Waymo’s self-driving cars use “imitation learning” to improve their driving by learning from human driving behavior.

The approach Tesla uses is called “behavior cloning,” a type of imitation learning. The idea is easy to grasp: we humans learn many new skills by watching how others do them and then imitating them. As Karpathy put it at Autonomy Investor Day:

So we just source a lot of this from the fleet, we train a neural network on those trajectories, and then the neural network predicts paths just from that data. So, really what this is referred to typically is called imitation learning.

We’re taking human trajectories from the real world and we’re just trying to imitate how people drive in real worlds.

I mentioned earlier that intersections with complex traffic lights are among the most challenging scenarios for autonomous vehicles. In such cases, how should the system make decisions?

Every Tesla that passes through a given intersection, with or without Autopilot engaged, generates image data from its eight cameras. Once enough image data has accumulated, a deep neural network extracts what human drivers’ decisions at that intersection have in common, including the vehicle’s position, speed, steering angle, and braking force, and learns from it.

When another Tesla passes through the same intersection with Autopilot engaged, it imitates the safe driving behavior of human drivers to make its decisions.

Moreover, when it encounters similar intersections in other areas, cities, or even countries, the deep neural network draws on the safe driving behavior it has learned and matches it to the new situation, which is a form of transfer learning.
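The imitate-the-humans idea above is behavior cloning: learn a mapping from situations to the actions human drivers took in them. The sketch below swaps in a simple k-nearest-neighbor policy instead of a deep network, which also mirrors the “match a new situation to similar past ones” step. The state features (speed, distance to the stop line), the logged demonstrations, and the function name are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical state: (speed in m/s, distance to the stop line in m).
State = Tuple[float, float]
# Hypothetical action: longitudinal acceleration chosen by the driver, in m/s^2.
Action = float


def knn_behavior_cloning(
    demos: Sequence[Tuple[State, Action]], k: int = 2
) -> Callable[[State], Action]:
    """Build a policy that imitates the k most similar logged human situations."""
    if not 1 <= k <= len(demos):
        raise ValueError("k must be between 1 and the number of demonstrations")

    def policy(state: State) -> Action:
        # Rank demonstrations by Euclidean distance to the current state.
        nearest = sorted(demos, key=lambda d: math.dist(d[0], state))[:k]
        # Imitate: average what the human drivers did in those situations.
        return sum(action for _, action in nearest) / k

    return policy


if __name__ == "__main__":
    # Made-up demonstrations of drivers approaching a stop line.
    demos: List[Tuple[State, Action]] = [
        ((10.0, 50.0), -0.5),
        ((10.0, 20.0), -2.0),
        ((5.0, 10.0), -1.5),
        ((0.0, 0.0), 0.0),
        ((12.0, 60.0), -0.4),
    ]
    policy = knn_behavior_cloning(demos, k=2)
    print(policy((10.0, 48.0)))  # averages the two closest demonstrations
```

Because features in different units are compared with a plain Euclidean distance here, a real system would at least normalize them; a neural network, as the article describes, also generalizes more smoothly than a nearest-neighbor lookup.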

We have discussed self-supervised learning and imitation learning, but many difficult challenges remain before Teslas can drive autonomously all over the world. What is the biggest one?

A growing number of top experts, such as Chris Urmson, former CTO of Google’s self-driving car project (now Waymo); Wang Gang, Alibaba’s chief scientist for autonomous driving; and Anthony Levandowski, who formerly led Uber’s self-driving program, have publicly stated that understanding human intent is the fundamental challenge for autonomous vehicles.

Tesla’s “Enhanced Summon” (also called “Smart Summon”) has been in development for nine months. Given the above, one might expect that by continuously imitating human drivers, a Tesla could drive itself out of a parking lot and come to the driver. So why has the feature been delayed?

Because every time the car pulls out of a parking space and heads out of the lot, it faces too many uncertainties: road conditions, other vehicles, and the directions and intentions of pedestrians.

At this time, Karpathy’s expertise in reinforcement learning comes in handy.

Reinforcement learning approaches autonomous driving from a broader, more global perspective.

Reinforcement learning also works without hand-labeled data (like self-supervised learning), but the agent receives a reward or penalty signal that tells it whether it is getting closer to or farther from its goal, here, driving autonomously. This reward signal can be thought of as a delayed and sparse form of labels.

In self-supervised learning, the target for each input can be derived directly from the data. In reinforcement learning, the agent must act for a while before it receives delayed feedback, which only hints at whether it is moving closer to or farther from its goal.
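To make “delayed and sparse” concrete, here is a sketch of tabular Q-learning (a standard reinforcement learning algorithm, not Tesla’s system) on a toy corridor: the agent starts at one end and receives a reward of 1 only when it reaches the far end, think “arriving at the driver.” Every other step earns nothing, so the value of early moves has to be learned by propagating that single delayed reward backward. The corridor size and hyperparameters are arbitrary assumptions.

```python
import random
from typing import List, Tuple

N_STATES = 6           # positions 0..5 in a toy corridor; 5 is the goal
ACTIONS = (-1, +1)     # index 0 = step left, index 1 = step right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Move one cell; the only reward is 1.0 on reaching the goal (sparse, delayed)."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes: int = 500, alpha: float = 0.5, gamma: float = 0.9,
               epsilon: float = 0.2, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)                  # explore
            else:
                a = 1 if q[s][1] >= q[s][0] else 0    # exploit (ties go right)
            s2, r, done = step(s, ACTIONS[a])
            # Temporal-difference target: reward now plus discounted future value.
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])
            s = s2
            if done:
                break
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best step direction for every non-goal state."""
    return [ACTIONS[1] if row[1] >= row[0] else ACTIONS[0] for row in q[:-1]]


if __name__ == "__main__":
    q = q_learning()
    print(greedy_policy(q))
    print([round(max(row), 3) for row in q])
```

The learned values shrink by a factor of `gamma` per step away from the goal, which is how the agent comes to “know” that early moves matter even though they were never rewarded directly.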

Here, I would like to use an example from outside autonomous driving to illustrate the point. In January 2019, after two years of development, DeepMind’s AlphaStar defeated two of the world’s strongest professional StarCraft II players, Dario “TLO” Wünsch and Grzegorz “MaNa” Komincz of Team Liquid, 5-0 each, mastering one of the most complex games humans have ever created.

StarCraft has the following five characteristics:

- No best strategy (the game process varies a lot)

- Incomplete information (unable to see global information)

- Long-term planning (cause and effect are not immediately obvious)

- Real-time (it must constantly perceive, decide and execute as time goes by)

- Large action space (hundreds of different units and buildings)

Does that sound familiar? The above five characteristics are highly relevant to the challenges faced by autonomous driving vehicles.

AlphaStar’s deep neural network is trained directly on raw StarCraft II game data, first with supervised learning on human games and then with reinforcement learning.

AlphaStar and Autopilot share a very similar basic AI approach and face similar scenarios and problems. The difference is that AlphaStar only has to beat humans, while Autopilot not only has to outperform human drivers but also has to reach 99.9999% safety, that is, an accident rate of no more than 0.0001%.
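Why is 99.9999% so demanding? A back-of-the-envelope sketch, assuming (as a simplification) that each maneuver independently succeeds with probability p: over n maneuvers, the expected number of failures is (1 - p) * n, and the chance of at least one failure is 1 - p^n. Even at p = 0.999999, a million maneuvers still yield about one expected failure. The maneuver counts below are illustrative, not Tesla data.

```python
def expected_failures(p_success: float, n: int) -> float:
    """Expected number of failed maneuvers out of n independent attempts."""
    return (1.0 - p_success) * n


def prob_any_failure(p_success: float, n: int) -> float:
    """Probability that at least one of n independent maneuvers fails."""
    return 1.0 - p_success ** n


if __name__ == "__main__":
    for p in (0.999, 0.999999):
        for n in (1_000, 1_000_000):
            print(f"p={p}, n={n:>9,}: expected failures={expected_failures(p, n):.3f}, "
                  f"P(at least one)={prob_any_failure(p, n):.3f}")
```

At a fleet scale of hundreds of thousands of cars, every extra “9” of reliability directly cuts the absolute number of incidents, which is why the last fractions of a percent take so much work.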

A better tomorrow

Undoubtedly, this AI-driven complex system is the most exciting application of artificial intelligence in the automotive industry. So, will Tesla Autopilot succeed?

First, let’s look at what sets Elon apart from other automotive entrepreneurs.

As early as 2015, Elon and Sam Altman co-founded OpenAI, the world’s top non-profit artificial intelligence research institution, with its backers pledging $1 billion. Although Elon left the OpenAI board in 2018, OpenAI’s CTO and chief scientist are still his friends. In addition, DeepMind CEO Demis Hassabis and many other technical leaders know Elon well.

In January 2017, a panel discussion was held at the Asilomar conference on beneficial AI. Nine of the ten guests on stage were AI scientists and researchers from UC Berkeley, NYU, Cornell, and other top institutions, and the only entrepreneur present was Elon.

My point is that Elon is the only automotive entrepreneur who truly understands AI and who has earned a place on the front lines of the AI field through his expertise. When it comes to recruiting AI talent for Tesla, this advantage is unparalleled.

And this is just the tip of the iceberg for Tesla Autopilot. Tesla’s unique and substantial advantages in chips, in-house perception, decision-making, and control, vertical integration with a global fleet, and AI are already beginning to show.

Why has the autonomous driving industry so quickly split into alliances, with Volkswagen partnering with Ford and Mercedes-Benz partnering with BMW? The CEOs of those four companies combined cannot match Elon’s appeal to talent.

This is a very real problem. Imagine you have just graduated from Stanford: would you rather go to NIO’s North American autonomous driving R&D center, or go to BAIC to integrate supplier-provided driver-assistance systems?

Elon has good reason to say, “I’m not overconfident, but no car company can match Tesla (Autopilot).”

The second reason to have high expectations for Tesla Autopilot is that Tesla is extremely aggressive.

At Tesla’s Autonomy Investor Day, Stuart told an audience of dozens of investors:

When we initially have some algorithms we want to try out, we can put them on the fleet, and we can see what they would have done in a real world scenario…
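What Stuart describes is often called “shadow mode”: a candidate algorithm runs on fleet data without controlling the car, and its decisions are compared with what the driver actually did. The sketch below is a minimal illustration under assumptions, not Tesla’s pipeline: the log schema (`Frame`), the car-following rule used as the candidate, and the 0.5 m/s² disagreement tolerance are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence


@dataclass
class Frame:
    """One logged moment from a drive (hypothetical schema)."""
    speed: float          # ego speed, m/s
    gap_to_lead: float    # distance to the car ahead, m
    human_accel: float    # acceleration the driver actually applied, m/s^2


def candidate_policy(f: Frame) -> float:
    """Hypothetical car-following rule under test: aim for a 2-second gap."""
    desired_gap = 2.0 * f.speed
    accel = 0.1 * (f.gap_to_lead - desired_gap)
    return max(-3.0, min(1.5, accel))  # clamp to comfortable limits


def shadow_evaluate(frames: Sequence[Frame], policy: Callable[[Frame], float],
                    tolerance: float = 0.5) -> List[int]:
    """Run the policy passively and return indices where it disagrees with the driver.

    Nothing is actuated: the policy's output is only compared with the logged human action.
    """
    return [i for i, f in enumerate(frames) if abs(policy(f) - f.human_accel) > tolerance]


if __name__ == "__main__":
    log = [
        Frame(speed=10.0, gap_to_lead=25.0, human_accel=0.5),
        Frame(speed=10.0, gap_to_lead=10.0, human_accel=-1.0),
        Frame(speed=20.0, gap_to_lead=10.0, human_accel=-1.0),
        Frame(speed=5.0, gap_to_lead=50.0, human_accel=0.0),
    ]
    flagged = shadow_evaluate(log, candidate_policy)
    print(f"{len(flagged)} of {len(log)} frames flagged for review: {flagged}")
```

Flagged frames are the interesting ones: either the candidate is wrong and needs fixing, or the human was and the case becomes training data.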

Navigate on Autopilot (NoA) taking an off-ramp at 100 km/h, or a certain Autopilot version hesitating abruptly during a lane change: all of these are products of validating algorithms on the fleet.

The payoff of this extreme aggressiveness is rapid trial and error and rapid improvement. No other car company improves its system by validating it across its global customer fleet.

You might ask: if Tesla is so capable, why has “Enhanced Summon” been delayed for so long? Besides the fact that “Enhanced Summon” is one of the most complicated scenarios in deploying autonomous driving, Elon’s own words give a good answer:

When we release something, we’re releasing it to 500,000 cars and all over the world. And so it has to be a general solution. So our progress may appear slower than it actually is relative to others that are developing self-driving technology.

But in fact, it is quite a lot more advanced because any element that we release is a general solution.

This is why Elon says: “I’m not overconfident, but no car company can match Tesla.”

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.