On May 16, 2019, Nissan held a press conference for the release of its next-generation assisted driving system, ProPilot 2.0, at its headquarters in Yokohama, Japan. Subsequently, Reuters published a commentary article titled “Standing Radar: Nissan’s Attitude Toward Lidar is Cold, Consistent with Tesla”.

On closer inspection, we believe the technical architectures of Nissan’s ProPilot 2.0 and Tesla’s Autopilot differ significantly. Even so, the press conference showed that ProPilot 2.0 already offers substantial functionality, and another camp represented by ProPilot 2.0 may become a strong competitor to Tesla’s Autopilot in the future.

Taking Sides with Tesla?

Tetsuya Iijima, General Manager of Nissan’s Advanced Driver-Assistance Systems Development, gave his evaluation of lidar in an interview with Reuters:

Currently, lidar lacks performance that exceeds the latest radar and camera technologies. It would be great if lidar reached the level where we could use it in our (car) system. However, this is not the case, and its cost and performance do not match.

Meanwhile, the slide behind Tetsuya Iijima showed perception cameras recognizing lane markings and detecting obstacles in front of the vehicle. It seems easy to conclude that “Nissan sides with Tesla and has little interest in lidar.”

However, in an interview with The Drive, Kunio Nakaguro, Senior Vice President of Alliance Product Development at Nissan-Renault-Mitsubishi, explained further: Nissan does not believe that current lidar can provide better performance, and does not think it is necessary to deploy lidar today.

Here, in fact, Nissan’s attitude toward lidar differs from Tesla’s consistent anti-lidar stance. A subsequent response from Nissan’s public relations team makes the disagreement with Tesla even more evident.

Nakaguro’s evaluation of lidar applies only to ProPilot 2.0, an assisted driving system delivered in mass-produced vehicles. His critical comments about lidar do not apply to all levels of autonomous driving systems.

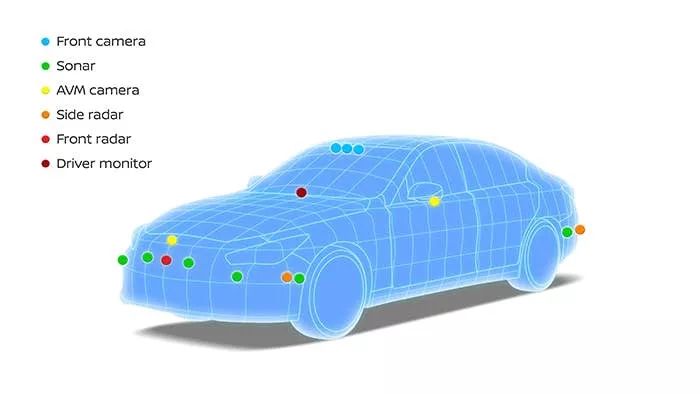

This also suggests that as the cost of lidar decreases and its performance and reliability improve, Nissan is likely to apply lidar in L3/4/5 autonomous driving systems in the future.

Nissan’s announcement also included the layout diagram of ProPilot 2.0 sensors, which are distributed as follows:

- 5 radars (1 front + 4 corner)
- 8 cameras (3 front-facing + 4 AVM + 1 DMS)
- 12 ultrasonic sensors

On the other hand, the sensor layout of Tesla Autopilot is as follows:

- 1 radar (front-facing)
- 8 cameras (3 front-facing + 4 side-facing + 1 rear-facing)
- 12 ultrasonic sensors

At first glance, it seems that Nissan ProPilot 2.0 values visual perception just as much, since it carries the same number of cameras, and that its four additional corner radars make it safer than Autopilot.

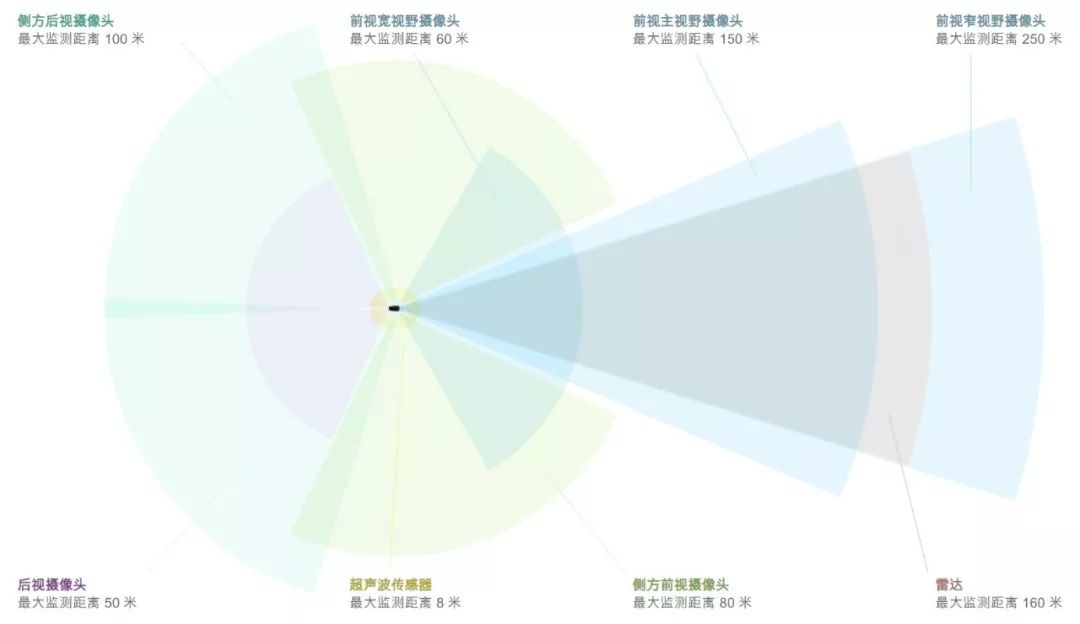

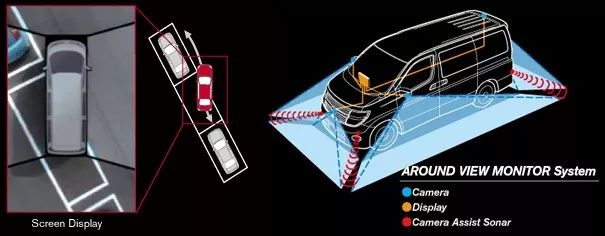

However, this is not the case. The key lies in the four AVM cameras on ProPilot 2.0. AVM stands for Around View Monitor, a 360-degree panoramic system developed independently by Nissan. In other words, these four cameras are wide-angle panoramic cameras that can hardly contribute to perception in high-speed ADAS scenarios (automatic parking is the exception). Even if they are involved, their capabilities are very limited.
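The comparison above can be made concrete with a small tally. The following is only an illustrative sketch: the sensor counts come from the two layouts listed earlier, while the `highway_perception` flag is our own reading of the article (AVM and DMS cameras treated as not contributing to high-speed perception), not a specification from Nissan or Tesla. The class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraGroup:
    label: str
    count: int
    # Assumption: this flag reflects the article's argument, not vendor data.
    highway_perception: bool


@dataclass(frozen=True)
class SensorSuite:
    name: str
    radars: int
    ultrasonic: int
    cameras: tuple

    def camera_total(self) -> int:
        """All cameras, regardless of role."""
        return sum(group.count for group in self.cameras)

    def highway_cameras(self) -> int:
        """Only cameras assumed to contribute to high-speed perception."""
        return sum(g.count for g in self.cameras if g.highway_perception)


PROPILOT_2 = SensorSuite(
    name="ProPilot 2.0",
    radars=5,
    ultrasonic=12,
    cameras=(
        CameraGroup("front-facing", 3, True),
        CameraGroup("AVM surround view", 4, False),
        CameraGroup("DMS driver monitoring", 1, False),
    ),
)

AUTOPILOT = SensorSuite(
    name="Autopilot",
    radars=1,
    ultrasonic=12,
    cameras=(
        CameraGroup("front-facing", 3, True),
        CameraGroup("side-facing", 4, True),
        CameraGroup("rear-facing", 1, True),
    ),
)

if __name__ == "__main__":
    for suite in (PROPILOT_2, AUTOPILOT):
        print(
            f"{suite.name}: {suite.radars} radars, "
            f"{suite.camera_total()} cameras in total, "
            f"{suite.highway_cameras()} assumed usable for highway perception"
        )
```

Under these assumptions, both systems carry eight cameras, but only three of ProPilot 2.0’s count toward highway perception, while it has four more radars than Autopilot.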

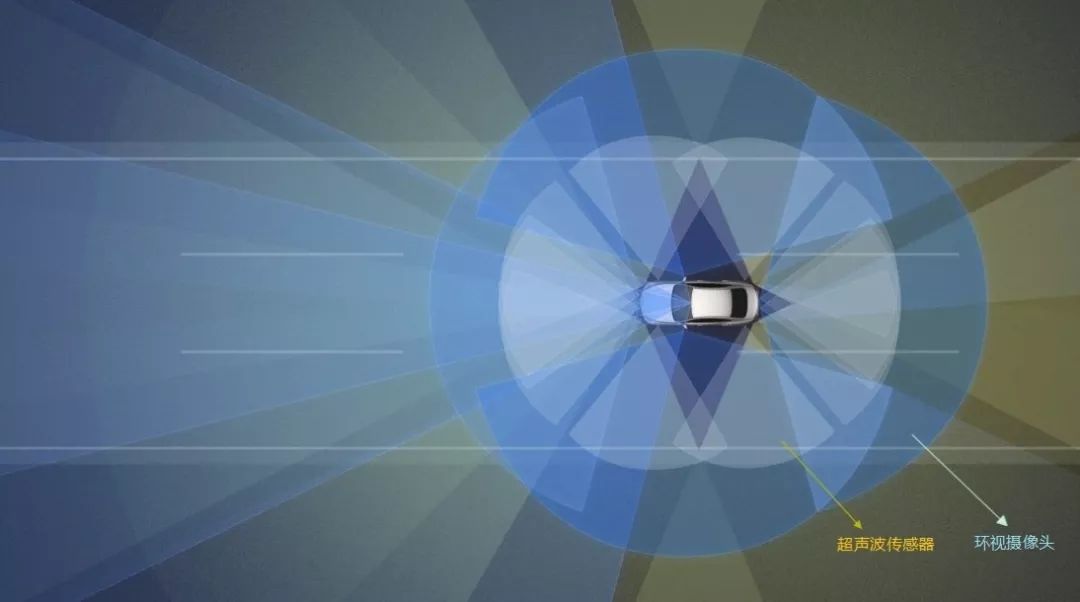

These two illustrations clearly show the huge difference in detection distance between panoramic cameras and the perception cameras used by Tesla.

How powerful is ProPilot 2.0?

According to Tetsuya Iijima, ProPilot 2.0 makes Nissan the leader in assisted driving, which is a key part of Nissan’s brand-building strategy.

All of the functions are the world’s highest level. It is going to be very difficult for others to top this and overtake us. We have integrated the most advanced-level technologies.

What are the “world’s highest-level” and “most advanced-level” technologies? According to the official statement, ProPilot 2.0 is the world’s first assisted driving system that combines highway navigation driving and single-lane hands-free driving.

Here is the specific meaning of “highway navigation driving”.

This system is designed for automated driving onto and off highway ramps. Working with the vehicle navigation system, it assists the vehicle in following the predetermined route on the designated road. It supports assisted driving on multi-lane roads, including overtaking, changing lanes, and exiting.

“Single-lane hands-free driving” is as follows.

When driving in a single lane, the driver can take both hands off the steering wheel and only needs to watch the road ahead. If something unexpected happens with the road, traffic, or the vehicle, the driver can take back manual control of the steering wheel at any time.

When the driver wants to change lanes, the driver places both hands on the steering wheel and activates the turn signal. Once the system confirms that current road conditions allow a lane change, it assists the vehicle in changing lanes automatically.
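The lane-change conditions described above can be sketched as a simple check. This is a minimal illustration of the three conditions as the article states them, not Nissan’s actual logic; the names `DriverState` and `may_start_lane_change` are hypothetical, and the `road_supports_change` input stands in for whatever the real system evaluates.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DriverState:
    hands_on_wheel: bool
    turn_signal_on: bool


def may_start_lane_change(driver: DriverState, road_supports_change: bool) -> bool:
    """Return True only when all three conditions from the article hold.

    Assumption: the conditions are treated as a plain logical AND; the real
    system's checks and their ordering are not described in the source.
    """
    return (
        driver.hands_on_wheel
        and driver.turn_signal_on
        and road_supports_change
    )


if __name__ == "__main__":
    hands_free = DriverState(hands_on_wheel=False, turn_signal_on=True)
    ready = DriverState(hands_on_wheel=True, turn_signal_on=True)
    print(may_start_lane_change(hands_free, road_supports_change=True))
    print(may_start_lane_change(ready, road_supports_change=True))
```

In this sketch a hands-free driver can stay in the lane but cannot trigger a lane change, which mirrors the distinction the article draws between the two modes.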

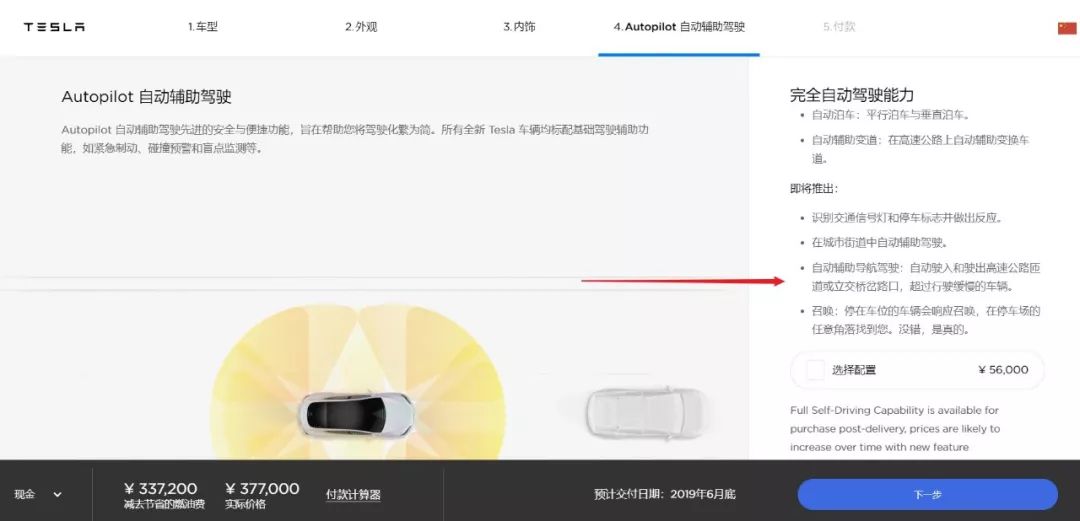

This brings to mind Navigate on Autopilot, the Tesla Autopilot feature defined as “Automated Assistance Navigation Driving”. It enables automatic lane changes, automatic entry to and exit from highways and overpasses, and overtaking of slow-moving vehicles.

In other words, ProPilot 2.0 can also perform the function that most clearly sets Autopilot apart from other ADAS systems. Given how unique Autopilot has been in the industry, that makes ProPilot 2.0 impressive.

So, how did Nissan achieve this?

Nissan + Mobileye + Zenrin = The Best Japanese ADAS?

As noted above, the detection range of the surround-view cameras is much shorter than that of perception cameras. However, ProPilot 2.0 has four more corner radars than Autopilot, which is the biggest difference between the two companies’ approaches to perception. Elon Musk represents those with strong faith in the potential of computer vision plus AI, while Nissan is more balanced and conservative in weighing cameras against radars.

Does this mean that ProPilot 2.0 doesn’t value vision at all? This issue needs to be viewed from two different aspects.

Compared with Tesla, Nissan does not seem to value vision as much. Among traditional car companies, however, Nissan is the one that values vision the most.

Tetsuya Iijima said at the press conference that the three front cameras of ProPilot 2.0 form a single three-lens unit, composed of a fish-eye short-range camera, a main-view medium-range camera, and a narrow-view long-range camera, which together provide long-range and three-lane perception coverage. The supplier of this camera system is the vision perception leader, Mobileye.

The three-lens camera solves the problem of perceiving multiple lanes ahead of the car, which is the basis for implementing automatic lane changes.

Although Tesla and Mobileye parted ways in 2016, Mobileye did not stop on its path of visual perception. After Tesla, other companies continued to use Mobileye’s EyeQ series chips to support visual perception in their ADAS systems. Among the traditional automakers that chose a Mobileye chip to handle perception processing for a three-lens camera, Nissan was the first.

Nissan mentioned another detail in the ProPilot 2.0 announcement: in addition to cameras, radars, ultrasonic sensors, and GPS, the system also uses high-precision maps provided by Zenrin, a veteran Japanese map-making company. Together they give the system 360-degree real-time information and the precise location of the vehicle on the road.

Combined with the news below, the clues fall into place.

In April 2017, Nissan announced that it joined Mobileye’s REM crowd-sourced mapping program. Prior to this, Japanese map-maker Zenrin had already joined the REM crowd-sourced mapping program.

REM, which stands for Road Experience Management, is a program initiated by Mobileye in which camera-equipped vehicles from various automakers upload road data in a crowd-sourced manner to jointly build and maintain high-precision maps. The program achieves high-precision positioning by capturing road signs and map data through the camera.

At the start of their collaboration in April 2017, Mobileye said that “commercialization of high-precision maps will be achieved in Japan in mid-2018,” although the actual launch came somewhat later. Mobileye’s visual perception, Nissan’s decision-making, execution, and supplier integration capabilities, and Zenrin’s high-precision map data together produced Japan’s representative assisted driving system, an alternative to Tesla’s Autopilot.

Going back to the beginning of the article, why do we say that “another camp represented by ProPilot 2.0 may become a strong competitor to Tesla’s Autopilot in the future”? Unlike Tesla’s aggressive and thorough strategy of vertical integration and independent R&D, Nissan has chosen to bring together the industry’s strongest resources and work with partners. The result is a system that is, in theory, competitive.

Elon Musk once said that Tesla’s data advantage over its competitors is a factor of 100, and that Tesla’s global road test fleet will soon reach 500,000 vehicles. Improving AI neural network algorithms relies heavily on training with massive amounts of data.

If Mobileye works with automakers and map makers in every regional market around the world to support a local equivalent of ProPilot 2.0, would Tesla still have such a significant data advantage?

At CES 2018, Mobileye announced a cooperation with NavInfo based on the REM crowd-sourced mapping program. How far away is China’s ProPilot 2.0?

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.