Yesterday, Elon Musk made another important announcement on Twitter:

“Tonight we’re pushing a major Autopilot software update to North America customers. Version 9.0 adds a lot of functionality and introduces a new Navigate on Autopilot feature. More about this in the blog post coming soon.”

So even though we’ve previously discussed Autopilot V9.0 in a dedicated article (http://mp.weixin.qq.com/s?_biz=MjM5NTIyMjA2MQ==&mid=2656745942&idx=1&sn=dbe41e488f9e178a1b891b36b7b484c4&chksm=bd55de948a225782f8d5748af596ed03fbbb85bf4167183c57d7fc828411d98de3b9a1a0791a&scene=21#wechatredirect), we’re still focused on it today.

Why talk about Autopilot V9.0 again? First, the previous article was fairly technical, and some readers didn’t fully understand it. Second, the most important feature of V9.0, Drive on Navigation, was already running in shadow mode in previous versions, and it is the core topic of this article. Finally, Tesla Autopilot, like other advanced driver assistance systems and L3 autonomous driving systems, has always been controversial; car owners, industry insiders, and the public have many questions about it, which this article will also address.

The first question: What is Drive on Navigation?

Tesla published a dedicated blog post about Drive on Navigation yesterday (which shows how much importance the company attaches to it), but the name has changed from Elon’s naming on Twitter to Navigate on Autopilot. The change is a good one.

You see, Drive on Navigation means “follow navigation”, which leaves you wondering what the feature actually does. Navigate on Autopilot, by contrast, reads plainly as “navigating on Autopilot”.

Take a look at the official definition:

> Our most advanced Autopilot feature to date, Navigate on Autopilot, is an active guidance feature that, under driver supervision, guides a car from a highway’s on-ramp to off-ramp, including suggesting lane changes, navigating highway interchanges, and taking exits. Its design goal is to make driving on the highway with Autopilot more convenient and efficient in finding the most direct route to your destination.

It’s no secret that the numerous misuses of Autopilot have made Tesla cautious. To put it plainly, here’s how it works: you drive onto the highway, input your destination, activate Navigate on Autopilot, and it takes control of the steering wheel (yes, you read that correctly), accelerator, and brakes, performing all driving operations until the vehicle exits the highway. However, it’s important to note that the driver must monitor the car at all times.

Below is the official demonstration video.

This covers the basics of what Navigate on Autopilot does, but it brings up another question: I mentioned that Autopilot takes control of the steering wheel, but in the video, the driver is gripping the wheel. What’s going on? We’ll explain later.

The second question: How does Tesla approach driving safety?

This is a large and fascinating question. In the public’s perception, Tesla’s approach of collecting road data from vehicles sold around the world to develop new features looks like “treating users as guinea pigs, ignoring their safety, and conducting a massive, reckless industry experiment in autonomous driving…”

How does Tesla really view safety? As it happens, this came up during the recent Tesla Q3 earnings call, so let’s look at that.

The Q3 Tesla earnings call was quite interesting. Elon Musk opened the call, of course, and the first executive to speak after him was Tesla’s Chief Safety Engineer, Madan Gopal.

Let me first provide some background: to date, Tesla has developed and delivered three car models, Model S, Model X, and Model 3, all of which have received five-star safety ratings from the three top safety rating agencies: IIHS and NHTSA in the USA, and E-NCAP in Europe.

However, Model 3 is still different from the other two big brothers. Madan Gopal explained that since 2011, there have been 943 cars that have received the five-star safety ratings from NHTSA, and Model 3 has the lowest injury probability among these 943 cars.

What comes next is crucial:

> Let me say more about how we view safety. It’s Elon’s top concern. You can look at how Model 3 performs in the most difficult test in center highway safety, which is the one risk of occupant injury. That’s not only the door frame, but also the pole that comes in from the side, the car has to handle that without the pole breaking into the cabin. This is not something the ratings agencies have tested directly, but we found in internal testing it’s the most difficult one. I just want to say, that safety is paramount, and Tesla is committed to making it the top priority. Above any rating or test, Tesla’s real-world safety record is unmatched by any other automaker.

Let me recap: to date, Tesla has developed two platforms (Model S and Model X share one), and on the second platform it has built the safest car among the 943 five-star models. Achieving this took a lot of effort. Elon has previously explained on Twitter that the body design of Model 3 could have been simpler, and that most of the quality differences come from its higher level of safety compared with other car models.

So how does Tesla view safety? Safety first. The phrase may sound like a corporate slogan, and most automakers say it. Tesla, however, has the vehicle safety results to back it up.

The third question: Why does Autopilot need to be updated iteratively?

This is the most critical question of this article because Tesla’s ability to update Autopilot iteratively is closely tied to its whole-vehicle OTA mechanism. This exposes Tesla to various criticisms, such as using users as guinea pigs, treating lives as child’s play, launching products with incomplete functionality, and relying on users to help develop functions after launch. On the other hand, Tesla has excelled in NHTSA crash tests and built the safest car model among those rated. This seems quite contradictory.

I’ll try to explain Tesla’s logic: why it chose to move from an assisted driving system to an autonomous driving system through iterative updates instead of developing an autonomous car directly. Elon has actually explained this himself, after several tragedies caused by user misuse of the Autopilot system.

“Our current assisted driving system has been proven to provide better safety than human driving, with a road fatality rate one-third of the world average (according to NHTSA statistics). Even if our system is just 1% better than human driving, we can save 12,000 lives among the 1.2 million people killed in car accidents each year. So, I think that if you have such a safety technology, hiding it just because of public pressure or legal prosecution is morally wrong.”

NHTSA Report: Use of Autopilot Reduces Accident Rates by Almost 40%.

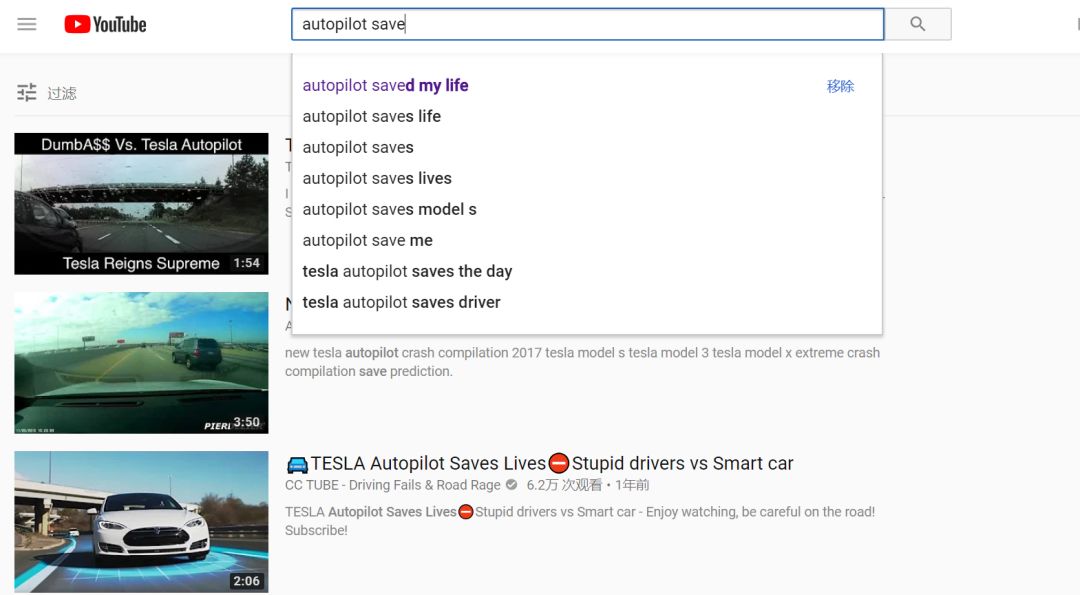

We often see news reports of Tesla car accidents resulting in fatalities, but if we search on YouTube, there are also many cases of owners using Autopilot to avoid accidents. Overall, Autopilot helps human drivers in terms of driving safety, and this level continues to improve, which is why Autopilot constantly iterates and updates.

This explanation seems to be consistent with the logic behind developing a five-star safety car model: to always provide the safest car for users.

The fourth question: What is the next challenge for Autopilot?

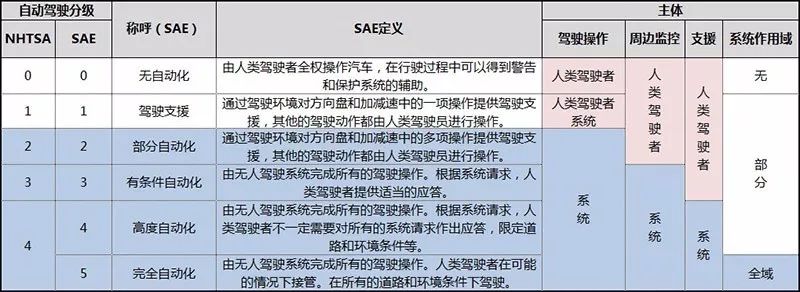

From a user perspective, Autopilot is becoming smarter and taking over everything, automatically performing all driving operations. Under the autonomous driving levels defined by SAE International (the Society of Automotive Engineers), Navigate on Autopilot matches the standard description of an L3 autonomous driving system.

L3 level autonomous driving systems execute all driving operations, but the human must still be ready to take over the vehicle when the system requests it.

Let’s compare the other levels: L1 and L2 require the driver’s attention and the system is only an assistant, with the driver being responsible. Whereas L4 and L5 do not require driver participation, with the system fully taking control and being responsible.

Only at L3 does the system take over driving duties intermittently, so the driver may relax and watch a movie one moment and must be focused on driving the next, with responsibility shifting back and forth… Now you can understand why Li Xiang thinks L3 is not user-friendly.
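To make the responsibility split concrete, here is a minimal Python sketch of the levels as this article summarizes them. It is an illustration only, not the SAE text: the `Level` enum and the `responsible_party` helper are hypothetical names, and the L3 rule (responsibility returns to the driver once the system requests a takeover) is a simplification of the description above.

```python
from enum import IntEnum


class Level(IntEnum):
    """Driving automation levels, as summarized in this article."""
    L1 = 1
    L2 = 2
    L3 = 3
    L4 = 4
    L5 = 5


def responsible_party(level: Level, takeover_requested: bool = False) -> str:
    """Return who is responsible while the system is engaged (hypothetical helper)."""
    if level <= Level.L2:
        # L1/L2: the system only assists; the driver is always responsible.
        return "driver"
    if level == Level.L3:
        # L3: the system drives until it asks the human to take over.
        return "driver" if takeover_requested else "system"
    # L4/L5: no driver participation is required.
    return "system"


if __name__ == "__main__":
    for level in Level:
        print(level.name, responsible_party(level), responsible_party(level, True))
```

Only the L3 row changes when `takeover_requested` flips, which is exactly the back-and-forth handover that makes L3 awkward in practice.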

Engineers tend to have blind spots when designing such levels, and they often fail to consider the challenges of real-life scenarios. That’s why many car companies, including Byton, General Motors, Volvo, and Ford, have announced that they are abandoning L3 development and will instead pursue L2 and L4 systems in parallel. Although L4 is the greater technical challenge, responsibility at that level is very clear, which avoids the endless difficulties of L3.

But isn’t the Audi A8 the world’s first car equipped with an L3 autonomous driving system? Isn’t Audi afraid of hidden dangers?

Regarding this issue, I can only say that it is the triumph of Audi’s marketing department, which has allowed Audi to leapfrog both Tesla and Waymo in terms of publicity in the field of autonomous driving. After all, the former only has a driving-assist system and the latter’s autonomous driving car has yet to be mass-produced.

In fact, how could Audi not know about the dangers of L3? The Audi A8’s autonomous driving system works like this: the system takes control of the car and performs all driving operations only when traffic is congested and the speed drops below 60 km/hour; once the speed exceeds 60 km/hour, the system issues a warning and stops intervening.
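The operating rule described above can be sketched as a tiny state update in Python. This is an illustration of the article's description, not Audi's implementation: the `step` function and its arguments are hypothetical, the system is assumed to engage automatically whenever conditions allow, and treating exactly 60 km/hour as outside the operating envelope is an assumption.

```python
from typing import Tuple

MAX_SPEED_KMH = 60.0  # threshold quoted in the article


def step(active: bool, speed_kmh: float, congested: bool) -> Tuple[bool, bool]:
    """Return (system_active, warn_driver) for the next moment (hypothetical helper).

    active: whether the system was driving just before this update.
    """
    inside_envelope = congested and speed_kmh < MAX_SPEED_KMH
    if inside_envelope:
        # Congested and slow: the system performs all driving operations.
        return True, False
    # Outside the envelope: the system stops intervening, and it warns
    # the driver only if it had been driving until now.
    return False, active


if __name__ == "__main__":
    print(step(False, 40.0, True))   # slow, congested traffic
    print(step(True, 70.0, True))    # traffic speeds up while the system is driving
    print(step(False, 70.0, False))  # normal driving, system never engaged
```

The only input combination that keeps the system driving is congested traffic below the threshold, which illustrates how narrow the feature's operating range is.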

How often can you drive an Audi A8 below 60 km/hour for a long stretch? This is a feature with a very narrow range of application. By the same token, couldn’t Tesla and GM also achieve autonomous driving below 60 km/hour?

This is the success of Audi’s marketing department.

Moving on to Tesla, will Autopilot make the transition from L2 to L4? This is a complex question that depends on progress in several fields: computer science, deep neural networks fed by ultra-large-scale data, sensor performance, and chip computing power. Regardless of these factors, however, Tesla still has one unresolved problem: redundant systems.

## What is a redundant system?

A redundant system is a design in which two or more identical and relatively independent configurations are used to increase the reliability of the system. For example, if the brakes fail while a car is driving itself, a separate and independent braking system intervenes immediately to keep the vehicle operating safely.
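The braking example can be sketched as a simple failover pattern in Python. This is a toy illustration under stated assumptions, not how any production vehicle is built: `BrakeUnit`, `RedundantBrakes`, and `BrakeFailure` are hypothetical names, and a real system would also need independent power, communication, and fault detection.

```python
class BrakeFailure(Exception):
    """Raised when a brake unit cannot apply braking force."""


class BrakeUnit:
    """One independent braking configuration (hypothetical)."""

    def __init__(self, name: str, healthy: bool = True) -> None:
        self.name = name
        self.healthy = healthy

    def apply(self, force: float) -> str:
        if not self.healthy:
            raise BrakeFailure(f"{self.name} failed")
        # Return the name of the unit that actually did the braking.
        return self.name


class RedundantBrakes:
    """Tries each independent unit in order until one succeeds."""

    def __init__(self, *units: BrakeUnit) -> None:
        self.units = list(units)

    def apply(self, force: float) -> str:
        for unit in self.units:
            try:
                return unit.apply(force)
            except BrakeFailure:
                continue  # fall through to the next independent unit
        raise BrakeFailure("all brake units failed")


if __name__ == "__main__":
    brakes = RedundantBrakes(BrakeUnit("primary", healthy=False), BrakeUnit("backup"))
    print(brakes.apply(0.5))  # the backup unit takes over
```

With a single unit, one failure means no braking at all; with an independent backup, the request still succeeds, which is the reliability gain redundancy is meant to provide.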

Today’s Tesla models do not have the complete redundant systems required for autonomous driving. Cold Melon (Weibo ID: Cold Melon), a 42nd Garage Community member and automotive electronics engineer, commented that an architecture supporting autonomous driving generally has two kinds of backup (redundant) design, power supply and bus communication, covering at least steering, braking, and power drive. These can be collectively described as redundant power and actuators; beyond them, redundancy is also needed for perception, positioning, and computing. In these areas, Tesla has not done much work.

However, at the technical level, we can see that Tesla is pushing ahead with the rollout of functions such as Navigate on Autopilot. Although some users have criticized the new system for not being good enough, don’t forget that Tesla’s Autopilot 2.0 hardware went from “almost unusable” to the best assisted-driving system on the market in just a year.

According to Stuart Bowers, Vice President of Autopilot Engineering, Tesla plans to deploy autonomous driving technology in the next 6-12 months. What will happen in that time? Tesla’s AI chips, which provide the redundant design, computing power, and fault tolerance needed for autonomous driving, will go into mass production and reach the market.

So how does Tesla solve the two problems, that responsibility under L3 is unclear and that its vehicles lack the redundant design L4 requires?

On the Q2 earnings call, Stuart Bowers was asked when the technology for driving autonomously from the east coast to the west coast of the United States would be deployed, and he offered an interesting idea:

> it’s taking those same kind of features we’ve been working on, probably deploying them in the form of active safety features. That’s like a thing we can do already to understand like – use this rich understanding of the environment to actually try to keep you safer.

In other words, Autopilot will gain functions similar to the self-driving system Tesla has been developing, and they may be released as active safety features. As Autopilot already does, they keep you safe by building a comprehensive understanding of the surrounding traffic.

What does it mean to “deploy the self-driving system as an active safety feature”?

Active safety features, as opposed to passive safety features such as seat belts, airbags, and crash structures, mainly refer to functions such as ABS, ESP, and lane departure warning, as well as assisted driving systems such as Autopilot and General Motors’ Super Cruise.

The interpretation is therefore as follows: Tesla is confident that it can deliver self-driving technology in the next 6-12 months, but its current vehicles do not have redundant systems. So should it hold off on pushing the technology to users?

No, Tesla’s product philosophy on Autopilot is to push it to all users as soon as the system’s safety improves. The system will become more and more intelligent until it achieves self-driving. However, even when Autopilot evolves to the self-driving stage, users must continue to monitor the surrounding traffic conditions, as Autopilot currently does not have a redundant system – now you know why the driver’s hand cannot leave the steering wheel in the official demo video at the beginning of the article, right?

You may ask: if the car can drive itself, why do I still have to hold the steering wheel? CBS host Gayle King asked Elon this question, and his answer was that Autopilot with self-driving capability is ten times safer than human driving.

It can be said that starting from Autopilot V9.0, the self-driving field has ushered in a major variable. Interestingly, during the recent Alphabet earnings conference call, Alphabet CFO Ruth Porat revealed that Waymo’s self-driving cars have started paid operations. How to cover the world from a few cities is one of the main challenges facing Waymo.

The show has just begun.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.