In October 2016, Tesla announced the mass production of vehicles equipped with Autopilot 2.0 hardware. Two years later, Tesla has begun rolling out the version 9.0 (2018.40) software update to owners worldwide. Starting with version 9.0, this advanced, standard-fit autonomous driving suite is officially no longer “idle” and will play a role in Tesla’s journey towards L4 autonomous driving.

First, let’s take a look at a video that shows how the latest version of Autopilot performs on mass-produced cars: it perceives and fuses road information, plans a path and makes decisions, and finally carries out control and execution. In other words, the video presents the basic steps of an L4 autonomous driving demo.

Camera First: An Almost Impossible Challenge

Many owners who have watched the video are anxiously awaiting the update, yet it is clear that the Autopilot team is at least one year behind Tesla’s plan. Elon Musk’s promise to demonstrate fully autonomous driving from California to New York, a distance of 4,500 kilometers, by the end of 2017 has been delayed until the release of Autopilot 10.0.

The Autopilot team is therefore undoubtedly Tesla’s “king of delays”, surpassing even the Model 3 project team. Why did it take two years to reach this level of technical capability?

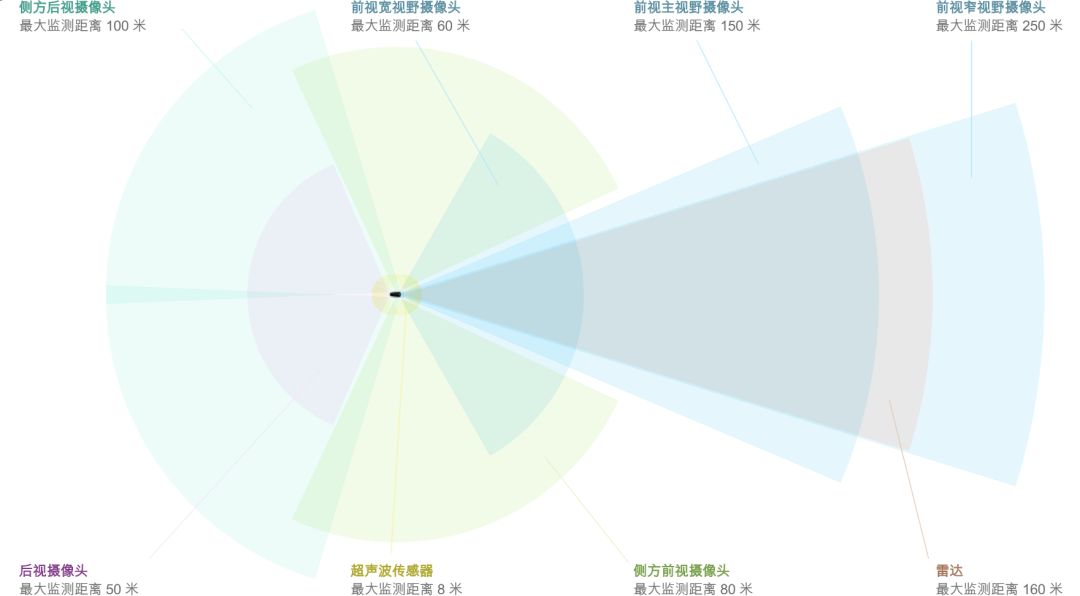

Let’s take a closer look at Autopilot 2.0 hardware today:

- 3 front cameras: wide-angle (60 meters), mid-range (150 meters), telephoto (250 meters)

- 2 front side cameras (80 meters)

- 2 rear side cameras (100 meters)

- 1 rear camera (50 meters)

- 12 ultrasonic sensors (double the detection distance/accuracy)

- 1 enhanced front radar (160 meters)

- Tesla Vision visual processing tools based on deep neural networks

- NVIDIA custom Drive PX2 computing platform

It is easy to see that the lack of LiDAR is not the only gap: Autopilot 2.0 hardware uses just one millimeter-wave radar. By comparison, NIO Pilot is equipped with 5 millimeter-wave radars, and the XPeng G3, which carries heavy Tesla genes, is equipped with 3.

Considering that the detection distance of the ultrasonic sensors is only 8 meters, cameras play a critical role in the perception of Tesla’s autonomous driving cars.

Elon Musk’s guidance for the Autopilot team after taking it over can be summed up as: 360° visual perception + minimal ultrasonic and millimeter-wave radar perception + computer vision + deep neural networks = autonomous driving.

This plan vividly embodies Elon’s first-principles thinking, which starts from the most basic physical principles. The perception and computing capabilities of Autopilot 2.0 hardware already exceed those of a human being, so in theory, once the software catches up, the driving capability it can achieve should also exceed that of a human being. More precisely, it should be “ten times that of a human being” (Elon’s words).

It has to be said that this vision is very idealistic. In actual implementation, countless pitfalls await Tesla at both the software level and the hardware level.

First, at the software level, many top computer vision experts from prestigious universities believe that the field itself still has obvious bottlenecks. The CEO of an autonomous driving start-up once commented that, at this stage, it is necessary to insist on a redundant multi-sensor fusion solution. With current computer vision technology, anyone who brags about how powerful their deep learning is and claims that they can do low-cost autonomous driving with just a camera is a rogue.

In fact, more than 90% of autonomous driving practitioners agree with this view, namely that redundant multi-sensor fusion is the only feasible solution for commercializing autonomous driving at this stage.

Here is an interesting point. Consider the people who have led the Autopilot team:

- Project Founder Sterling Anderson

- VP of Software Chris Lattner

- VP of Software Jinnah Hosein

- VP of Software David Nistér

- Software Manager Sameer Qureshi

- Project Manager Jim Keller

- VP of Engineering Stuart Bowers

- Autopilot Director of Vision/AI Andrej Karpathy

These people have two things in common: they are all top talents in robotics, computer vision, or hardware development whose work spans academia and industry, and their previous careers had nothing to do with autonomous driving.

Therefore, I will venture an irresponsible guess: Elon’s insistence on a camera-first strategy for delivering autonomous driving is so extreme and radical that established experts in the field have little interest in joining the Autopilot team to take on this nearly impossible challenge.

Elon boldly hired talented people who had strong abilities but little knowledge of autonomous driving. However, he himself obviously underestimated the challenge of achieving autonomous driving primarily with cameras. This is why it took Tesla two years to achieve a very basic autonomous driving demo, and why six of the eight people above chose to resign after realizing that they had stepped into an unprecedentedly deep pit.

What is so difficult about camera-first?

Among all autonomous driving sensors, including LiDAR, cameras have the highest information density. Even for manufacturers that follow the multi-sensor fusion route, the importance of vision-based perception in the overall autonomous driving system continues to increase. Because images carry the most information, cameras are gradually taking the central position in perception fusion.

What is the difficulty in achieving autonomous driving perception with cameras? According to very simple first-principles logic, there is nothing difficult: human driving relies mainly on what the eyes see, and Autopilot 2.0 has eight cameras plus a front millimeter-wave radar, which should be more powerful.

But theory and reality are two different things.

From an engineering perspective, first of all, camera perception degrades sharply in difficult conditions such as heavy rain or snow, night driving, and rapid changes in light. This is a challenge faced by all autonomous driving practitioners, and until it is thoroughly solved, autonomous driving cars that rely primarily on cameras will never be commercialized.

Moreover, Tesla faces some unique challenges.

With the mass production of models equipped with Autopilot 2.0 hardware, Tesla established Tesla Vision (its visual processing tool), a new underlying software architecture, and a cloud-based big data infrastructure, and redefined the Autopilot feature roadmap.

This means that all of the highly professional automotive-grade product experience that came from Mobileye in the Autopilot 1.0 era was completely abandoned, so Tesla now has to design automotive-grade products on its own. For example, the Autopilot team needs to meet dozens of demanding automotive-grade requirements covering temperature, humidity, radiation, conduction, delay, and power supply faults such as signal surges, reverse connection, and high voltage. For temperature alone, all components must run stably within a range of minus 40°C to 85°C.

This highly vertically integrated approach is very Tesla. In May 2016, the world’s first fatal accident caused by the improper use of Autopilot occurred in the United States. After that, the Autopilot team approached Bosch, the radar supplier, and obtained higher-precision raw radar data through an update of the radar driver. Bypassing Bosch’s algorithms, Tesla then pushed the radar-based Autopilot 8.0 update built on its own self-developed algorithms.

So behind the vertically integrated model that Tesla shareholders and fans admire, there are numerous interdisciplinary engineering challenges.

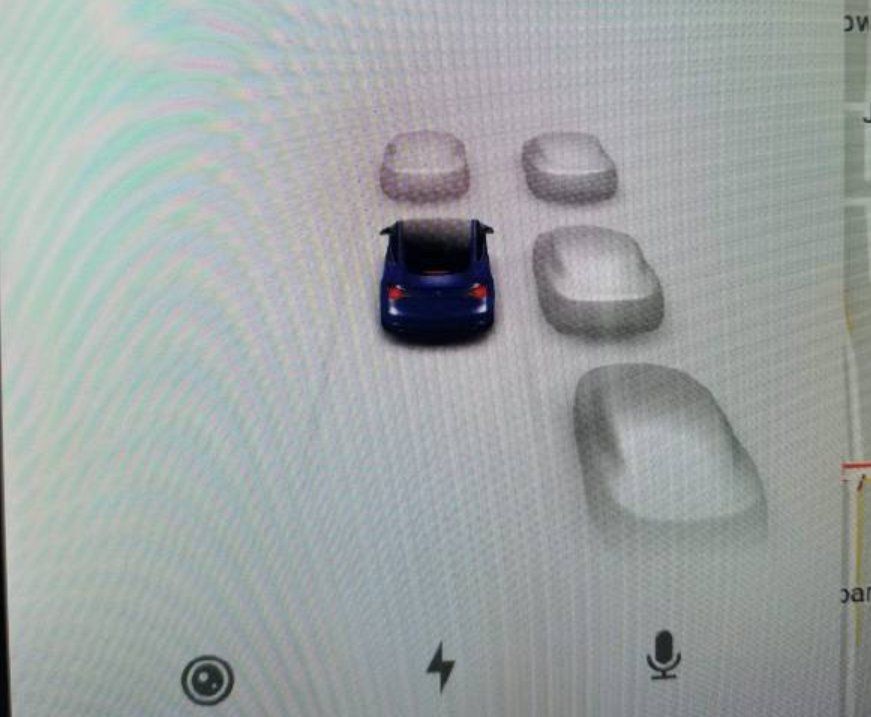

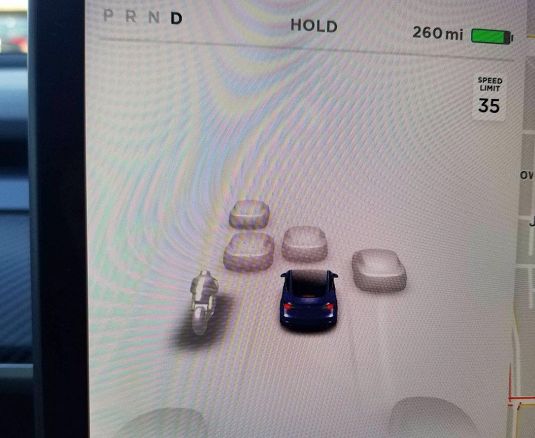

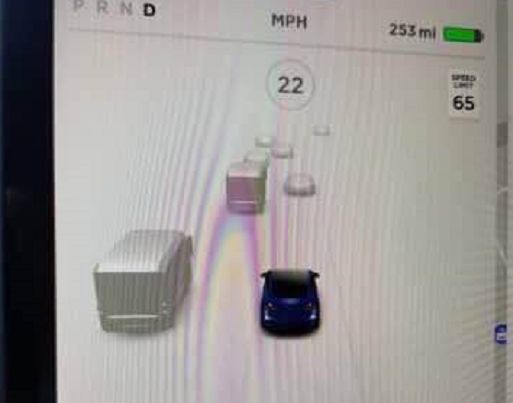

Now let’s talk about the software challenges. At the end of the above video, we can see that Autopilot 9.0 has already achieved basic perception coverage of three lanes. In addition, other videos show that Autopilot 9.0 can identify motor vehicles, motorcycles, and pedestrians to the side and rear of the car (using the side or rear cameras). This means that all 8 cameras of the vehicle have been fully enabled and have basic recognition capabilities for vehicles.

What kind of challenge does this pose for the software?

Perhaps we need to bring back the Mobileye-powered Autopilot 1.0 hardware for comparison. Autopilot 1.0 relies on only one front-facing camera for perception, while Autopilot 2.0 hardware has a total of three front-facing cameras.

Autopilot 1.0 is based on Mobileye’s monocular (single-camera) vision approach, which identifies targets through image matching and estimates a target’s distance from its size in the image. That is to say, the monocular camera itself cannot recognize objects, and Mobileye must maintain a huge database of sample features to match against every target the camera captures.

However, it is impossible to match all targets, which means that monocular vision is powerless when facing many non-standard objects (such as a flying plastic bag).
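The size-based ranging described above can be sketched with the textbook pinhole camera relation, where distance equals focal length times real object height divided by the object's height in pixels. This is only an illustration of the principle, not Mobileye's implementation: the `KNOWN_HEIGHTS_M` table, the function names, and the 1000-pixel focal length are all hypothetical.

```python
from typing import Optional

# Hypothetical size priors in metres; a stand-in for the sample feature database.
KNOWN_HEIGHTS_M = {"car": 1.5, "pedestrian": 1.7}


def monocular_range_m(focal_length_px: float, real_height_m: float,
                      pixel_height: float) -> float:
    """Pinhole-camera range estimate: Z = f * H / h."""
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return focal_length_px * real_height_m / pixel_height


def estimate_range(label: str, pixel_height: float,
                   focal_length_px: float = 1000.0) -> Optional[float]:
    """Return a range in metres, or None when the object has no size prior."""
    real_height_m = KNOWN_HEIGHTS_M.get(label)
    if real_height_m is None:
        # Unmatched object (e.g. a plastic bag): no known size, so no range.
        return None
    return monocular_range_m(focal_length_px, real_height_m, pixel_height)
```

Under these assumed numbers, a matched car that appears 30 pixels tall is placed at 50 meters, while an object missing from the table yields no estimate at all, which is the weakness with non-standard objects.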

Binocular vision, on the other hand, works on a principle similar to human eyes: it calculates distance from the disparity between the two images obtained by two cameras. That is to say, the system can judge a target’s shape, color, and position without a database.
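The disparity principle can be written down in one line for an idealized pair of parallel, calibrated cameras: depth equals focal length times baseline divided by disparity. The sketch below assumes that idealized setup; the function name and example values are hypothetical and do not describe any production system.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Depth of a point seen by two parallel cameras: Z = f * B / d."""
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity_px must be positive")
    return focal_length_px * baseline_m / disparity_px
```

With an assumed focal length of 1000 pixels and a 0.2 meter baseline, a 4-pixel disparity corresponds to 50 meters and an 8-pixel disparity to 25 meters. No size prior is needed, but a disparity has to be computed for every point of interest, which is where the extra computing load comes from.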

Tesla Autopilot 2.0 is the first in the industry to adopt a trinocular (three-camera) perception solution. The telephoto camera, with a detection distance of 250 meters, is responsible for long-distance perception in high-speed driving scenes. The main camera, with a detection distance of 150 meters, handles most mainstream perception scenes. The fisheye camera, with a detection distance of only 60 meters but a 120-degree field of view, is responsible for detecting traffic lights, road obstacles, and most close-range targets.

Trinocular perception most closely simulates the human eyes’ ability to change focus rapidly and cover both long and short distances, but at the same time, it brings huge challenges to the software and engineering departments.

From a software perspective, trinocular perception departs from the old route of monocular vision plus database matching and turns to real-time calculation of distance from disparity, which requires more computing power in the vehicle. In its laboratory, Mobileye uses three EyeQ3 chips to process three streams of image data.

Considering Tesla’s “light on radar, heavy on cameras” route, the cameras need to compute and identify more than 90% of environmental information in real time, including motor vehicles, non-motor vehicles, motorcycles/bicycles, pedestrians, animals, road signs, roads, traffic lights, lane lines, and so on. The algorithm complexity and computing power required for recognizing and fusing three image streams have doubled.

The engineering challenges of trinocular perception far exceed the software challenges.

Although monocular vision requires a large database to be updated and maintained, the algorithm and engineering challenges mean that the more human-like binocular vision will not surpass monocular vision to become mainstream in the next five years.

Trinocular vision, meanwhile, is a scheme that has never been applied to mass-production vehicles in the industry. Integrating three cameras into the cramped space at the front windshield is a challenge in itself, and the design must account for the ranging error caused by thermal expansion and contraction. The challenges in terms of automotive-grade compliance, manufacturing process, cost, reliability, and accuracy are unprecedented.
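To see why small mechanical shifts matter for ranging, here is a minimal sketch. It assumes an idealized two-camera disparity model and assumes that thermal movement shows up as a fixed offset in measured disparity; both are simplifications, and the function name and numbers are hypothetical rather than taken from Tesla's design.

```python
def depth_error_m(focal_length_px: float, baseline_m: float,
                  true_depth_m: float, disparity_shift_px: float) -> float:
    """Signed ranging error when the measured disparity is off by a fixed shift.

    Uses the idealized relation Z = f * B / d for both true and measured depth.
    """
    true_disparity_px = focal_length_px * baseline_m / true_depth_m
    measured_disparity_px = true_disparity_px + disparity_shift_px
    if measured_disparity_px <= 0:
        raise ValueError("shifted disparity must stay positive")
    measured_depth_m = focal_length_px * baseline_m / measured_disparity_px
    return measured_depth_m - true_depth_m
```

With an assumed 1000-pixel focal length and 0.2 meter baseline, a half-pixel shift puts a target at 50 meters about 5.6 meters too close, and a target at 100 meters about 20 meters too close. The error grows roughly with the square of the distance, so mounting tolerances that are harmless at close range become significant for long-range perception.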

Considering that Tesla’s autonomous driving software and engineering R&D were relatively weak compared to Waymo, Velodyne, Mobileye, etc. in October 2016, Tesla’s choice of trinocular vision is somewhat “overconfident” in spirit.

The challenge is undoubtedly severe, which may explain the frequent executive turnover in the Autopilot team over the past two years.

Autopilot 9.0 Version

As we can see from the video above, Autopilot 9.0 has basically achieved perception and fusion of road conditions, path planning and decision-making, and finally control and execution in simple scenarios such as highways and intercity expressways.

In other words, this is a very primitive L4 autonomous driving technology demo. The difference is that other companies’ L4 demos exist in promotional videos, while Tesla has achieved it on mass-produced vehicles. This is probably why Elon thanked three core Autopilot executives on the Q2 earnings call.

But it must be pointed out that the technical capabilities of Autopilot 9.0 still have huge room for improvement, and the scenarios it can cover are extremely limited. In fact, Tesla finds itself more conflicted than ever.

On the one hand, because it monitors the vehicle’s surroundings more widely than a driver can, Autopilot has avoided countless accidents. There are various videos of Autopilot avoiding accidents on YouTube.

On the other hand, more than 90% of the dozens of Autopilot fatal accidents from May 2016 to the present were caused by owners’ excessive trust in Autopilot. In control and execution, Tesla has made the algorithm very smooth, which to some extent leaves the driver feeling “bored” while Autopilot is engaged and finally leads to accidents due to negligence.

Starting with 9.0, having already taken over the accelerator and brake, Autopilot now technically supports taking over the steering wheel to change lanes. This may cause more accidents.

How can this problem be solved?

Apart from stricter monitoring of drivers’ attention, Tesla introduced the concept of Level 4 active safety features on the last earnings call.

Here’s a brief introduction. One of the key differences between active safety features and L4 is that under active safety features the driver is the primary responsible party, while under L4 the autonomous driving system is.

On the last earnings call, Stuart Bowers, Vice President of Autopilot Engineering, said that Tesla is confident in implementing L4 in the next 6-12 months.

In the period between 9.0 and the full implementation of L4, the constantly improving Autopilot will still be helpless in many scenarios, yet it can also bring users practical improvements in driving safety. Tesla can only require users to remain the primary responsible party and to pay attention to the road ahead at all times.

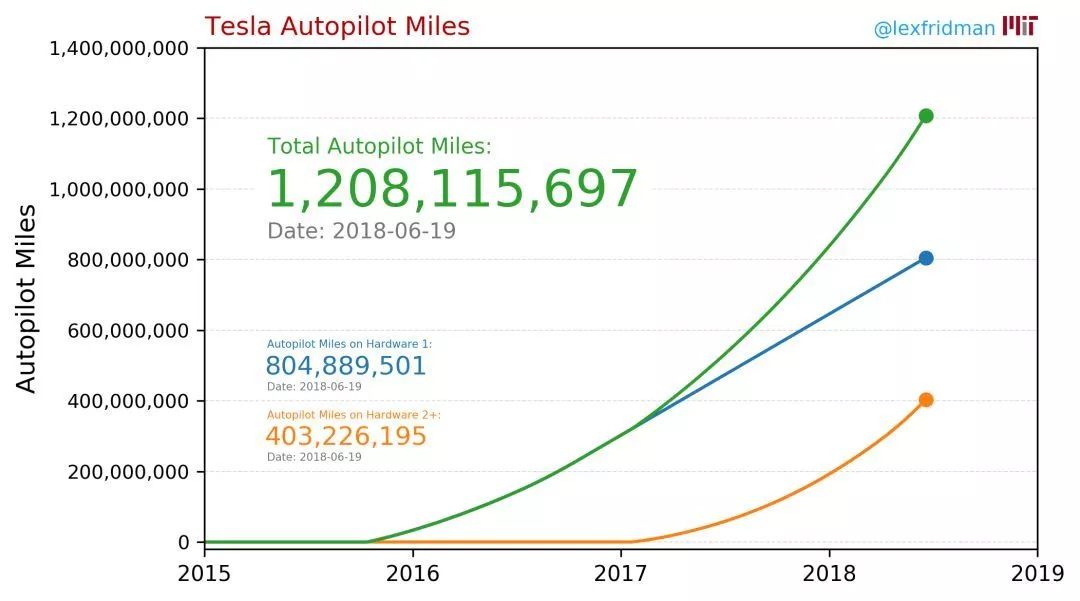

Is 6-12 months too optimistic? It needs to be pointed out that as of June 19th, the number of Tesla vehicles with Autopilot 2.0 hardware on the road had reached 200,000, and the cumulative road test data had reached 400 million miles. Autopilot 3.0 hardware, driven by AI chips, will be mass-produced and put into use next year. At the same time, the Model S/X with Autopilot 2.0 hardware, in mass production since October 2016, and the Model 3 with Autopilot 2.5 hardware can be upgraded to Autopilot 3.0 hardware for free.

It can be said that the release of Autopilot 9.0 marks Tesla’s real entry into the research and development of L4 autonomous driving technology. Thanks to its strategic deployments in data, chips, and AI over the past two years, Tesla is stepping into L4 already on a fast track.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.