This article is from 36Kr, written by GentlemanZ, and is reprinted with permission.

This was once hardly a topic worth debating. Until recently, the anti-LiDAR camp in autonomous driving was small and the pro-LiDAR camp was firmly mainstream. As autonomous driving technology has evolved, however, cameras have steadily gained importance across the perception layer. Let us explore the debate over whether LiDAR will survive.

The birth of LiDAR for cars

LiDAR has undoubtedly risen to prominence along with the boom in the autonomous driving industry. The following is a list of investments in and financing of LiDAR companies worldwide, compiled by Lei Feng Net and Xin Zhi Jia.

As you can see, autonomous driving players Baidu, Ford, Daimler, Delphi, ZF, and Intel have all invested in LiDAR. In addition, Google’s Waymo announced in February last year that it had cut the cost of LiDAR by more than 90%; General Motors announced last October that it was acquiring LiDAR start-up Strobe, whose technology is said to reduce LiDAR costs by 99%; and LiDAR giant Velodyne’s new “Megafactory” production line has begun operation, with capacity expected to reach 1 million units per year.

So how did LiDAR come to be used in autonomous cars? Before answering this question, let’s look at the three major technology schools in today’s autonomous driving perception layer, with the year each player started developing autonomous driving in parentheses:

- Google (2009), Baidu (2013): LiDAR as the primary sensor

- Apple (2016), Uber (2015), Roadstar (2016): Multi-sensor fusion

- Tesla (AP2.0: 2016), WeRide (2016), AutoX (2016): Camera as the primary sensor

The earliest wave of autonomous driving can be traced back to the Google X self-driving project launched in 2009. Professor Sebastian Thrun was the founder of the Google X lab and is considered the father of Google’s driverless car. Before joining Google, he led the Stanford University team that won the second autonomous driving challenge held by the Defense Advanced Research Projects Agency (DARPA) in 2005. The Google X project drew many members from that Stanford team, who brought with them a perception approach built around LiDAR, with cameras and other sensors in supporting roles.

LiDAR perceives more accurately than cameras and records richer detail, so it was considered the core sensor of autonomous driving perception at the time. But this approach reflected the limits of its era: computing power was insufficient, and computer vision and deep learning had not yet made their breakthroughs, which constrained what practitioners imagined cameras could do.

Jianxiong Xiao, founder of autonomous driving startup AutoX, said that both in the past and today, the autonomous driving industry has been dominated by robotics experts, who tend to focus on building reliable individual components and integrating them through engineering design. That is why companies such as Google and Baidu adopted a “heavy perception, light computation” approach, relying on LiDAR’s higher reliability and accuracy to ease the burden on downstream computation and decision-making.

So, given the progress in computer vision, AI, and chips over the past two years, what are the performance limits of cameras?

The most outspoken member of the anti-LiDAR camp is Tesla CEO Elon Musk, whose latest verdict on LiDAR for autonomous cars is “expensive, ugly, and unnecessary.” The first two problems can be solved as leading companies invest more capital and talent, but “unnecessary” strikes at the very reason for LiDAR’s existence.

Below are his views:

- Obviously, the road system is designed for passive optics. To achieve autonomous driving in any given or changing environment, we must solve passive optical image recognition extremely well. Once you have solved it, what is the point of active optics (LiDAR)? It cannot read road signs. In my opinion, it is a crutch that will leave those companies in a very difficult position.

- If you pursue a highly sophisticated neural network approach and achieve very advanced image recognition, then I believe you have essentially solved the problem. You then need to fuse it with increasingly sophisticated radar information. Choosing an active photon generator in the 400 to 700 nanometer wavelength range is actually foolish, because you have already achieved that passively.

- You will eventually want active photon emission in the radar band, at a wavelength of about 4 millimeters, because it penetrates occlusions: you can “see” the road ahead through snow, rain, dust, fog, and anything else. What is puzzling is that some companies build active photon systems at the wrong wavelength. They have loaded cars with lots of expensive equipment, making them expensive, ugly, and unnecessary. I think they will eventually find themselves at a competitive disadvantage.

Musk laid out some basic physics quite candidly. Setting aside the parts that are hard for a general audience to follow, one key point emerges: in theory, cameras combined with sophisticated algorithms can handle perception, but the algorithms themselves pose significant technical challenges.
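To put the wavelengths Musk cites in context, the free-space relation wavelength = speed of light / frequency links a wave’s frequency to its wavelength. The short Python sketch below assumes 77 GHz, a common automotive radar band that the article itself does not name, as the example radar frequency; the function names are illustrative only.

```python
# Speed of light in vacuum, m/s (exact by SI definition).
C = 299_792_458.0


def wavelength_m(frequency_hz: float) -> float:
    """Return the free-space wavelength in metres: wavelength = c / f."""
    return C / frequency_hz


def frequency_hz(wavelength: float) -> float:
    """Return the frequency in hertz for a free-space wavelength given in metres."""
    return C / wavelength


if __name__ == "__main__":
    # Assumption: 77 GHz, a common automotive radar band, as the example radar frequency.
    radar_mm = wavelength_m(77e9) * 1e3
    print(f"77 GHz radar wavelength: {radar_mm:.2f} mm")
    # The visible band Musk refers to: 400 to 700 nanometers.
    for nm in (400, 700):
        print(f"{nm} nm visible light: {frequency_hz(nm * 1e-9) / 1e12:.0f} THz")
```

Run as a script, it prints a radar wavelength of about 3.89 mm, consistent with Musk’s “about 4 millimeters,” and frequencies of roughly 749 THz and 428 THz for the two ends of the visible band, thousands of times higher than the radar frequency.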

Tesla has also been steadily sharpening its focus on AI. One telling detail: after Autopilot software VP Chris Lattner resigned, Andrej Karpathy, who took over the Autopilot Vision team, became Tesla’s Director of AI.

Does anyone share his views? Yes: Dr. Du Yong of Yutong Technology believes it is feasible to solve road perception for autonomous vehicles with vision alone, though there is still a long way to go. In his view, autonomous vehicle development should be a process of vision gradually replacing high-end LiDAR.

Why might the camera be better suited than LiDAR to serve as the main sensor? While driving, most of the information a driver takes in comes from vision: motor vehicles, non-motor vehicles, pedestrians, animals, road signs, the road itself, and the lane lines around the vehicle. Studies have shown that about 90% of the environmental information a driver uses comes from vision.

This is also where the algorithmic challenge lies. The camera system must recognize and localize all of these targets, which makes learning algorithms based on object detection and recognition very complex. Fortunately, computer vision has produced some exciting breakthroughs.

At the 2015 International Conference on Computer Vision (ICCV), the team from Princeton University’s Computer Vision and Robotics Laboratory presented a deep learning approach described in their paper “DeepDriving: Learning Affordance for Direct Perception in Autonomous Driving.” Instead of recognizing every object in the scene, the team used a deep convolutional neural network to perceive driving affordances directly, that is, a small set of indicators that matter for driving decisions. This greatly reduces algorithmic complexity while markedly improving the robustness of autonomous driving algorithms, and it was regarded as a major breakthrough in autonomous driving technology.
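The direct-perception idea can be illustrated with a toy example. The Python sketch below is hypothetical: the `Affordance` fields, sign conventions, gains, and thresholds are assumptions chosen for clarity, not the DeepDriving paper’s exact indicators or controller. In a real system the indicator values would come from a trained convolutional network; here they are supplied by hand, and simple rules turn them into steering and speed commands.

```python
from dataclasses import dataclass


@dataclass
class Affordance:
    """Hypothetical subset of driving-affordance indicators a CNN might predict.

    Sign convention (assumed): positive values mean "to the left".
    """
    angle: float        # car heading minus road direction, radians
    dist_center: float  # lateral offset of the car from the lane center, metres
    road_width: float   # lane width, metres
    dist_ahead: float   # distance to the preceding car in the same lane, metres


def steering_command(a: Affordance, gain: float = 1.0) -> float:
    """Proportional steering (positive = steer left) that cancels heading and offset errors.

    The lateral offset is divided by lane width so both error terms are dimensionless.
    """
    return -gain * (a.angle + a.dist_center / a.road_width)


def target_speed(a: Affordance, cruise_speed: float = 20.0, safe_gap: float = 30.0) -> float:
    """Cruise speed (m/s) when the gap is at least safe_gap, scaled down linearly below it."""
    if a.dist_ahead >= safe_gap:
        return cruise_speed
    return cruise_speed * max(a.dist_ahead, 0.0) / safe_gap


if __name__ == "__main__":
    # Hand-written indicators standing in for the output of a trained network.
    sample = Affordance(angle=0.1, dist_center=0.35, road_width=3.5, dist_ahead=15.0)
    print(f"steering: {steering_command(sample):.2f}")
    print(f"target speed: {target_speed(sample):.1f} m/s")
```

For example, a car heading 0.1 rad to the left of the road while sitting 0.35 m left of a 3.5 m lane’s center, 15 m behind another car, gets a steering command of about -0.2 (steer right) and a target speed of 10 m/s. The point of the design is that the controller reasons about a handful of numbers rather than a full list of detected objects.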

The leader of this research is Dr. Jianxiong Xiao, the AutoX founder mentioned earlier. When he presented the work at ICCV on Princeton’s behalf, Dr. Xiao was an Assistant Professor at Princeton University and founder of its Computer Vision and Robotics Laboratory. Over the past three years, Dr. Xiao has initiated or taken part in almost all research on 3D deep learning. He helped release ModelNet and ShapeNet, the largest public 3D datasets (the “ImageNet” of 3D data); created Marvin, a foundational framework for 3D deep learning research that paved the way for later work; and introduced Deep Sliding Shapes, a 3D convolutional network for detecting 3D objects in RGB-D images. This record makes Dr. Xiao a prime example of bridging academia and industry.

Many autonomous driving companies have released road test demos, and among them only AutoX relies on a purely vision-based solution. Dr. Xiao has repeatedly said in interviews that “the potential of cameras has been underestimated. In theory, cameras can perform better than human eyes.” Notably, even a 64-line LiDAR struggles to exceed an effective detection range of 200 meters, while human eyes can easily spot threats several hundred meters ahead.

Are any big companies besides Tesla, AutoX, and NIO pursuing vision-based approaches to autonomous driving? Yes: Uber. The person behind this is Raquel Urtasun, who leads Uber’s vision-based technology effort.

In May 2017, while Uber’s Advanced Technologies Group was embroiled in a lawsuit with Waymo, Uber announced the establishment of a Canadian branch, Advanced Technologies Group Canada, where Urtasun and her team would focus on three research areas: environmental perception and object recognition for autonomous driving, vehicle localization, and high-precision mapping.

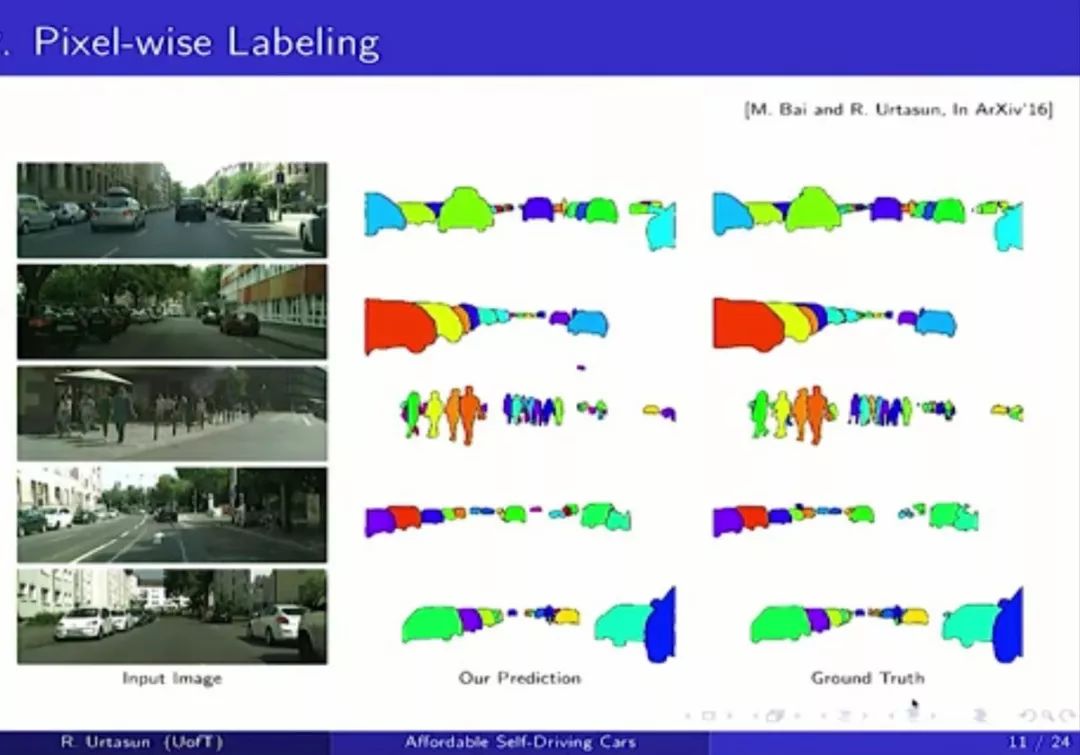

At the time, commentators saw the move, given the ongoing legal battle between Uber and Waymo, as a way for Uber to preserve its strength and avoid direct conflict. No one realized that Uber was actually opening up an entirely new, vision-based route to autonomous driving.

Urtasun, a former associate professor of computer science at the University of Toronto and president of the Canadian Machine Learning and Computer Vision Society, has spent years working to reduce the role of LiDAR in autonomous driving perception. Her long-held belief, shared by Jianxiong Xiao and Elon Musk, is that self-driving cars have little hope of widespread adoption unless they can do without LiDAR.

As one of the “world’s top researchers in machine perception and artificial intelligence” (in the words of former Uber CEO Travis Kalanick), Urtasun has found through her research that in some cases vehicles can obtain the 3D road information normally captured by LiDAR from ordinary cameras. Weeks after joining Uber, Urtasun presented her team’s research at a computer vision conference in New York, showing a system that runs in real time and matches LiDAR performance within a range of 40 meters. However, that range is far shorter than what high-end LiDAR systems support, so her team still has work to do.

Another reason Urtasun is skeptical of the LiDAR route is that high-precision mapping, one of her research topics, is made fundamentally slow and costly by its reliance on LiDAR, which in turn hinders the widespread adoption of autonomous cars.

If you have followed autonomous driving for a long time, you may also think of Amnon Shashua, CTO of Mobileye. Although Tesla and Mobileye have parted ways, the two companies remain surprisingly consistent in the economic reasoning behind building autonomous driving ASICs (EyeQ5) and high-precision map production (the REM architecture) around camera-centric technology. Now Uber ATG’s Canadian team, led by Urtasun, has strengthened this camp.

Cameras offer the richest information density and produce far more data than any other type of sensor. Li Xingyu, Business Director at Horizon Robotics, said that after many discussions with major OEMs and large Tier 1 suppliers, they unanimously concluded that vision-based perception is becoming ever more important across the entire autonomous driving system. Because cameras provide the highest density of image information, they sit at the center of perception fusion.

At the same time, he pointed out that visual perception still has its limits, and that multi-sensor fusion is in fact the only way to achieve the high reliability commercial autonomous driving requires. The cost and performance of millimeter-wave radar and ultrasonic sensors are already good enough for commercialization. The ultimate question, then, is whether LiDAR has a place among these “multiple sensors.”

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.