You have probably been inundated with news of the fatal Tesla Autopilot accident.

On May 7th, 2016, Joshua Brown, a 40-year-old from Ohio, USA, was killed when his Model S collided with a large trailer at an intersection. Autopilot was enabled at the time. Frank Baressi, the driver of the truck, said in an interview that the Model S was traveling too fast for the collision to be avoided.

This is Tesla’s official explanation:

The Model S was traveling on a divided highway with Autopilot enabled when a trailer crossed the road perpendicular to it.

Under strong sunlight, neither the driver nor Autopilot detected the white side of the trailer, so the brakes were not applied in time.

Because the trailer was crossing the road and sat high off the ground, the Model S passed underneath it, and the bottom of the trailer struck the Model S’s windshield.

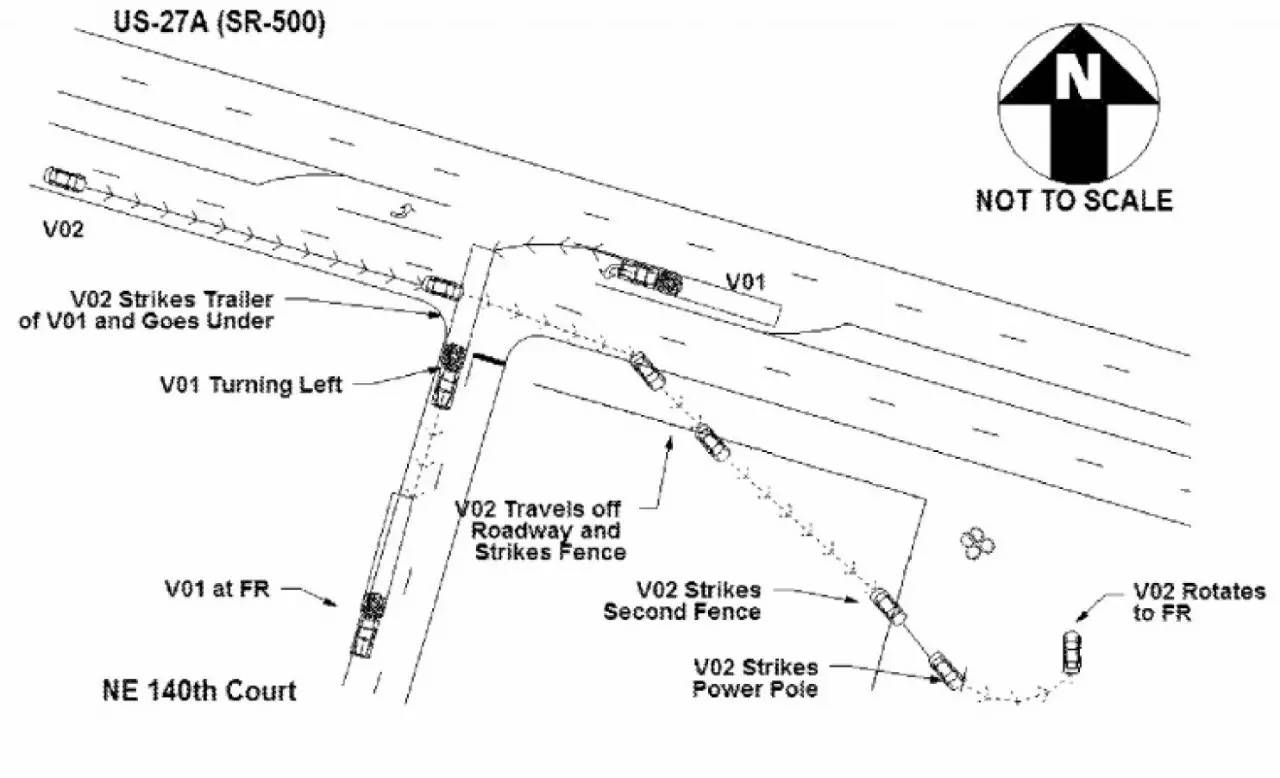

The diagram above shows an analysis of the accident (note the direction of the arrows):

V01 represents the trailer, which was turning left at the time of the accident;

V02 represents the Model S, which slid to the right after the collision.

There are several controversial points regarding this accident:

1. Why did Autopilot fail to recognize the trailer?

The Autopilot hardware on the Model S consists of a forward-facing Mobileye camera mounted in the middle of the windshield, a Bosch millimeter-wave radar beneath the front bumper, and 12 ultrasonic sensors distributed around the car. To keep mass-production costs down, Autopilot does not use lidar (laser radar) like Google’s self-driving cars do.

According to Tesla, strong sunlight and the trailer’s white body were the two reasons Autopilot failed to detect the obstacle. In addition, it has been speculated that the trailer sat too high to fall within the radar’s detection range.

Musk clarified on Twitter that the radar tunes out objects that look like overhead road signs to avoid false braking; the trailer was treated as such a sign, so the brakes were not activated.

In layman’s terms, Autopilot’s “vision” lacked the range, viewing angle, and precision needed to handle this situation.

Just 10 hours ago, Dan Galves, a Mobileye executive, revealed the real problem behind this collision. Current collision-avoidance technology, or automatic emergency braking (AEB), is designed for rear-end collisions, that is, for hazards that arise while following a vehicle ahead. When a vehicle crosses laterally in front of the car, the current Autopilot system is not designed to respond.

Galves emphasized that the current generation of technology was not designed to handle an accident like the one on May 7. Mobileye will launch lateral (turn-across-path) vehicle detection in 2018, and the European New Car Assessment Programme (Euro NCAP) will not include this capability in its safety ratings until 2020.

This statement is a strong rebuttal to Tesla. Tesla had emphasized that the accident occurred under a “special circumstance” and compared it with national statistics: this was the first fatality in more than 130 million miles driven with Autopilot activated, whereas across all US motor vehicle travel there is, on average, one fatality every 94 million miles. The implication was that a rare accident like this was statistically inevitable, and many people accepted this explanation.
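Tesla’s comparison is easier to read when both figures are put on the same scale. The sketch below converts them into fatalities per 100 million miles; the symbols r_Autopilot and r_US and the 100-million-mile normalization are introduced here for illustration and do not come from Tesla’s statement.

```latex
\begin{aligned}
r_{\text{Autopilot}} &= \frac{1\ \text{fatality}}{130 \times 10^{6}\ \text{mi}} \times 10^{8}\ \text{mi} \approx 0.77 \\
r_{\text{US}} &= \frac{1\ \text{fatality}}{94 \times 10^{6}\ \text{mi}} \times 10^{8}\ \text{mi} \approx 1.06
\end{aligned}
```

Because the Autopilot figure rests on a single fatality, it is a very uncertain estimate: one more fatal crash over the same roughly 130 million miles would raise it to about 1.54, above the national figure.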

However, Galves’ explanation exposes a limitation of Autopilot itself. This accident was not a “special circumstance,” but the predictable outcome whenever a vehicle crosses laterally into the car’s path.

Some industry experts also believe that lidar and vehicle-to-vehicle (V2V) communication might have prevented this accident. This view should be treated with caution, however, since no reliable experimental data supports it.

More intriguingly, Mobileye announced last night that it will partner closely with Intel and BMW on autonomous driving.

2. Is Autopilot misleadingly named?

Tesla’s Autopilot has been involved in accidents before.

At the end of May 2016, a European Tesla owner rear-ended a truck on a highway, drawing attention on social media. In the U.S., one Tesla crashed into a parked trailer and another crashed on Interstate 5, both with Autopilot enabled. Fortunately, no one was injured in any of these accidents.

During a press conference, Elon Musk stated, “It’s autopilot, not autonomous,” which shows that even he does not accept translating Autopilot into Chinese as “automatic driving”; “assisted driving” would be a more accurate rendering. However, this is not just a translation issue: even in English-speaking countries, Tesla drivers have spread Autopilot through social media and elevated it to almost god-like status.

Joshua Brown, who died in the May 7th accident, was an enthusiastic Tesla fan who posted videos on social media of his car following traffic with Autopilot enabled. Musk had previously retweeted Brown’s video of his Tesla successfully avoiding a truck, but the retweet was deleted after the accident.

Tesla promotes videos of Autopilot successes while also reminding drivers to keep both hands on the steering wheel, and these two messages contradict each other. With only a superficial understanding of the technology (public education about it is, in fact, still limited), fans tend to promote Tesla and exaggerate what Autopilot can handle. Car owners end up remembering not just the name, but the functionality as shown in the idealized scenarios of these videos.

Through this repeated sharing, Autopilot has gradually become synonymous with self-driving. Consumers do not remember the warnings; they remember the exciting new features. This is a problem Tesla must take seriously.

By contrast, traditional automakers appear more cautious, both in the terms they use, such as “automatic cruise” and “assisted driving,” and in how they promote related technologies.

3. Under what conditions should Autopilot be enabled?

In its public statement on the accident, Tesla noted that it “disables Autopilot by default and requires explicit acknowledgement that the system is new technology and still in a public beta phase before it can be enabled.”

Setting aside how many disclaimers are involved, I have only one question: since this technology is still in public beta, why not restrict the conditions under which it can be activated until it matures, instead of leaving the decision entirely to the driver?

For instance, Tesla could prevent Autopilot from being activated when the car enters a two-way road, to avoid collisions with crossing traffic, and likewise prevent activation at complex intersections.

Moreover, does Tesla warn drivers that misusing Autopilot could be fatal?

In any case, this is the first known fatal accident involving autonomous driving. It is a warning to every practitioner: we cannot rush new technologies into use, and we must be especially cautious in promoting and using them. However small the probability, we must learn to respect every life.

4. Why did Tesla wait until June 30th to announce the May 7th accident?

Responsibility for the accident is under investigation by the National Highway Traffic Safety Administration (NHTSA); until accurate findings are available, we should not maliciously assume that Tesla is to blame.

Let’s take a look at how Tesla announced the accident.

The accident occurred on May 7th, 2016, but it was not until nearly two months later, on June 30th, after NHTSA had begun investigating, that Tesla publicly announced the news.

Presumably, Tesla’s PR team did not want to draw public attention before the matter had been fully investigated, since doing so could have hurt the company. However, for an accident that the entire industry is watching, a proactive, timely, and accurate response is the attitude a responsible company should take.

What would Tesla have done if a second fatal accident had occurred before June 30th? When a technology has known limitations, manufacturers are responsible for informing users of the risks as soon as possible.

Some users asked Musk on Twitter why the news was not announced sooner, but he did not respond.

Tesla has long been the industry’s model of innovation, and on its way to greater success it will undoubtedly face many difficulties. Of course, I hope to see companies whose innovation changes the industry, but a mature company should handle problems more quickly, describe its product’s capabilities more accurately, and take responsibility courageously.

Joshua Brown did not have his hands on the steering wheel at the time of the accident. Even if Tesla was not the direct cause, it was an indirect one. Only by acknowledging this can Tesla do better.

This article is a translation by ChatGPT of a Chinese report from 42HOW. If you have any questions about it, please email bd@42how.com.